| MySQL DBAs and developers: oil your fingers and get ready to experience a new dimension of data replication. I am pleased to announce that Continuent has just released Tungsten Replicator 2.0, an open source data replication engine that can replace MySQL native replication with a set of advanced features.

A note about the source code. The current version of Tungsten Replicator available in the web site is free to use, but it is not yet the open source version. We need a few weeks more to extract the code from the enterprise tree and make a new build. But we did not want to delay the user experience. So everything that is in this build will come with the source code in a short while. In the meantime, enjoy what is available there and have as much fun as we are having.

|

Why you will want to install Tungsten Replicator 2.0

Tungsten Replicator has a real cool

list of features. I am sure that most MySQL DBAs would find something in that list that makes their mouth water in expectation.

Among my favorite features, there is one that looks so innocently non-important that you may be tempted to dismiss it. I am talking about global transaction ID, which is paramount in helping the DBA in switching from master to slave in case of failure or maintenance. I will show an example of a seamless failover in this article.

More things to get excited about: Tungsten allows multiple master replication, i.e. one slave receiving data from several sources, and parallel replication, meaning that a slave can apply changes from the master using many parallel threads. I will talk about all of those features in my blog. But to get to that point, I will need to start by covering the basic installation first. Since Tungsten is much more powerful than MySQL native replication, it also comes with greater complexity. We are working at reducing such complexity. In the meantime, you can start with the instructions that come in this post.

Getting ready

You will need at least two servers, with Java 1.6, Ruby 1.8, and MySQL 5.1 installed.

You may use your own virtual machines, or spare servers, or you can use a pre-defined VMWare image that you can use with VMware player (or VMware Fusion on Mac).

The following instructions refer to the pre-configured VM. You may skip the initial steps if you are using your own servers.

- download a pre-configured image

https://files.continuent.com.s3.amazonaws.com/Tungsten_MySQL_CentOS_5_5_VMWare_Image.7z

Warning: it's 1.5 GB, and it expands to 5.5 GB

- Expand the VM

- Make a copy of the VM. Change the directory names so that you will refer to them as tungsten_vm1 and tungsten_vm2

- launch both VMs

- Connect to each VM. User names and password for root are in a .doc file within the VM directory.

- Change the hostname of the VMs to tungsten1 and tungsten2 (don't forget to modify /etc/sysconfig/network to make the name sticky)

- Update /etc/hosts/ with the IP address and hostname of both servers

- Switch to the tungsten user

su - tungsten

- Create a directory $HOME/replicator

- Get the Tungsten package into that directory

cd replicator

wget https://s3.amazonaws.com/releases.continuent.com/tungsten-replicator-2.0.0.tar.gz

- Get the setup scripts from Tungsten Replicator home .

wget http://tungsten-replicator.googlecode.com/files/simple_install_master_slave.tar.gz

- unpack the scripts in $HOME/replicator

I know this was a long list, but it is not terribly difficult. More difficult would be setting all the above manually. As it is today, all you need to do is running the "set_replication.sh" script and Tungsten will come alive to your server in less than one minute.

To do things properly, you will need to do the same operations on both servers. So, assuming that you have done everything in

tungsten1, you can easily mirror the operations to

tungsten2. The virtual machines come with an already installed public SSH key that makes your installation life easier.

# in tungsten1

cd $HOME/replicator

ssh tungsten2 mkdir replicator

scp simple_install_master_slave.tar.gz tungsten2:$PWD

scp tungsten-replicator-2.0.0.tar.gz tungsten2:$PWD

ssh tungsten2 'cd replicator; tar -xzf simple_install_master_slave.tar.gz '

Now that you have the same set of files in both machines, you can trust the wisdom of the installation files and run:

# tungsten1

./set_replication.sh

ssh tungsten2 $PWD/set_replication.sh

This will start the Tungsten replicator in both servers.

Cleaning up

The sample scripts come with one that is dedicated to cleaning up. There is a "clear_cluster.sh" script that will remove all test data from the database, sweep the tungsten directory away, leaving your system ready to start afresh. As this is a testing environment, this strategy is not so bad. But be aware of the potentially destructive nature of this script, and don't use it in a production environment.

Under the hood

Tungsten replicator is a complex piece of software, and it's easy to get lost. So here are a few tips on how to get your bearings.

You will find a log file under $HOME/replicator/tungsten/tungsten-replicator/logs/.

This is quite a noisy log, which is supposed to give the developers all information about what's going on in case of a failure. For newcomers, it is quite intimidating, but we are working at making it easier to read. (

Be aware that you may find some references to "tungsten-enterprise" in the logs. Don't let this fact deter you. We are working at splitting the former name associations from the packages, and eventually you will only find references to modules named "tungsten-replicator-something" in the logs.)

At the end of the installation, you should have seen a line inviting you to modify your path to get the replication tools available at your fingertips. Most notable is

trepctl, the

Tungsten

Replicator

Con

Tro

L.

Using this tool, you can get some information about the replicator status, and perform administrative tasks. A glimpse at the

Tungsten Replicator Guide 2.0 will give you an idea of what you can do.

For now, suffices to say that you can use trepctl to get the state of the replicator.

Try, for example, the following:

$ trepctl -host tungsten1 services

NAME VALUE

---- -----

appliedLastSeqno: 0

appliedLatency : 0.933

role : master

serviceName : logos

serviceType : local

started : true

state : ONLINE

$ trepctl -host tungsten2 services

NAME VALUE

---- -----

appliedLastSeqno: 0

appliedLatency : 0.966

role : slave

serviceName : logos

serviceType : local

started : true

state : ONLINE

The most important things here are the "state" field, and the "appliedLastSeqno", which is the global transaction ID that we have mentioned before.

If you create or modify something in the master and issue this command again, you will see that the appliedLastSeqno will increment.

You can get some of this information from the MySQL database, where Tungsten keeps a table with the latest status. You may say that this table is roughly equivalent, at least in principle, to the information in SHOW SLAVE STATUS available with native replication.

$ mysql -h tungsten1 -u tungsten -psecret \

-e 'select * from tungsten_logos.trep_commit_seqno\G'

*************************** 1. row ***************************

task_id: 0

seqno: 0

fragno: 0

last_frag: 1

source_id: tungsten1

epoch_number: 0

eventid: 000002:0000000000000416;102

applied_latency: 0

What is this "tungsten_logos' database? It is the database that Tungsten creates for each service that was installed. In this case, 'logos' is the service name contained in this sample installation. If you modify the scripts in both servers, and replace 'logos' with 'ripe_mango', you will see that Tungsten creates a 'tungsten_ripe_mango' database, with the same kind of information.

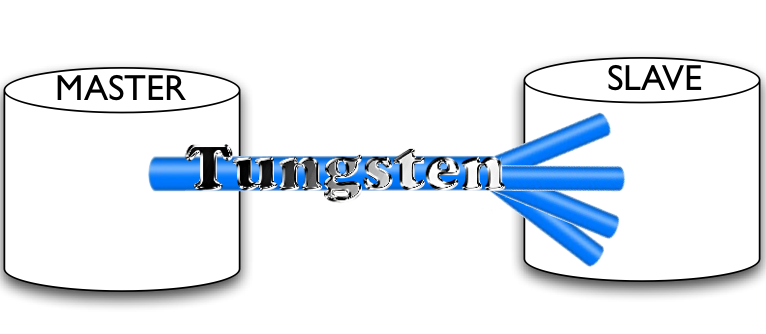

The basic principle to acquire before moving to more complex topics is that replication in Tungsten is a collection of

services. While the native MySQL replication is a simple pipeline from master to slave, without deviations, Tungsten implements several pipelines, which you can use one by one or in combination. It looks more complex than necessary, but in reality it makes your planning of complex topologies much easier. Instead of making basic replication more complex, Tungsten adopt the principle of deploying the appropriate pipeline or pipelines for the task.

I leave to

Robert Hodges, CEO and main architect of Tungsten, the task of explaining the nuts and bolts.

A sample of Tungsten power: switching from master to slave

It is probably too much information already for a blog post, but I would like to leave you with the feeling that you are dealing with an extremely powerful tool.

The instructions below will perform a seamless switch between the master and the slave.

Please follow these steps, but make sure there is no traffic hitting the old master during this time, or you may experience consistency issues:

#first, we tell both servers to stop replicating

$ trepctl -service logos -host tungsten2 offline

$ trepctl -service logos -host tungsten1 offline

# Now that they are offline, we tell each server its new role

# tungsten2 becomes the new master

$ trepctl -service logos -host tungsten2 setrole -role master

# and then we tell tungsten1 that it's going to be a slave,

# listening to tungsten2 for data

$ trepctl -service logos -host tungsten1 setrole -role slave -uri thl://tungsten2

# now we put both servers online with the new instructions

$ trepctl -service logos -host tungsten2 online

$ trepctl -service logos -host tungsten1 online

# and we check that indeed they are both online with the new roles.

$ trepctl -host tungsten1 services

$ trepctl -host tungsten2 services

After this set of instructions, tungsten2 is the master, and if we write to it, we will see the changes replicating to tungsten1.

That's it for today. In the next articles, we will take a look at parallel replication.

We want to hear from you

We have released Tungsten Replicator as open source because we believe this will improve the quality of our product. We are looking for bug reports, cooperation, suggestions, patches, and anything that can make the product better. You can report bugs at

the project home.

We are particularly eager to hear about user experience. We are aware that the user interface can be better, and we need some input on this matter from interested users.

A note about security

What is described in this article is for testing purposes only. Please use the virtual machines that were mentioned in this article behind a firewall. The VM was designed with friendliness in mind, but as it is, it's far from secure.