| I hope you liked the first part of this series of lessons. And I really hope that you have followed the instructions and got your little replication cluster up and working. |

For the sake of the diligent readers who have followed the instructions with the first lessons, I won't repeat them, but I'll invite you to set the environment as explained in the first part.

Once you have a cluster up and running, and you can confirm that replication is indeed working with Tungsten, you can remove all with the

clear_cluster.sh script, and launch again the set_installation.sh script, with the tiny detail we have mentioned above.The astute readers may have noticed that the installation script contains these lines:

...

MORE_OPTIONS=$1

./configure-service --create --role=master $MORE_OPTIONS logos1

./tungsten-replicator/bin/trepctl -service logos1 start

./configure-service --create --role=slave --master-host=QA2 \

--service-type=remote $MORE_OPTIONS logos2

...

This means that you can start set_replication.sh with one additional option, which will be passed to the creation of the Tungsten service. Without further suspense, the addition that you need is --channels=5.Yep. It was that easy.

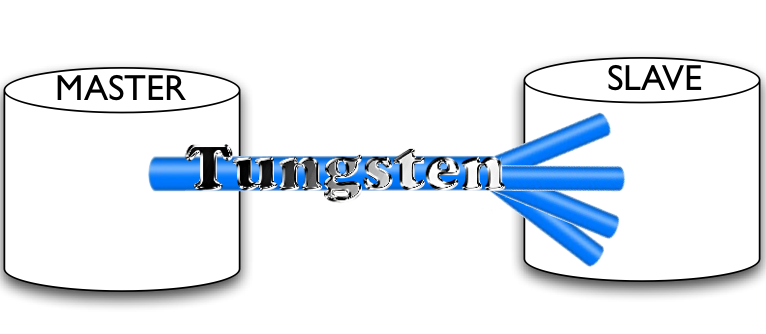

./set_replication.sh --channels=5This little addition will start your Tungsten replicator, apparently in the same way it did before. But there is a substantial difference. While the data is funneled from the master to the slaves in the usual way, the applier splits the data by database. You can see the difference as soon as you send some data through the pipeline.

#master mysql -h tungsten1 -e 'create schema mydb1' mysql -h tungsten1 -e 'create schema mydb2' mysql -h tungsten1 -e 'create schema mydb3' mysql -h tungsten1 -e 'create table mydb1.t1 (i int)' mysql -h tungsten1 -e 'create table mydb2.t1 (i int)' mysql -h tungsten1 -e 'create table mydb3.t1 (i int)' mysql -h tungsten1 -e 'select seqno,source_id,eventid from tungsten_logos.trep_commit_seqno' +-------+-----------+----------------------------+ | seqno | source_id | eventid | +-------+-----------+----------------------------+ | 6 | tungsten1 | 000002:0000000000000939;43 | +-------+-----------+----------------------------+Everything under control. The master has sent 6 events through the pipeline. Now, let's see what the slave has to say:

# slave mysql -h tungsten2 -e 'select seqno,source_id,eventid from tungsten_logos.trep_commit_seqno' +-------+-----------+----------------------------+ | seqno | source_id | eventid | +-------+-----------+----------------------------+ | 0 | tungsten1 | 000002:0000000000000426;34 | | 0 | tungsten1 | 000002:0000000000000426;34 | | 4 | tungsten1 | 000002:0000000000000763;41 | | 5 | tungsten1 | 000002:0000000000000851;42 | | 6 | tungsten1 | 000002:0000000000000939;43 | +-------+-----------+----------------------------+Notice, at first sight, that there are five rows instead of one. Each row is a channel. Since the master has used three databases, you see three channels occupied, each one showing the latest sequence that was applied. Now, if we do something to database mydb2, we should see one of these channels change, while the others stay still.

# master mysql -h tungsten1 -e 'insert into mydb2.t1 values (1)' mysql -h tungsten1 -e 'insert into mydb2.t1 values (2)' # slave mysql -h tungsten2 -e 'select seqno,source_id,eventid from tungsten_logos.trep_commit_seqno' +-------+-----------+----------------------------+ | seqno | source_id | eventid | +-------+-----------+----------------------------+ | 0 | tungsten1 | 000002:0000000000000426;34 | | 0 | tungsten1 | 000002:0000000000000426;34 | | 4 | tungsten1 | 000002:0000000000000763;41 | | 8 | tungsten1 | 000002:0000000000001124;45 | | 6 | tungsten1 | 000002:0000000000000939;43 | +-------+-----------+----------------------------+The channel used by mydb2 had previously applied the sequence number 5. The latest sequence number was previously 6, used in another channel. After two more events in this database, the sequence number has jumped to 8.

The eventID has also changed. The first part of the eventID is the binary log number (as in mysql-bin.000002), the second is the log position (1124), and the third one is the session ID (45).

Enough of peeking over the replicator's shoulder. There are more tools that let you inspect the status of the operations.

We have seen

trepctl services, which keeps some of its usefulness also with parallel replication. In the master, it says:trepctl -host tungsten1 services NAME VALUE ---- ----- appliedLastSeqno: 8 appliedLatency : 0.834 role : master serviceName : logos serviceType : local started : true state : ONLINEWhich is mostly all we need to know.

Since the slave has more than one channel, though, we need more specialized information on that side of the applier. For this reason, we use a more specialized view. We may start with

trepctl status, which has information that is roughly equivalent to "SHOW SLAVE STATUS" in MySQL native replication.trepctl -host tungsten2 status NAME VALUE ---- ----- appliedLastEventId : 000002:0000000000000426;34 appliedLastSeqno : 0 appliedLatency : 0.846 clusterName : currentEventId : NONE currentTimeMillis : 1298626724016 dataServerHost : tungsten2 extensions : host : null latestEpochNumber : 0 masterConnectUri : thl://tungsten1:2112/ masterListenUri : thl://tungsten2:2112/ maximumStoredSeqNo : 8 minimumStoredSeqNo : 0 offlineRequests : NONE pendingError : NONE pendingErrorCode : NONE pendingErrorEventId : NONE pendingErrorSeqno : -1 pendingExceptionMessage: NONE resourcePrecedence : 99 rmiPort : -1 role : slave seqnoType : java.lang.Long serviceName : logos serviceType : local simpleServiceName : logos siteName : default sourceId : tungsten2 state : ONLINE timeInStateSeconds : 3483.836 uptimeSeconds : 3489.47Also this command, which is perfectly useful in single channel replication, lacks the kind of detail that we are after. Tungsten 2.0 introduces two variations of this command, with more detailed metadata.

trepctl -host tungsten2 status -name tasks Processing status command (tasks)... NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000001305;46 appliedLastSeqno : 8 appliedLatency : 0.84 cancelled : false eventCount : 9 stage : remote-to-thl taskId : 0 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000001305;46 appliedLastSeqno : 8 appliedLatency : 0.841 cancelled : false eventCount : 9 stage : thl-to-q taskId : 0 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000426;34 appliedLastSeqno : 0 appliedLatency : 8.422 cancelled : false eventCount : 2 stage : q-to-dbms taskId : 0 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000426;34 appliedLastSeqno : 0 appliedLatency : 8.424 cancelled : false eventCount : 1 stage : q-to-dbms taskId : 1 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000763;41 appliedLastSeqno : 4 appliedLatency : 0.242 cancelled : false eventCount : 3 stage : q-to-dbms taskId : 2 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000001305;46 appliedLastSeqno : 8 appliedLatency : 0.846 cancelled : false eventCount : 5 stage : q-to-dbms taskId : 3 NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000939;43 appliedLastSeqno : 6 appliedLatency : 0.296 cancelled : false eventCount : 3 stage : q-to-dbms taskId : 4The

-name tasks command gives you a list of the latest tasks that were happening.This is probably more information that you want to know about, but in case of troubleshooting it may become a blessing. Let's follow for a moment what's going on to appliedLastSeqno 8. You will find three tasks with this sequance number. The first one has stage "remote-to-thl", which is the stage where the transaction is transported from the master to the Transaction History List (THL, which is Tungsten lingo to what you may also call a relay log.). The second task that mentions appliedLastSeqno 8 is in stage "thl-to-q", which is the phase where a transaction is assigned to a given shard. The third occurrence happens in stage "q-to-dbms", which is where the transaction is executed in the slave.

For a different view of what is going on, you may use

trepctl status -name shards. A Shard, in this context, is the criteria used to split the transactions across channels. By default, it happens by database. We will inspect its mechanics more closely in another post. For now, let's have a look at what shards we have in our slave:trepctl -host tungsten2 status -name shards NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000763;41 appliedLastSeqno : 4 appliedLatency : 0.0 eventCount : 2 shardId : mydb1 stage : q-to-dbms NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000001305;46 appliedLastSeqno : 8 appliedLatency : 0.0 eventCount : 4 shardId : mydb2 stage : q-to-dbms NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000939;43 appliedLastSeqno : 6 appliedLatency : 0.0 eventCount : 2 shardId : mydb3 stage : q-to-dbms NAME VALUE ---- ----- appliedLastEventId: 000002:0000000000000426;34 appliedLastSeqno : 0 appliedLatency : 0.0 eventCount : 6 shardId : tungsten_logos stage : q-to-dbmsYou may read the information quite easily. Each shard tells you by which key it was identified (

shardID), and this is the same as the database name. The appliedLastSeqno and stage we have met already. The appliedLatency is roughly equivalent to MySQL's seconds behind master, but more granular than that. And eventCount tells you how many transactions went through this shard.If you are the adventurous type, you may have a look at the THL itself, and get a glimpse of how the replication and the parallelism works.

In the slave, type the following

# slave thl -service logos list |lessThen look for "SEQ#" and you will find the global transaction IDs, or look for "shard=", and you will see the split by database.

More goodies will come next week. Until then, happy hacking!

2 comments:

Is slave replication state crash proof? I am in the middle of fixing an obscure bug in rpl_transaction_enabled from the Google/Facebook patches. I do not enjoy maintaining that code.

In general yes. We commit current locations in the trep_commit_seqno table as well as persistently in the disk storage. However, there's no two-phase commits--it's more like sliding window messaging protocol. When there's a recovery we propagate recovery points backwards through the messaging flow then re-extract transactions where in doubt. Appliers are taught to discard things they have already seen.

The particular "no" would be if you had a crash on the master *and* slave disk failure simultaneously. In this case some transaction loss might occur. However, that's thankfully very rare.

Post a Comment