Intro

Tungsten Replicator is an open source tool that does high performance replication across database servers. It was designed to replace MySQL replication, although it also supports replication from and to Oracle and other systems. In this article, we will only cover MySQL replication, both simple and multi-master.

Preparing for installation

To follow the material in this article, you will need a recent build of Tungsten Replicator. You can get the latest ones from http://bit.ly/tr20_builds. In this article, we are using build 2.0.8-167.

Before starting any installation, you should make sure that you have satisfied all the prerequisites. Don't underestimate the list. Any missing items will likely result in installation errors.

If you are using Amazon EC2 servers, this page provides a script that makes the prerequisites an almost fully automated procedure.

To install any of the topologies supported by Tungsten, you need first to extract the software, define your nodes, and eventually change your default options.

- Download the software from http://bit.ly/tr20_builds

- Expand the tarball (

tar -xzf tungsten-replicator-2.0.8-136.tar.gz)

- Change directory to the extracted path (

cd tungsten-replicator-2.0.8-167)

- Define the VERBOSE user variable (it will show much details in the operations

- Edit the configuration files COMMON_NODES.sh and USER_VALUES.sh (The fields in RED are the ones that you should probably change.)

$ tar -xzf tungsten-replicator-2.0.8-167.tar.gz

$ cd tungsten-replicator-2.0.8-167

$ export VERBOSE=1

$ export PATH=$PWD/cookbook:$PATH

$ cat cookbook/COMMON_NODES.sh

#!/bin/bash

# (C) Copyright 2012,2013 Continuent, Inc - Released under the New BSD License

# Version 1.0.5 - 2013-04-03

export NODE1=host1

export NODE2=host2

export NODE3=host3

export NODE4=host4

$ cat cookbook/USER_VALUES.sh

#!/bin/bash

# (C) Copyright 2012,2013 Continuent, Inc - Released under the New BSD License

# Version 1.0.5 - 2013-04-03

# User defined values for the cluster to be installed.

# Where to install Tungsten Replicator

export TUNGSTEN_BASE=$HOME/installs/cookbook

# Directory containing the database binary logs

export BINLOG_DIRECTORY=/var/lib/mysql

# Path to the options file

export MY_CNF=/etc/my.cnf

# Database credentials

export DATABASE_USER=tungsten

export DATABASE_PASSWORD=secret

export DATABASE_PORT=3306

# Name of the service to install

export TUNGSTEN_SERVICE=cookbook

Pay attention to the paths. TUNGSTEN_BASE is where the binaries will be installed. You must make sure that:

- The path is writable by the current user, in all nodes;

- There is nothing in that path that may clash with the software to be installed. It should be a dedicated directory. Do NOT use your $HOME for this purpose. Use a subdirectory or a path under /usr/local or /opt/

- The path must have enough storage to hold Tungsten Transaction History Logs (THL). They will occupy roughly twice as much as your binary logs.

Validating your nodes

You may think that you have followed the instructions for the prerequisites, but sometimes humans make mistakes. To make sure that your cluster can run Tungsten, you can run the script validate_cluster. This is an operation that performs all the installation checks in all nodes, without actually installing anything.

$ cookbook/validate_cluster

# Performing validation check ...

## 1 (host: host4)

./tools/tungsten-installer \

--master-slave \

--master-host=host1 \

--datasource-user=tungsten \

--datasource-password=secret \

--datasource-port=3306 \

--service-name=cookbook \

--home-directory=/home/tungsten/installs/cookbook \

--cluster-hosts=host1,host2,host3,host4 \

--datasource-mysql-conf=/etc/my.cnf \

--datasource-log-directory=/var/lib/mysql \

--rmi-port=10000 \

--thl-port=2112 \

--validate-only -a \

--info \

--start

INFO >> Start: Check that the master-host is part of the config

INFO >> Finish: Check that the master-host is part of the config

#####################################################################

# Tungsten Community Configuration Procedure

#####################################################################

NOTE: To terminate configuration press ^C followed by ENTER

...

...

...

( LOTS OF LINES FOLLOW)

If there is any error in your prerequisites, you will get an error, or possibly more than one. If the messages provided by this command are not enough to understand what it is going on, you can ask for yet more detail, using:

$ VERBOSE=2 ./cookbook/validate_cluster

DRY-RUN installation

Should you need to install in a set of nodes where you can't allow ssh connection across nodes, you may use the DRYRUN variable.

$ export DRYRUN=1

When this variable is set, the installation commands will not install, but only show you all the commands that you should run, with the right sequence.

For example, if you want to validate the cluster without SSH communication between nodes, a DRYRUN command will tell you the list of instructions to run and in which hosts to run them

$ DRYRUN=1 ./cookbook/validate_cluster

# Performing validation check ...

...

Using the instructions so received, you can copy the software to each node, and run the appropriate command in each one.

The same goes for every installation command. Should you need to install a star topology node by node with custom options, just run:

$ DRYRUN=1 ./cookbook/install_star

When you don't need the DRYRUN command anymore, remove the variable:

$ unset DRYRUN

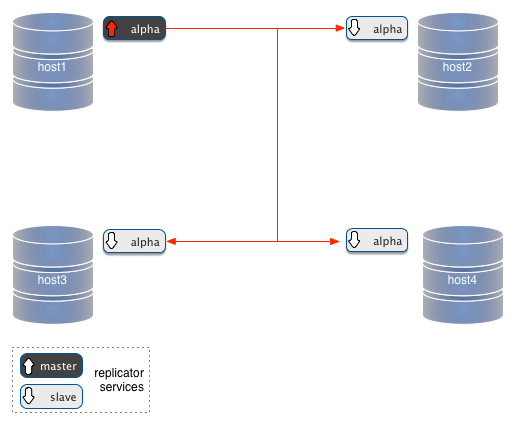

Installing a master-slave topology

After the validation, you can launch your installation. If the topology is master/slave, the defaults are stored in cookbook/NODES_MASTER_SLAVE.sh

$ cat cookbook/NODES_MASTER_SLAVE.sh

#!/bin/bash

# (C) Copyright 2012,2013 Continuent, Inc - Released under the New BSD License

# Version 1.0.5 - 2013-04-03

CURDIR=`dirname $0`

if [ -f $CURDIR/COMMON_NODES.sh ]

then

. $CURDIR/COMMON_NODES.sh

else

export NODE1=

export NODE2=

export NODE3=

export NODE4=

fi

export ALL_NODES=($NODE1 $NODE2 $NODE3 $NODE4)

# indicate which servers will be masters, and which ones will have a slave service

# in case of all-masters topologies, these two arrays will be the same as $ALL_NODES

# These values are used for automated testing

#for master/slave replication

export MASTERS=($NODE1)

export SLAVES=($NODE2 $NODE3 $NODE4)

The only variables that should concern you here are MASTERS and SLAVES. They refer to the nodes defined in COMMON_NODES.sh. If your master is NODE1, there is no need to change anything. If your master is, say, NODE2, then change the variables as:

export MASTERS=($NODE2)

export SLAVES=($NODE1 $NODE3 $NODE4)

Make sure that you have adjusted both master and slave definitions.

$ cookbook/install_master_slave

## 1 (host: host4)

./tools/tungsten-installer \

--master-slave \

--master-host=host1 \

--datasource-user=tungsten \

--datasource-password=secret \

--datasource-port=3306 \

--service-name=cookbook \

--home-directory=/home/tungsten/installs/cookbook \

--cluster-hosts=host1,host2,host3,host4 \

--datasource-mysql-conf=/etc/my.cnf \

--datasource-log-directory=/var/lib/mysql \

--rmi-port=10000 \

--thl-port=2112 \

--start

... # A few minutes later ...

--------------------------------------------------------------------------------------

Topology: 'MASTER_SLAVE'

--------------------------------------------------------------------------------------

# node host1

cookbook [master] seqno: 1 - latency: 0.631 - ONLINE

# node host2

cookbook [slave] seqno: 1 - latency: 0.607 - ONLINE

# node host3

cookbook [slave] seqno: 1 - latency: 0.746 - ONLINE

# node host4

cookbook [slave] seqno: 1 - latency: 0.640 - ONLINE

Deployment completed

Topology :'master_slave'

Tungsten path : /home/tungsten/installs/cookbook

Nodes : (host1 host2 host3 host4)

After installing all the nodes, the cookbook script displays the cluster status. In this list, 'cookbook' is the name of the replication service, as defined in USER_VALUES.sh. You can change it before installing. Any name will do. Next to it you see the role (master or slave), then the 'seqno', which is the global transaction ID of your database events. Finally, the 'latency' is the difference, in seconds, between the time your transaction was recorded in the master binary logs and the time it was applied to the slave.

You can ask for such a status at any time, by calling:

$ cookbook/show_cluster

Simple replication administration

A cluster status doesn't tell you if replication is working. You may check if this is true by running:

$ cookbook/test_cluster

# --------------------------------------------------------------------------------------

# Testing cluster with installed topology 'MASTER_SLAVE'

# --------------------------------------------------------------------------------------

ok - Master host1 has at least 1 master services

# slave: host2

ok - Tables from master #1

ok - Views from master #1

ok - Records from master #1

ok - Slave host2 has at least 1 services

# slave: host3

ok - Tables from master #1

ok - Views from master #1

ok - Records from master #1

ok - Slave host3 has at least 1 services

# slave: host4

ok - Tables from master #1

ok - Views from master #1

ok - Records from master #1

ok - Slave host4 has at least 1 services

1..13

This command creates a table and a view in each master in your topology (in this case, a master/slave topology has only one master), insert a record using the view, and then check that each slave has replicated what was inserted. The output changes quite a lot when using a multi-master topology.

Astute readers will recognize that the output produced here complies with the Test Anything Protocol (TAP). If you have the 'prove' tool installed in your server, you may try it:

$ prove cookbook/test_cluster

cookbook/test_cluster...ok

All tests successful.

Files=1, Tests=13, 4 wallclock secs ( 3.17 cusr + 0.26 csys = 3.43 CPU)

Replication tools

The cluster status shown above (cookbook/show_cluster) uses the output of the Tungsten built-in tool trepctl to display a simplified status.

The tool is available inside the installation directory. If you have used the defaults, it is $HOME/installs/cookbook. ANd the tools are in $HOME/installs/cookbook/tungsten/tungsten-replicator/bin/.

This is not easy to remember, and even if you can remember it correctly, it requires a lot of typing. The cookbook provides an easy shortcut: cookbook/trepctl. For example:

$ cookbook/trepctl services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 17

appliedLatency : 0.773

role : slave

serviceName : cookbook

serviceType : local

started : true

state : ONLINE

Finished services command...

Or, if you want the simplified output:

$ cookbook/trepctl services | cookbook/simple_services

cookbook [slave] seqno: 17 - latency: 0.773 - ONLINE

To administer the system properly, you need to know the tools, some paths to the logs and the configuration files, which are somehow elusive. Again, the cookbook to the rescue:

$ cookbook/paths

replicator : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/replicator

trepctl : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/trepctl

thl : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/thl

log : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/log/trepsvc.log

conf : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/conf/

thl-dir : (service: cookbook) /home/tungsten/installs/cookbook/thl/cookbook

backup-dir : (service: cookbook) /home/tungsten/installs/cookbook/backups/cookbook

backup-agent : (service: cookbook) mysqldump

This command tells you the path to the three main tools:

- trepctl Tungsten Replicator Control

- thl or the Transaction History Log manager

- replicator, which is the launcher for the replicator daemon.

You also get the path to the most common places you may need to access during your administrative tasks.

Similarly, there are shortcuts to perform common tasks:

- cookbook/replicator: Shortcut to the 'replicator' command

- cookbook/trepctl: Shortcut to the 'trepctl' command

- cookbook/thl: Shortcut to the 'thl' command

- cookbook/conf: Shows the configuration files using 'less'

- cookbook/show_conf: Same as 'conf'

- cookbook/edit_conf: Edits the configuration files using 'vim'

- cookbook/vimconf: Same as 'vimconf.sh'

- cookbook/emacsconf: Edits the configuration files using 'emacs'

- cookbook/log: Shows the replicator log using 'less'

- cookbook/show_log: Same as 'log'

- cookbook/vilog: Edits the replicator log using 'vi'

- cookbook/vimlog: Edits the replicator log using 'vim'

- cookbook/emacslog: Edits the replicator log using 'emacs'

- cookbook/heartbeat: Performs a heartbeat in each master

- cookbook/paths: Shows the path to all important tools and services

- cookbook/services: Performs 'trepctl services'

- cookbook/backups: Shows which backups were taken in all nodes

You can get all the above commands, and all the others included in the cookbook, by calling:

$ cookbook/help

Uninstalling Tungsen

The cookbook makes it easy to install a replication cluster, and makes it easy to remove it as well.

If you look at the end of cookbook/USER_VALUES.sh, you will see these variables:

$ tail -n 12 cookbook/USER_VALUES.sh

##############################################################################

# Variables used when removing the cluster

# Each variable defines an action during the cleanup

##############################################################################

[ -z "$STOP_REPLICATORS" ] && export STOP_REPLICATORS=1

[ -z "$REMOVE_TUNGSTEN_BASE" ] && export REMOVE_TUNGSTEN_BASE=1

[ -z "$REMOVE_SERVICE_SCHEMA" ] && export REMOVE_SERVICE_SCHEMA=1

[ -z "$REMOVE_TEST_SCHEMAS" ] && export REMOVE_TEST_SCHEMAS=1

[ -z "$REMOVE_DATABASE_CONTENTS" ] && export REMOVE_DATABASE_CONTENTS=0

[ -z "$CLEAN_NODE_DATABASE_SERVER" ] && export CLEAN_NODE_DATABASE_SERVER=1

##############################################################################

The names are self-explanatory. These variables are used when you call the clear_cluster command. Then, the meaning becomes even more clear:

$ cookbook/clear_cluster

--------------------------------------------------------------------------------------

Clearing up cluster with installed topology 'MASTER_SLAVE'

--------------------------------------------------------------------------------------

!!! WARNING !!!

--------------------------------------------------------------------------------------

'clear-cluster' is a potentially damaging operation.

This command will do all the following:

* Stop the replication software in all servers. [$STOP_REPLICATORS]

* REMOVE ALL THE CONTENTS from /home/tungsten/installs/cookbook/.[$REMOVE_TUNGSTEN_BASE]

* REMOVE the tungsten_<service_name> schemas in all nodes (host1 host2 host3 host4) [$REMOVE_SERVICE_SCHEMA]

* REMOVE the schemas created for testing (test, evaluator) in all nodes (host1 host2 host3 host4) [$REMOVE_TEST_SCHEMAS]

* Create the test server anew; [$CLEAN_NODE_DATABASE_SERVER]

* Unset the read_only variable; [$CLEAN_NODE_DATABASE_SERVER]

* Set the binlog format to MIXED; [$CLEAN_NODE_DATABASE_SERVER]

* Reset the master (removes all binary logs); [$CLEAN_NODE_DATABASE_SERVER]

If this is what you want, either set the variable I_WANT_TO_UNINSTALL

or answer 'y' to the question below

You may also set the variables in brackets to fine tune the execution.

Alternatively, have a look at cookbook/clear_cluster and customize it to your needs.

--------------------------------------------------------------------------------------

Do you wish to uninstall this cluster? [y/n]

As you can see, for each action there is a corresponding variable. By default, all variables are active, except 'REMOVE_DATABASE_CONTENTS'. Setting or unsetting these variables will determine how much of your installation you want to undo.

Getting replication status

Once you have replication up and running, you need to know what's going on at a glance. We have seen in the previous sections that we can call trepctl services to get an overview of the replication process. Using the same tool, we can also get more detailed information

$ cookbook/trepctl status | nl

1 Processing status command...

2 NAME VALUE

3 ---- -----

4 appliedLastEventId : mysql-bin.000006:0000000000003163;0

5 appliedLastSeqno : 17

6 appliedLatency : 0.773

7 channels : 1

8 clusterName : default

9 currentEventId : NONE

10 currentTimeMillis : 1365193975129

11 dataServerHost : host4

12 extensions :

13 latestEpochNumber : 0

14 masterConnectUri : thl://host1:2112/

15 masterListenUri : thl://host4:2112/

16 maximumStoredSeqNo : 17

17 minimumStoredSeqNo : 0

18 offlineRequests : NONE

19 pendingError : NONE

20 pendingErrorCode : NONE

21 pendingErrorEventId : NONE

22 pendingErrorSeqno : -1

23 pendingExceptionMessage: NONE

24 pipelineSource : thl://host1:2112/

25 relativeLatency : 22729.129

26 resourcePrecedence : 99

27 rmiPort : 10000

28 role : slave

29 seqnoType : java.lang.Long

30 serviceName : cookbook

31 serviceType : local

32 simpleServiceName : cookbook

33 siteName : default

34 sourceId : host4

35 state : ONLINE

36 timeInStateSeconds : 23640.125

37 uptimeSeconds : 23640.723

38 version : Tungsten Replicator 2.0.8 build 136

39 Finished status command...

With the line number as a reference, we can describe quite a bit of useful information:

- appliedLastEventId: (4) This is the event as found in the source database master. Since we are replicating from a MySQL server (don't forget that Tungsten can replicate from and to several heterogeneous servers) this ID is made of the binary log file name (mysql-bin.000006) and the binary log position (0000000000003163). Most of the time, you don't really need this information, as everything in Tungsten uses the Global Transaction ID (see next item)

- appliedLastSeqno: (5) This is the Global Transaction Identifier for the current transaction.

- appliedLatency: (6) This is the time difference, in seconds, between the moment when the transaction was written to the binary log in the master and the moment when it was applied in the slave. Notice that, if the server system times are not synchronized, you may have greater differences than expected. Also, if you keep a slave offline and re-connect it later, this value will increase accordingly.

- channels (7) is the number of threads used for replication. By default it is 1. When using parallel replication, it increases.

- dataServerHost: (11) The server for which we are showing the status.

- masterConnectUri (14) is the address (hostname or IP + port ) of the current master for this service.

- masterListenUri (15) is the address that will be used by the current server if it becomes a master.

- pendingErrorSeqno: (22) When any of the error* lines (19 to 21) are used, this line shows the seqno (Global Transaction ID) of the event that is causing trouble. This piece of information is vital to find what is holding the system. (We will see an example later in this article)

- role: (28) What is the role of this service. It could be 'master' or 'slave'. More roles are possible if the replicator is embedded in a more complex system.

- serviceName: (30) The identification of the replication service. Not much important when using a master/slave topology, but vital when deploying multi-master services.

- state: (35) It's what the replicator is doing. If "ONLINE," all is well. "OFFLINE:NORMAL" means that the service was stopped manually, while "OFFLINE:ERROR" means that something is wrong. If you see "GOING-ONLINE:SYNCHRONIZING," it means that either there is a connection issue between master and slave, or the slave is showing this state if the master is offline.

This command is the first step whenever you are troubleshooting a problem. If something goes wrong, chances are that 'cookbook/trepctl status' will tell you what it is going on. Notice, though, that if you are using a multi-master topology, then you will need to specify a service:

$ cookbook/trepctl -service somename status

It's quite important to understand that trepctl can give you the status of any node in the replication cluster. You don't need to execute the command in another node. All you need to do is indicate to trepctl for which host it should display the status.

$ cookbook/trepctl -host host1 -service somename status

'trepctl' has quite a lot of options, as you may discover if you run 'trepctl help'. We will see some of them in this series of articles.

Logs

The second step of troubleshooting, when 'trepctl status' was not enough to nail the problem, is looking at the logs.

Here, the problem you will face is "where the heck do I find the logs?"

As we have seen above in this article, the cookbook can show you the paths:

$ cookbook/paths

replicator : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/replicator

trepctl : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/trepctl

thl : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/bin/thl

log : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/log/trepsvc.log

conf : /home/tungsten/installs/cookbook/tungsten/tungsten-replicator/conf/

thl-dir : (service: cookbook) /home/tungsten/installs/cookbook/thl/cookbook

backup-dir : (service: cookbook) /home/tungsten/installs/cookbook/backups/cookbook

backup-agent : (service: cookbook) mysqldump

However, there is a simpler way. You can use one of the shortcuts to access the logs. For example, cookbook/log will show the log using 'less,' the well known file viewer. Should you want to use another tool for this task, there is a wide choice:

- cookbook/show_log: Same as 'log'

- cookbook/vilog: Edits the replicator log using 'vi'

- cookbook/vimlog: Edits the replicator log using 'vim'

- cookbook/emacslog: Edits the replicator log using 'emacs'

Inside the log, when you are troubleshooting, you should first try to find the same message displayed by 'trepctl status.' Around that point, you will find one or more Java stack traces, which contain information useful for the developers (file names and line numbers) and information useful for you (error messages as reported by the database server or the operating system or third party tools, which may help identifying the problem).

Reading events

Most often, when a problem has been identified, you need to know which is the event that is causing the problem. Usually, a look at the SQL, combined with the error message, may give you enough information to fix the problem.

The replication events (or transaction) are stored in several Transaction History Log (THL) files. These files contain the events, as taken from the binary logs, plus some metadata. Unlike the binary logs, though, the THL file names are totally unimportant. Since transactions are identified by number, you don't need to know their location.

To display a THL event, you use a tool named, most aptly, 'thl.' For example, after we run this query:

mysql --host=host1 test -e "insert into v1 values (2,'inserted by node #1')"

We can check the status with

$ cookbook/trepctl services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 24

appliedLatency : 0.563

role : slave

serviceName : cookbook

serviceType : local

started : true

state : ONLINE

Finished services command...

And then retrieve the event using the thl.

$ cookbook/thl list -seqno 24

SEQ# = 24 / FRAG# = 0 (last frag)

- TIME = 2013-04-05 23:32:18.0

- EPOCH# = 18

- EVENTID = mysql-bin.000006:0000000000004417;0

- SOURCEID = host1

- METADATA = [mysql_server_id=10;dbms_type=mysql;service=cookbook;shard=test]

- TYPE = com.continuent.tungsten.replicator.event.ReplDBMSEvent

- OPTIONS = [##charset = ISO8859_1, autocommit = 1, sql_auto_is_null = 1, foreign_key_checks = 1, unique_checks = 1, sql_mode = '', character_set_client = 8, collation_connection = 8, collation_server = 8]

- SCHEMA = test

- SQL(0) = insert into v1 values (2,'inserted by node #1') /* ___SERVICE___ = [cookbook] */

There is much metadata in this event, most of which is easily recognizable by any seasoned DBA. Some things that may be worth pointing out are:

- SEQ# The sequence number, or seqno, or Global Transaction ID,

- EVENTID: We have seen this when we described 'trepctl status';

- SOURCEID: the server where the event was generated;

- service: The service where the event was generated. This also tells us that the master role for this service is in host1.

- shard: it is how Tungsten defines shards for parallel replication and conflict resolution. By default, a shard matches a database schema, although it can be defined otherwise.

- SQL : this is the statement being executed. When the transaction contains more than one statement, then you will see SQL(1), SQL(2), and so on. If the event was row-based, then you will see a list of column and their contents instead of a SQL statement.

- ___SERVICE___ = [cookbook] This comment is added by the replicator to make it recognizable even after it goes to the binary log and gets replicated to a further level. This is not the only method used to mark events. The service identification can go in other places, such as the "comment" field of a "CREATE TABLE" statement.

Skipping transactions

One of the most common replication problems is a duplicate key violation, which in turn often occurs when someone erroneously writes to a slave instead of a master. When such error happens, you may find something like this:

$ cookbook/trepctl status

Processing status command...

NAME VALUE

---- -----

appliedLastEventId : NONE

appliedLastSeqno : -1

appliedLatency : -1.0

channels : -1

clusterName : default

currentEventId : NONE

currentTimeMillis : 1365199283287

dataServerHost : host4

extensions :

latestEpochNumber : -1

masterConnectUri : thl://host1:2112/

masterListenUri : thl://host4:2112/

maximumStoredSeqNo : -1

minimumStoredSeqNo : -1

offlineRequests : NONE

pendingError : Event application failed: seqno=25 fragno=0 message=java.sql.SQLException:

Statement failed on slave but succeeded on master

pendingErrorCode : NONE

pendingErrorEventId : mysql-bin.000006:0000000000004622;0

pendingErrorSeqno : 25

pendingExceptionMessage: java.sql.SQLException: Statement failed on slave but succeeded on master

insert into v1 values (3,'inserted by node #1') /* ___SERVICE___ = [cookbook] */

pipelineSource : UNKNOWN

relativeLatency : -1.0

resourcePrecedence : 99

rmiPort : 10000

role : slave

seqnoType : java.lang.Long

serviceName : cookbook

serviceType : unknown

simpleServiceName : cookbook

siteName : default

sourceId : host4

state : OFFLINE:ERROR

timeInStateSeconds : 8.749

uptimeSeconds : 28948.881

version : Tungsten Replicator 2.0.8 build 136Looking at the logs, we may see something like this:

INFO | jvm 1 | 2013/04/06 00:01:14 | 2013-04-06 00:01:14,529 [cookbook - q-to-dbms-0] ERROR pipeline.SingleThreadStageTask

Event application failed: seqno=25 fragno=0 message=java.sql.SQLException: Statement failed on slave but succeeded on master

INFO | jvm 1 | 2013/04/06 00:01:14 | com.continuent.tungsten.replicator.applier.ApplierException: java.sql.SQLException: Statement failed on slave but succeeded on master

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.applier.MySQLDrizzleApplier.applyStatementData(MySQLDrizzleApplier.java:183)

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.applier.JdbcApplier.apply(JdbcApplier.java:1321)

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.applier.ApplierWrapper.apply(ApplierWrapper.java:101)

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.pipeline.SingleThreadStageTask.apply(SingleThreadStageTask.java:639)

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.pipeline.SingleThreadStageTask.runTask(SingleThreadStageTask.java:468)

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.pipeline.SingleThreadStageTask.run(SingleThreadStageTask.java:167)

INFO | jvm 1 | 2013/04/06 00:01:14 | at java.lang.Thread.run(Unknown Source)

INFO | jvm 1 | 2013/04/06 00:01:14 | Caused by: java.sql.SQLException: Statement failed on slave but succeeded on master

INFO | jvm 1 | 2013/04/06 00:01:14 | at com.continuent.tungsten.replicator.applier.MySQLDrizzleApplier.applyStatementData(MySQLDrizzleApplier.java:140)

INFO | jvm 1 | 2013/04/06 00:01:14 | ... 6 more

INFO | jvm 1 | 2013/04/06 00:01:14 | Caused by: java.sql.SQLIntegrityConstraintViolationException: Duplicate entry '3' for key 'PRIMARY'

After inspecting the tables in all nodes, we find that the host4 already contains a record with Primary Key=3, and that it has the same contents of the record coming from host1. In this case, the easiest way of fixing the error is by telling the replicator to skip this event.

$ cookbook/trepctl online -skip-seqno 25

After this, the replicator goes online, and, provided that there are no other errors after the first one, will continue replicating.

Taking over existing Replication

In the first sections of this article, we saw how to install Tungsten replicator as the primary source of replication. We assumed that the servers had the same contents, and there was no replication already going on. Here we assume, instead, that there was replication already, and we show the steps to reproduce the process.

To simulate the initial status, we're going to clear the cluster installed before, install native MySQL replication instead, and take over from there.

There is a recipe to install standard replication, just for this purpose.

$ cookbook/install_standard_mysql_replication

Starting slave on host2 Master File = mysql-bin.000005, Master Position = 106

Starting slave on host3 Master File = mysql-bin.000005, Master Position = 106

Starting slave on host4 Master File = mysql-bin.000005, Master Position = 106

# master host1

mysql-bin.000005 554

#slave host2

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 554

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Exec_Master_Log_Pos: 554

replication test: ok

#slave host3

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 554

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Exec_Master_Log_Pos: 554

replication test: ok

#slave host4

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 554

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Exec_Master_Log_Pos: 554

replication test: ok

The installation also provides a simple test that checks if replication is running by creating a table named 't1' and retrieving it in the slaves. As you can see, after the test, the slaves are at position 554 of binary log # 000005. If we create another table, we check that it is replicated and take nota again of the binlog position.

$ mysql -h host1 -e 'create table test.test_standard(i int)'

$ for host in host1 host2 host3 host4; do mysql -h $host -e 'show tables from test' ; done

+----------------+

| Tables_in_test |

+----------------+

| t1 |

| test_standard |

+----------------+

+----------------+

| Tables_in_test |

+----------------+

| t1 |

| test_standard |

+----------------+

+----------------+

| Tables_in_test |

+----------------+

| t1 |

| test_standard |

+----------------+

+----------------+

| Tables_in_test |

+----------------+

| t1 |

| test_standard |

+----------------+

$ for host in host2 host3 host4; do mysql -h $host -e 'show slave status\G' | grep 'Master_Log_File\|Read_Master_Log_Pos\|Running' ; done

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 651

Relay_Master_Log_File: mysql-bin.000005

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 651

Relay_Master_Log_File: mysql-bin.000005

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Master_Log_File: mysql-bin.000005

Read_Master_Log_Pos: 651

Relay_Master_Log_File: mysql-bin.000005

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

So, replication is running. We can try our take-over script, which the cookbook provides:

$ cookbook/take-over

./tools/tungsten-installer

--master-slave

--master-host=host1

--datasource-user=tungsten

--datasource-password=secret

--datasource-port=3306

--service-name=cookbook

--home-directory=/home/tungsten/installs/cookbook

--cluster-hosts=host1,host2,host3,host4

--datasource-mysql-conf=/etc/my.cnf

--datasource-log-directory=/var/lib/mysql

--rmi-port=10000

--thl-port=2112 -a

--auto-enable=false

--start

$TUNGSTEN_BASE/tungsten/tungsten-replicator/bin/trepctl -port 10000 -host host1 online -from-event mysql-bin.000005:651

$TUNGSTEN_BASE/tungsten/tungsten-replicator/bin/trepctl -port 10000 -host host2 online

$TUNGSTEN_BASE/tungsten/tungsten-replicator/bin/trepctl -port 10000 -host host3 online

$TUNGSTEN_BASE/tungsten/tungsten-replicator/bin/trepctl -port 10000 -host host4 online

--------------------------------------------------------------------------------------

Topology: 'MASTER_SLAVE'

--------------------------------------------------------------------------------------

# node host1

cookbook [master] seqno: 5 - latency: 0.556 - ONLINE

# node host2

cookbook [slave] seqno: 5 - latency: 0.663 - ONLINE

# node host3

cookbook [slave] seqno: 5 - latency: 0.690 - ONLINE

# node host4

cookbook [slave] seqno: 5 - latency: 0.595 - ONLINE

What happens here?

The first notable thing is that we install the replicator with the option --auto-enable set to false. With this option, the replicator starts, but stays OFFLINE. After that, the take-over script stops the replication in all servers, retrieves the latest binlog position, and tells the replicator to go ONLINE using the event ID. This is one of the few cases where we can't use a global transaction ID, because it does not exist yet!

Next, all the slaves go online. There is no need to tell them at which event they should start, because they will simply get the events from the master.

Further info:

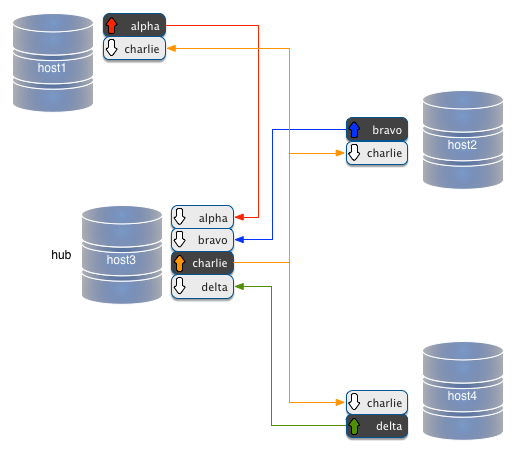

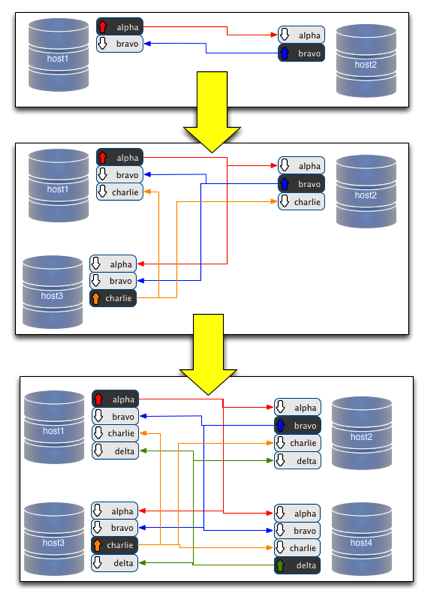

Figure 1 - Hierarchical replication depth of processing

Figure 1 - Hierarchical replication depth of processing