What is a conflict?

Readers of this blog know that one of my favorite tools, Tungsten Replicator, can easily create multi-master replication topologies, such as all-masters, star, fan-in. While this is good news for system designers and ambitious DBAs, it also brings some inconvenience. When you allow updates to happen in more than one master, you risk having conflicts. You may have heard this term before. For the sake of clarity, let's define what conflicts are, before analyzing each case in detail.

You have a conflict when several sources (masters) update concurrently the same data in asynchronous replication.

It's important to stress that this happens with asynchronous replication. In a truly synchronous cluster, where all data is kept consistent through 2-phase commit, there is no risk of conflicts.

The above definition is not always correct. You may update data from several sources and end up with something completely legitimate. A better definition should be: a conflict happens after an unexpected concurrent modification of existing data coming from a remote source.

For example:

- Both servers A and B insert a record with the same primary key;

- Master A updates record 10 with value 3 while master B updates record 10 with value 4;

- Master A deletes record 10 while master B updates record 10.

In each of these cases, there is data in one server, which could have been just inserted by a legitimate source, or could have been there for long time. Regardless of the record age, the conflict happen when the data clashes with the latest update. We usually think of conflicts as concurrent events from different sources, because this is the most frequent case of evident conflicts, i.e. conflicts that are detected (and hopefully fixed). But there are hidden conflict that happen (or are discovered) long time after the update.

When we consider the consequences of a conflict, we observe that a conflict creates one or more of the following:

- Duplicate data (unwanted insert)

- Data inconsistency (unwanted update)

- Data loss (unwanted delete)

- Replication break

Duplicate data

This is the most widely known conflict, because it's often caught immediately after the fact, allowing the DBA to take action. However, it's also one of the less understood cases, as we will see in the next section.

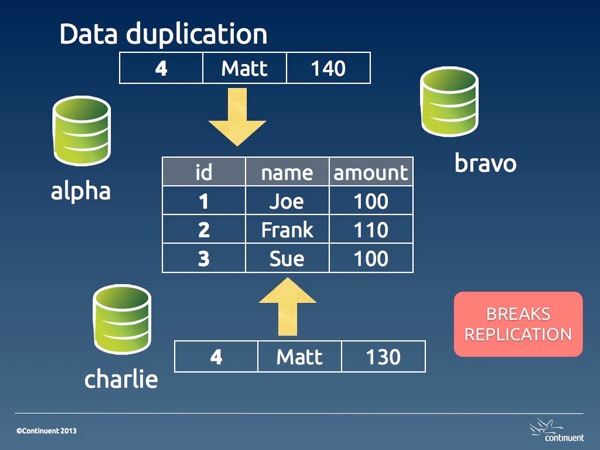

Image #1. Data duplication

In its basic shape, data duplication happens when two masters insert the same data. One of the two transactions will be committed before the other, and the second one will fail with a rightful duplicate key error. The reason for this occurrence is often an application bug, or a design flaw, or sometimes an human error that the application fails to detect properly (a different application bug).

We will see the possible solution in part 2 of this article. For now, it's enough to know that this kind of error is the best kind of conflicts that can happen to you. Since it breaks replication, it has the positive effect of alerting the DBA about an error.

Depending on which event is applied first, you have different situations. If the remote event is applied first, you have a real conflict, where the legitimate event can't get to the database. This state requires a longer set of actions: you need to clean up both the origin and the destination servers. If the legitimate action is applied first, then you don't have a real conflict, as the wrong event was applied only in one server, and you need to clean up only the origin. If you have more than two masters in your topology, you may find that the damage could be a mix of both cases, as the wrong event may arrive to distant servers before or after the right one.

auto_increment_offsets and hidden data duplication

There is a popular belief that conflicts with multi-master topologies can be avoided using auto_increment_increment and auto_increment_offset. The combination of these two variables makes sure that auto_increment values are generated with different intervals for each master. For example, if I have three masters and I am using increment 3, the first master will generate 1,4,7,10, the second one will have 2,5,8,11, and the third one 3,6,9,12.

Where does this paradigm work? When the primary key is the auto generated column, then the conflict is prevented. For example, in a table that records bug reports, the incrementing value for the bug number is a legitimate primary key. If two masters enter a bug simultaneously, the bug numbers will have different values. (there will most likely be gaps in the bug numbers, and this could be a non desirable side effect, bt then, I am not advocating this system, although I made this mistake many years ago.)

However, if the table has a natural primary key that is not an auto-incremented value, conflicts are possible, and likely. In that case, you will have a duplicated key error, as in the case seen before.

Disaster strikes when the table has a poorly chosen auto_increment primary key.

For example, let's consider a departments table with this structure:

CREATE TABLE departments (

dept_id int not null auto_increment primary key,

dept_name varchar(30) not null

);

If two masters need to insert a new department named 'special ops', we may end up with this situation:

select * from departments; +---------+-------------+ | dept_id | dept_name | +---------+-------------+ | 2 | special ops | | 4 | special ops | +---------+-------------+

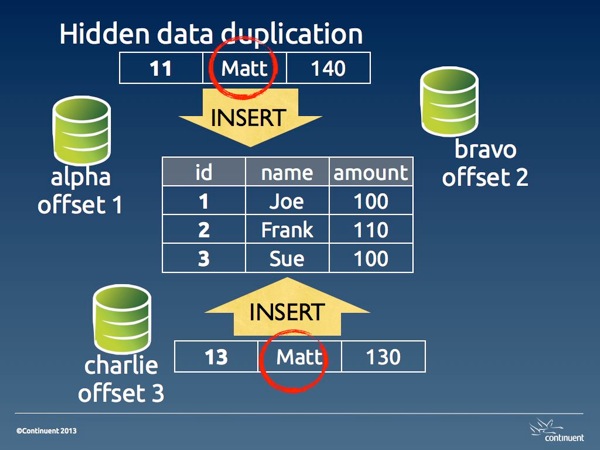

Image #2. Hidden data duplication

This is what I define hidden data duplication, because you have duplicated data, and no errors that may warn you of the problem. Here the issue is aggravated by the fact that 'department' is likely a lookup table. Thus, there will be a table where 'special ops' is referenced using dept_id 2, and another table where it is used with dept_id 4.

The reason for hidden data duplication is poor choice of primary key, and failure to enforce unique values in columns that should be such.

Data inconsistency

When two UPDATE statements are executed on the same record from different sources, there is the possibility of spoiling the data accuracy in several ways. The amount of damage depend on the type of update (with absolute values or calculated ones) and on whether we are using statement-based or row-based replication.

With absolute values, the last value inserted overwrites the previous one.

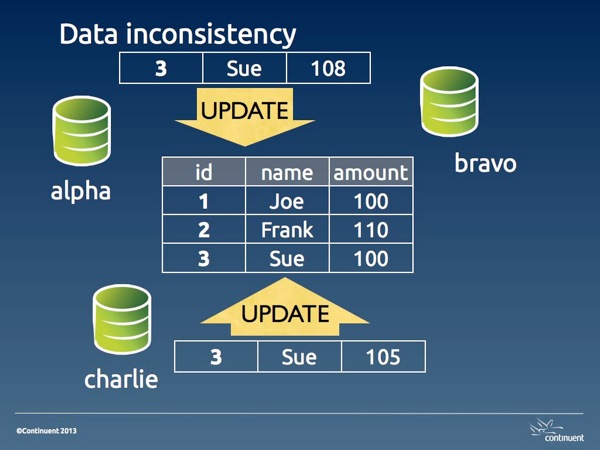

Image #3. Data inconsistency

With calculated values, the data inconsistency may change with surprising consequences. For example, if we have a table accounts:

select * from accounts; +----+--------+ | id | amount | +----+--------+ | 1 | 1000 | | 2 | 1000 | | 3 | 1000 | +----+--------+

If a statement that doubles the account for ID 2 is executed in two masters, then we will have an amount of 4,000 instead of 2,000. Using row-base replication can protect you against this kind of disaster.

Data loss

When a DELETE statement is entered for a record that later we want to read, we have lost data. This kind of DELETEs may happen because of bad operations, or more likely because of data inconsistencies that alter the conditions used for deleting.

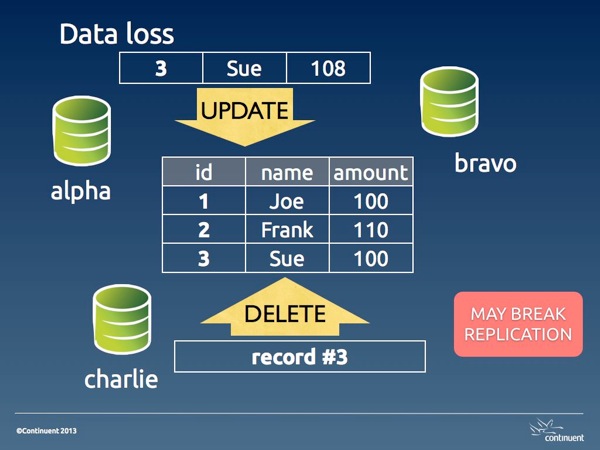

Image #4. Data loss

Unwanted DELETE operations may also break replication when the DELETE happens before an UPDATE on the same record. Either way, a data loss conflict is hard to resolve because the data has gone away. Depending on the amount of the loss, we may need to restore the table completely or partially from a backup.

Why conflicts happen

To understand why conflicts happen, let's first see why they don't happen when we try a conflicting operation in the same server.

SESSION_1 > create table people (id int not null primary key, name varchar(40) not null, amount int); Query OK, 0 rows affected (0.01 sec) SESSION_1 > insert into people values (1, 'Joe', 100), (2, 'Frank', 110), (3, 'Sue', 100); Query OK, 3 rows affected (0.00 sec) Records: 3 Duplicates: 0 Warnings: 0 SESSION_1 > set autocommit=0; Query OK, 0 rows affected (0.00 sec) SESSION_1 > begin; Query OK, 0 rows affected (0.00 sec) SESSION_2 > set autocommit=0; Query OK, 0 rows affected (0.00 sec) SESSION_2 > select * from people; +----+-------+--------+ | id | name | amount | +----+-------+--------+ | 1 | Joe | 100 | | 2 | Frank | 110 | | 3 | Sue | 100 | +----+-------+--------+ 3 rows in set (0.00 sec) SESSION_2 > begin; Query OK, 0 rows affected (0.00 sec) SESSION_1 > insert into people values (4,'Matt', 140); Query OK, 1 row affected (0.00 sec) SESSION_2 > insert into people values (4,'Matt', 130); # ... hanging SESSION_1 > commit; Query OK, 0 rows affected (0.01 sec) SESSION_2 > insert into people values (4,'Matt', 130); # ... ERROR 1062 (23000): Duplicate entry '4' for key 'PRIMARY'

What happens here is that user in session 1 inserts a record at the same time when user in session 2 inserts the same record. When the record is inserted in session 1, InnoDB creates a lock. If you look at the InnoDB locks before SESSION_1 issues a commit, you will see it:

SESSION_3 > select * from information_schema.innodb_locks\G

*************************** 1. row ***************************

lock_id: 30B:0:307:5

lock_trx_id: 30B

lock_mode: S

lock_type: RECORD

lock_table: `test`.`people`

lock_index: `PRIMARY`

lock_space: 0

lock_page: 307

lock_rec: 5

lock_data: 4

*************************** 2. row ***************************

lock_id: 30C:0:307:5

lock_trx_id: 30C

lock_mode: X

lock_type: RECORD

lock_table: `test`.`people`

lock_index: `PRIMARY`

lock_space: 0

lock_page: 307

lock_rec: 5

lock_data: 4

2 rows in set (0.00 sec)You can see that there is an exclusive lock on the record.

This lock effectively prevents a duplicate.

Now, if you imagine the two sessions happening on different servers, the two users are in a similar situation, i.e. they don't know that a concurrent update on the same record is being attempted. But the difference is that, in asynchronous replication, there is no lock applied on a remote server. If the two transactions are committed at the same instant, both of them will be stored in their local server, and both of them will fail and break replication on the remote server. If the record in session 1 is applied a few seconds before the other, the user in session 2 will not be able to commit, same as it happened with the concurrent insertion in the single server example above. In this case, the conflict looks exactly as it happened in a single server.

However, if both commits happen at the same time, both users will have a positive feedback, since their transaction will return success, and both are happy, at least temporarily. Unbeknown to both, though, their transaction has failed on the remote server, and replication is broken on both servers, leaving each with a bit of mess to clean up.

These examples show that conflicts are often a matter of chance. Depending on the timing of the operations, we might catch them as they happen and take action before the conflict spreads its side effects, or we only notice later on, when replication fails, and the conflict has already spoiled our data.

Summing up

Conflicts in multi-master topologies are the consequence of unwanted or unexpected operations. The effects of a conflict range from data inconsistency to data loss, and may also cause replication to break.

The most desirable outcome for a conflict is a replication error, because it prevents further spreading of the error and alerts the DBA about a possible issue.

In the second part of this article, we will look at some of the methods to deal with conflicts in various scenarios.

No comments:

Post a Comment