Previous episodes:

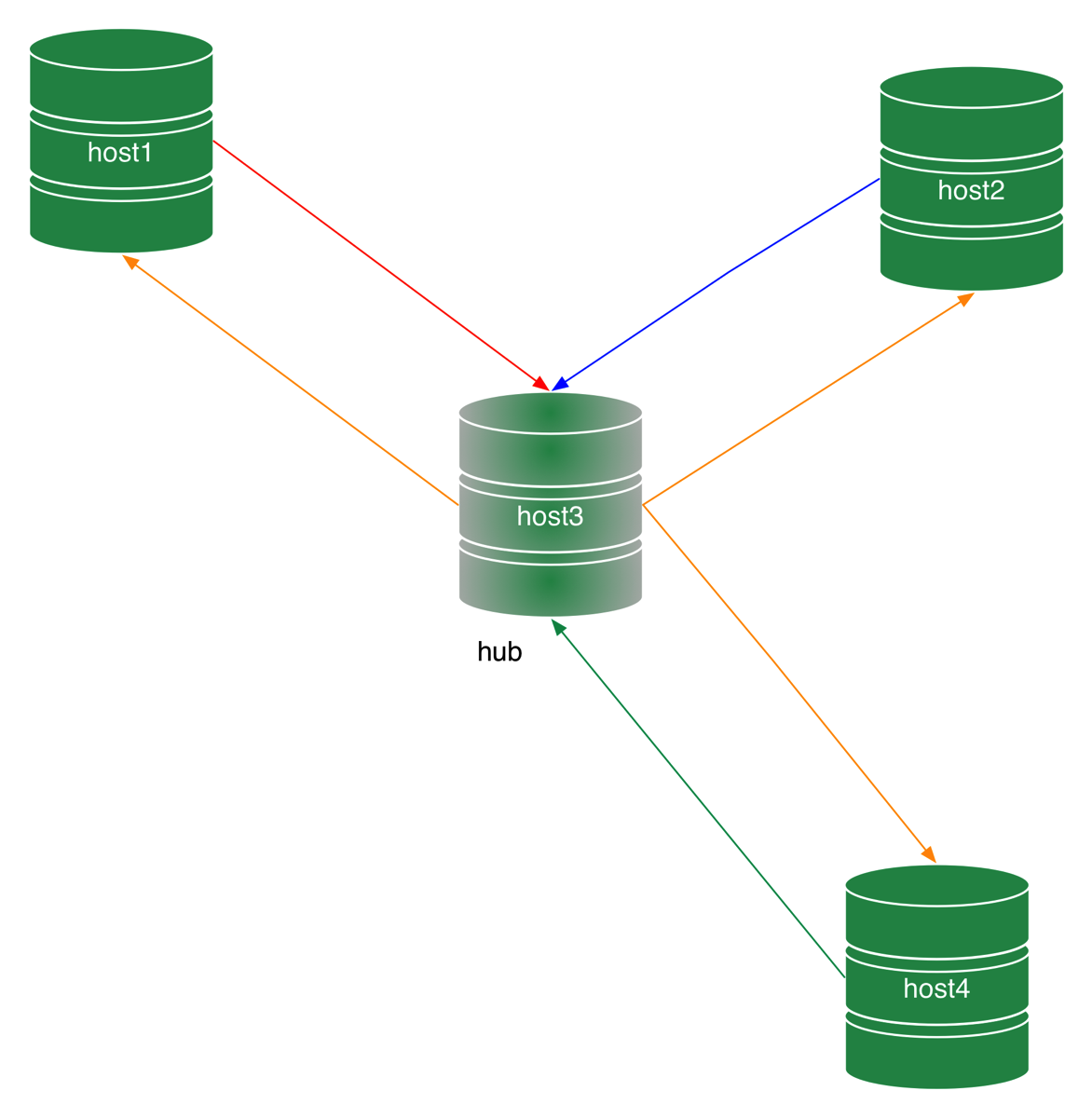

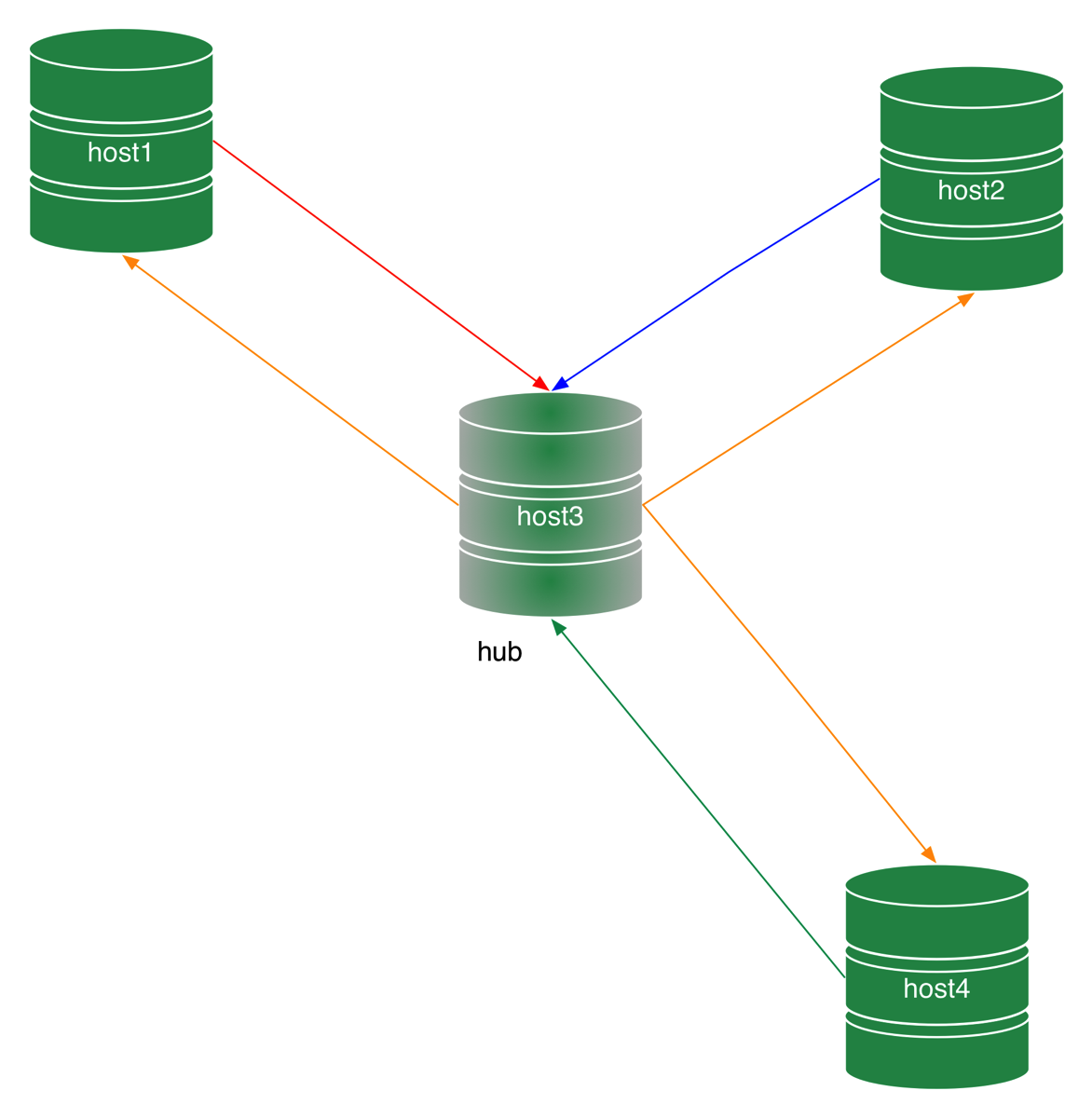

We can achieve the same result as in a P2P all-masters topology by trading connections for stability. In a star topology (Figure 1) all nodes are masters, but they do not connect to each other directly. There is a special node, named hub, which receives the changes produced by each endpoint and spreads them to the others.

Figure 1 - A star topology

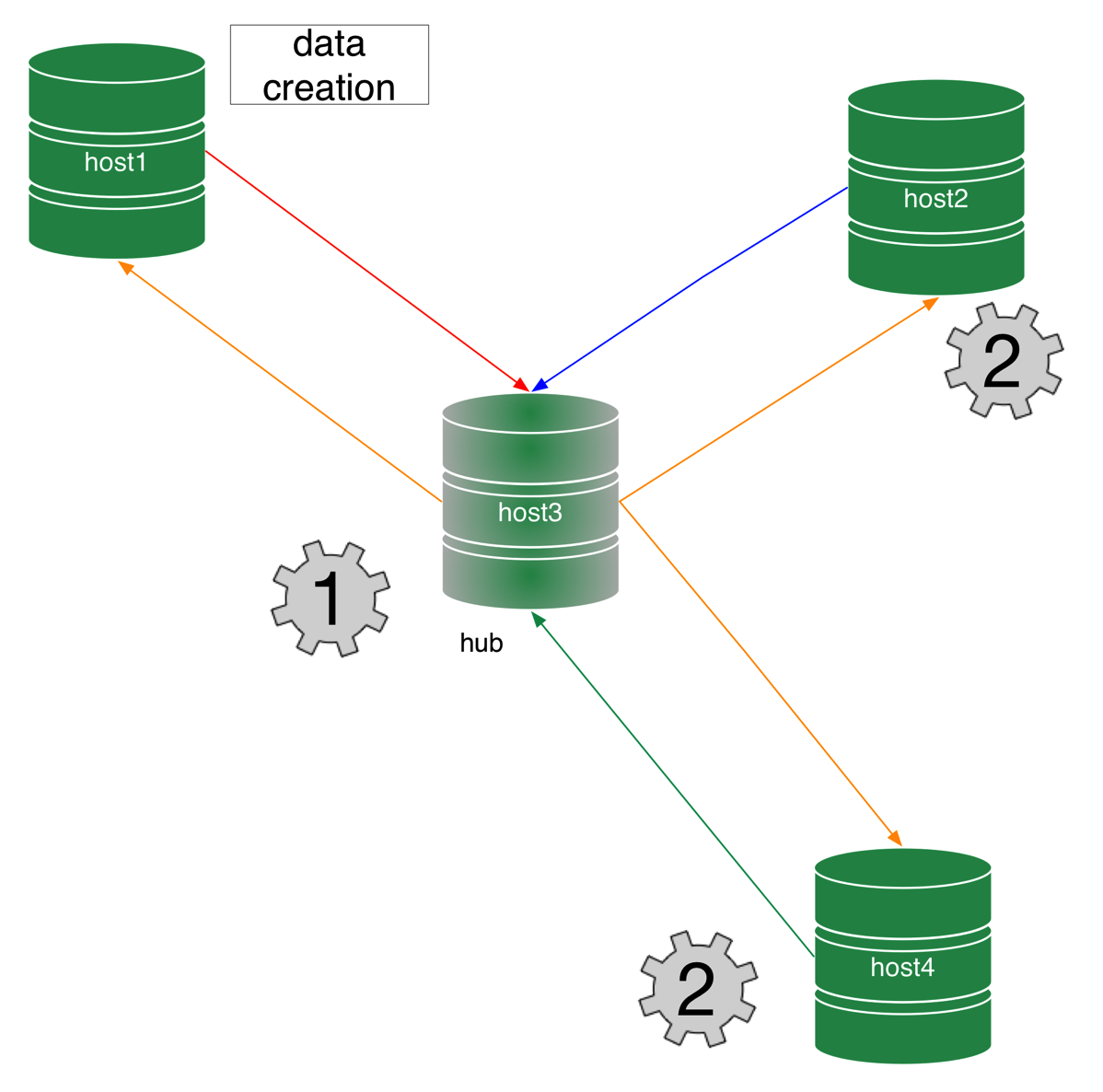

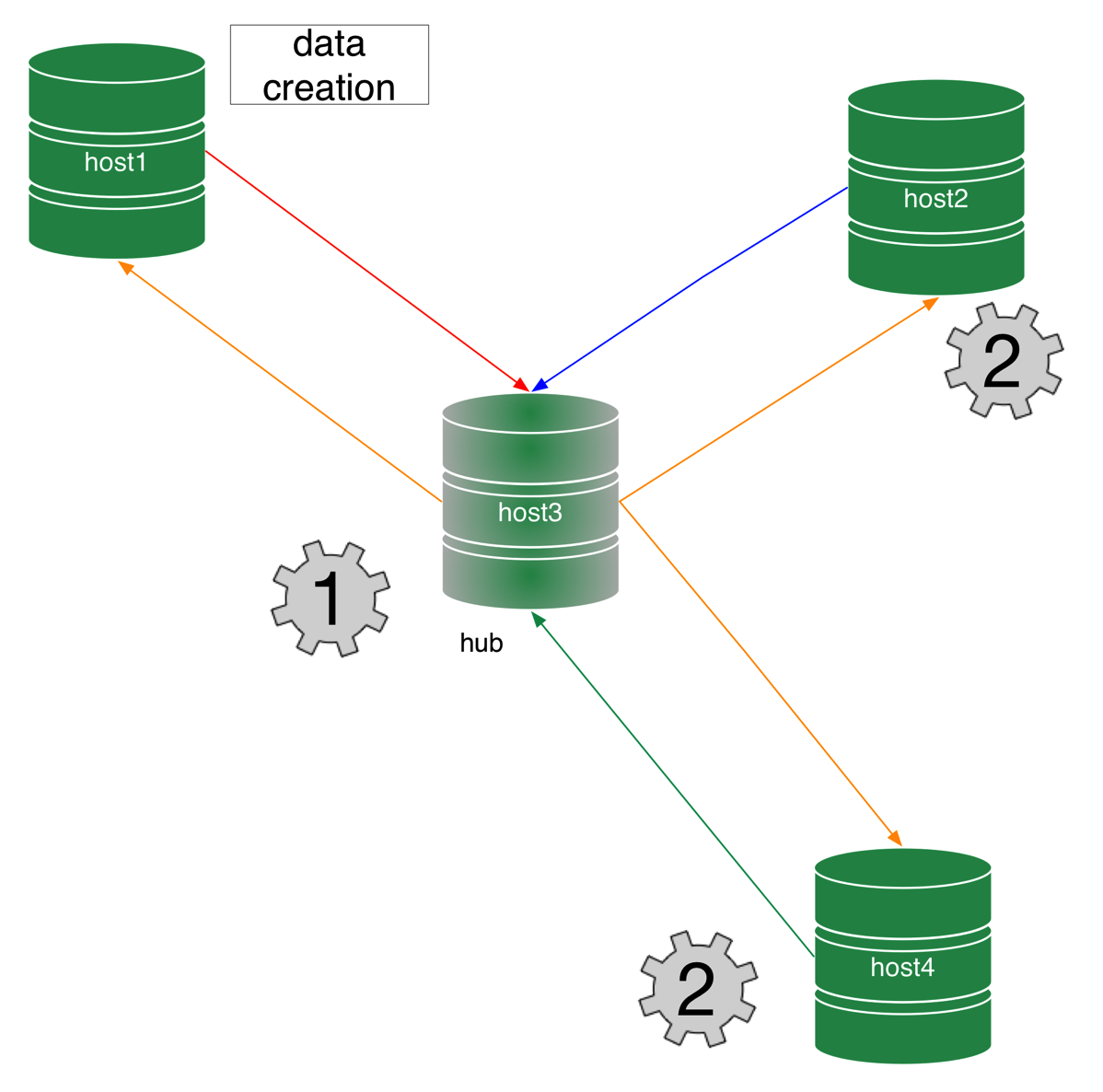

Compared to ring replication, a star topology does not achieve the dangerous depth of processing (see Part 3) of N-1, where the last node requires N-1 acts of replication to be updated. In fact, the depth of processing of a star topology can be either 1, if the data is produced in the hub, or 2, if it is produced in the endpoints (See figures 2 and 3).

Figure 2 - A star topology depth of processing from hub

Figure 3 - A star topology depth of processing from endpoints

You can think of a star topology as a set of ring topologies strung together.

Figure 4 - A star topology is like many rings together

The way it works is simple. All endpoint nodes are configured as we have seen for fan-in and P2P topologies. The hub node, instead, has an extra option: it enables log-slave-updates. With this addition, the hub is able to receive data from any master, and every endpoint can then pull the data from the hub.

The advantage is clear: While we had 12 connections for a 4 node deployment in an all-masters P2P topology, a star topology only requires 6 of them. To add a 5th node, you needed to add 8 connections (4 in the new node, and one each in the previous nodes) for a total of 20 connections. In a star topology, you would only add 2 connections (one from the new endpoint to the hub, and one from the hub to the new endpoint) for a total of 8.

If your deployment requires many nodes and all need to be masters, a star topology is a good candidate.

There is, of course, a price to pay. In addition to the risk of bad performance (of which I have talked at length in Multiple masters : attraction to the stars,) a star topology has a single point of failure (SPOF) and you must get ready to deal with it. We will see a full example, but for now you need to know that, in order to successfully promote an endpoint to the role of hub, you need to enable log-slave-updates in one or more endpoints that you want to be hub-candidates.

You must also remember to enable log-slave-updates in the hub (and in one or more endpoints that you want to set as candidate to hub replacement in case of failure.

The procedure for MariaDB 10 is the same, with the different syntax that we have noted in the previous articles.

To see a practical example, we will use another script from mysql-replication-samples. Using a group of 5 sandboxes, we will create a system with 4 endpoint and one hub.

After that is done, we can test the deployment:

To show what the monitoring looks like in a star topology, we could run a full SHOW SLAVE STATUS for each node, but that would take too much space. Basically, you will see the same output that we have seen for all-masters, with a big difference: endpoints have only one channel, while the hub has 4. Let's see just a simplified account:

With the hub gone, we will see that the endpoints are not updated:

The ingredients to create your own customized deployments are the following:

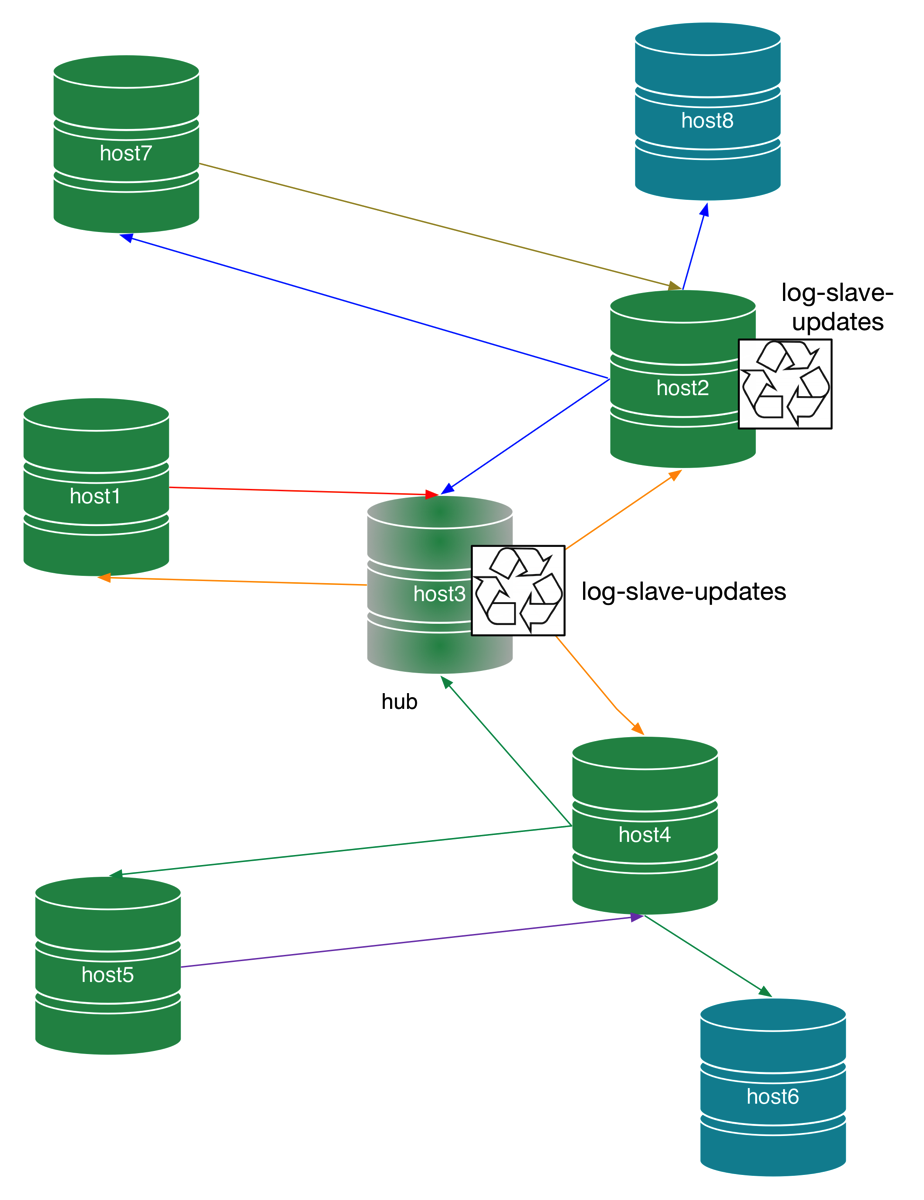

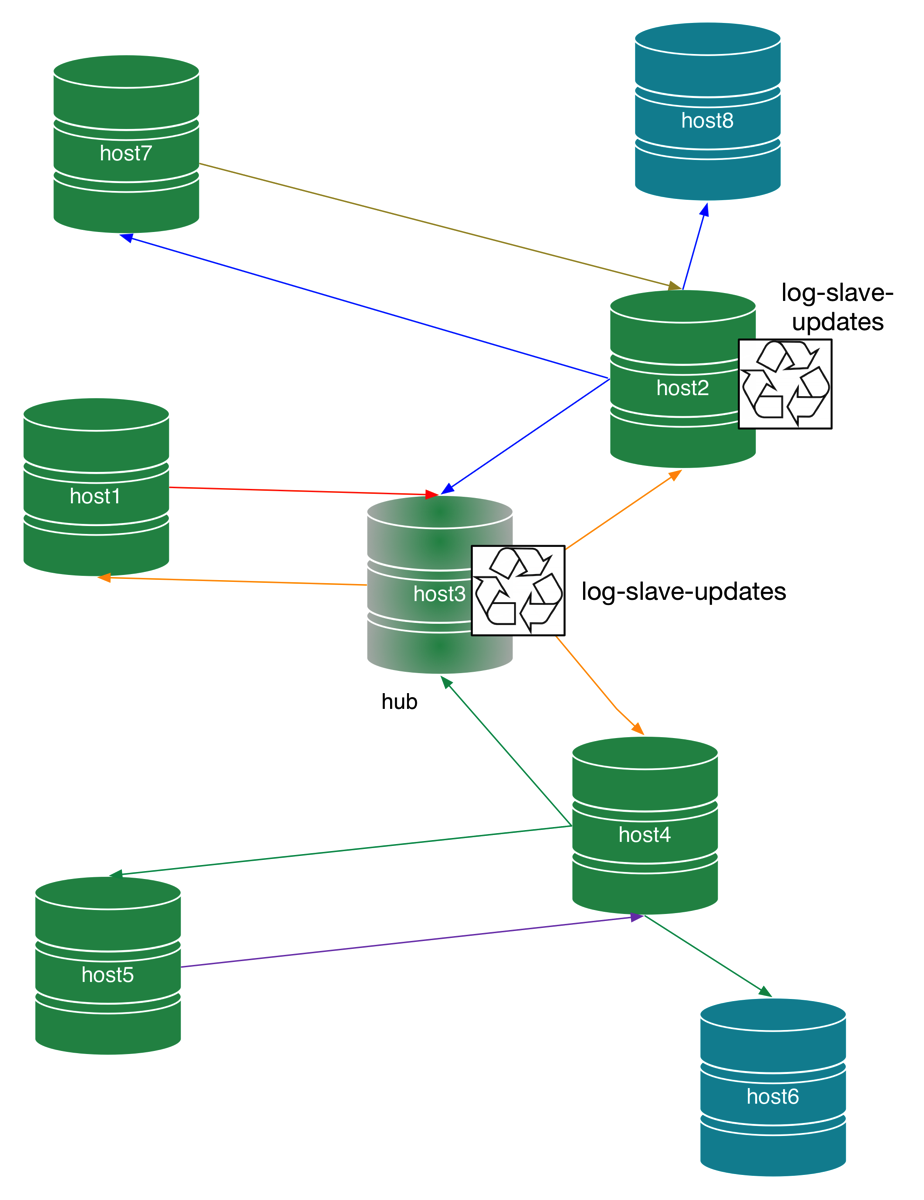

Figure 5 - A hybrid topology including 1 star, 2 P2P, and 2 simple slaves

In Figure 5, node #4 is connected with node #5 in P2P all-masters. Neither node has log-slave-updated enabled. From node #4 we also have a simple slave (node #6).

We have a mirror deployment with nodes #2, #7, and #8, but this time the connection node has log-slave-updated enabled.

If you want to try this at home, you can use a proof-of-concept from mysql-replication-samples. Here is the relevant chunk from that script:

Conversely, in node #7 and #8 we get everything from every master, except from node #5, because it lacks the pass-through of log-slave-update in node #4, its connection to the cluster.

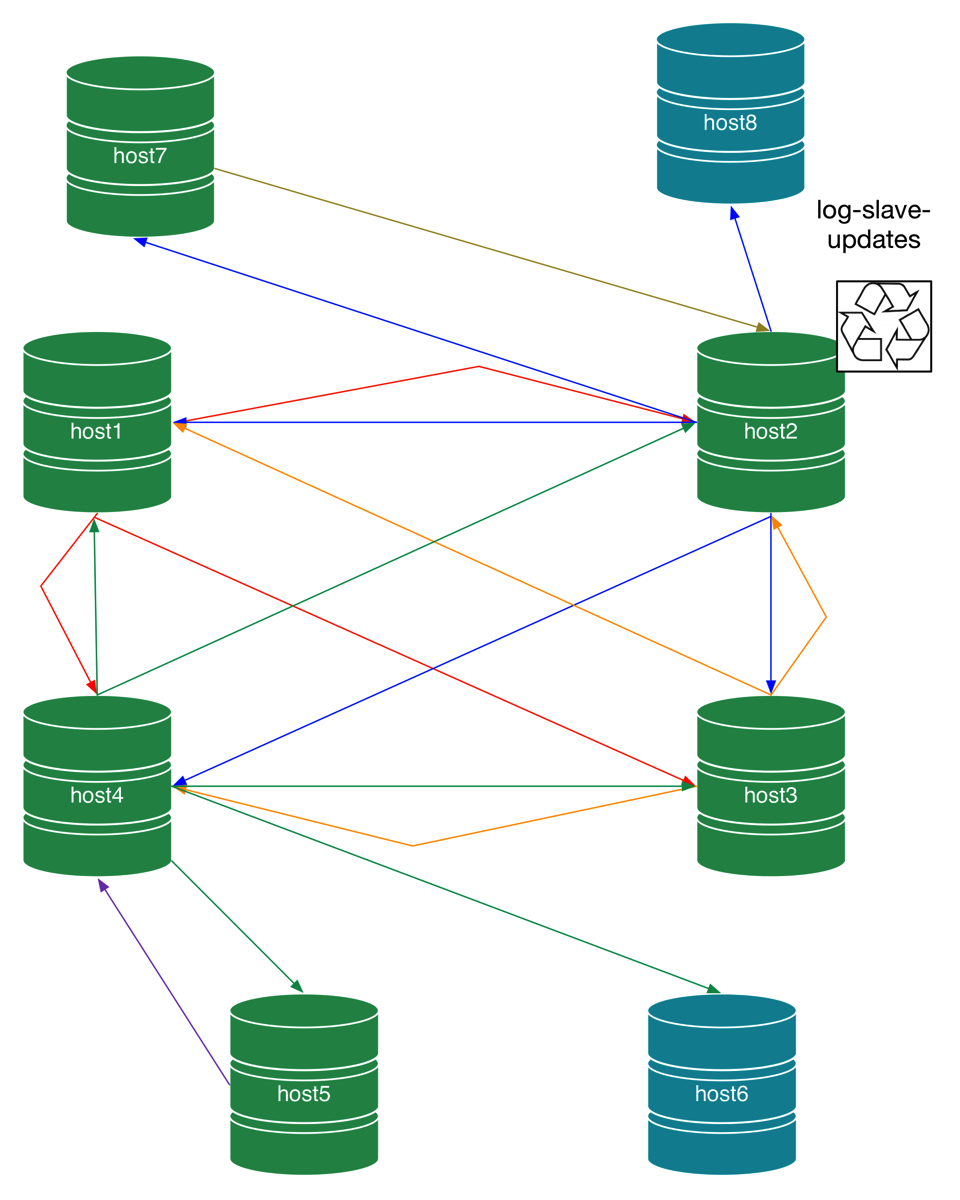

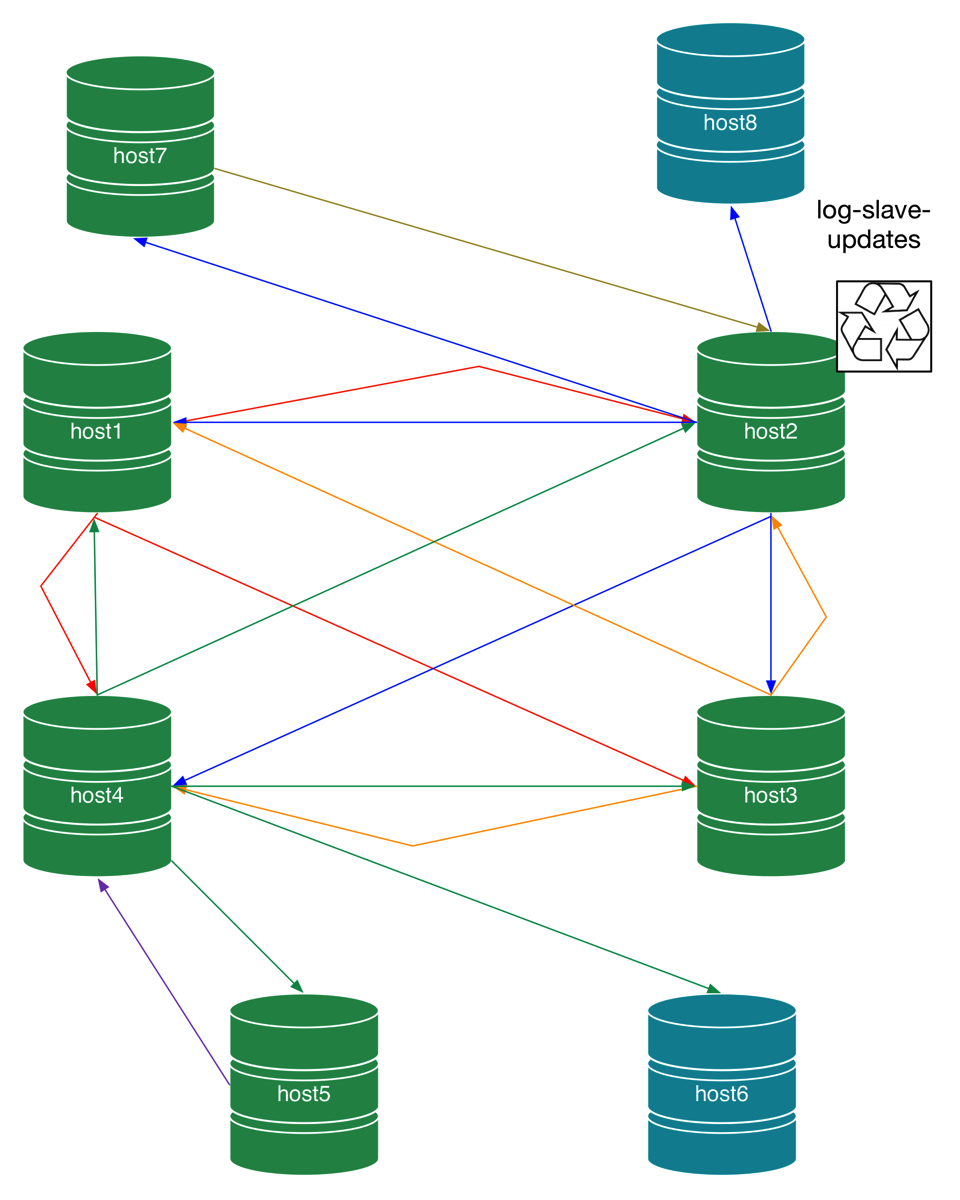

Figure 6 - A hybrid topology including 3 P2P, and 2 simple slaves

In a similar way, we can extend a all-masters P2P topology to have 2 more nodes in master-to-master replication with one node (Figure 6). The outcome of this deployment will be the same seen for Figure 5, with the difference that this topology requires more connections.

Figure 7 - A hybrid topology including 2 P2P clusters joined by a node.

Using the same principle, we can join two clusters by enabling log-slave-updates in one of their nodes and putting these enhanced nodes in P2P replication. In Figure 7 you see how you may join two all-masters P2P topologies.

Figure 8 - A hybrid topology including 2 star clusters joined by their hubs.

Joining two star topologies does not require any extra operations except running CHANGE MASTER TO, because the hubs are already enabled for dispatching events.

Figure 9 - A hybrid topology including 1 P2P cluster and a star cluster joined by a node.

Finally, you can join two different topologies by linking one node from each, like in figure 9.

Setting up a star topology makes us understand the building blocks of complex topologies. Armed with this knowledge, we can create hybrid topologies that fit our needs.

- MySQL replication monitoring 101

- MySQL replication in action - Part 1: GTID & Co

- MySQL replication in action - Part 2 - Fan-in topology

- MySQL replication in action - Part 3 - All-masters P2P topology

Introducing star topology.

In all-masters P2P topologies, we have seen that we have a way of deploying a topology where all nodes are masters, and achieve better efficiency and stability than ring topologies. That method comes at the price of a complex setup, which requires, for a N-node cluster, N*(N-1) connections.We can achieve the same result as in a P2P all-masters topology by trading connections for stability. In a star topology (Figure 1) all nodes are masters, but they do not connect to each other directly. There is a special node, named hub, which receives the changes produced by each endpoint and spreads them to the others.

Figure 1 - A star topology

Compared to ring replication, a star topology does not achieve the dangerous depth of processing (see Part 3) of N-1, where the last node requires N-1 acts of replication to be updated. In fact, the depth of processing of a star topology can be either 1, if the data is produced in the hub, or 2, if it is produced in the endpoints (See figures 2 and 3).

Figure 2 - A star topology depth of processing from hub

Figure 3 - A star topology depth of processing from endpoints

You can think of a star topology as a set of ring topologies strung together.

Figure 4 - A star topology is like many rings together

The way it works is simple. All endpoint nodes are configured as we have seen for fan-in and P2P topologies. The hub node, instead, has an extra option: it enables log-slave-updates. With this addition, the hub is able to receive data from any master, and every endpoint can then pull the data from the hub.

The advantage is clear: While we had 12 connections for a 4 node deployment in an all-masters P2P topology, a star topology only requires 6 of them. To add a 5th node, you needed to add 8 connections (4 in the new node, and one each in the previous nodes) for a total of 20 connections. In a star topology, you would only add 2 connections (one from the new endpoint to the hub, and one from the hub to the new endpoint) for a total of 8.

If your deployment requires many nodes and all need to be masters, a star topology is a good candidate.

There is, of course, a price to pay. In addition to the risk of bad performance (of which I have talked at length in Multiple masters : attraction to the stars,) a star topology has a single point of failure (SPOF) and you must get ready to deal with it. We will see a full example, but for now you need to know that, in order to successfully promote an endpoint to the role of hub, you need to enable log-slave-updates in one or more endpoints that you want to be hub-candidates.

Installing a star topology

The procedure to install a star topology in MySQL 5.7 requires several steps for each endpoint:- in the endpoint:

- create a channel named hub-to-$nodename, where $nodename is the name of the endpoint;

- start slave for channel 'hub-to-$nodename'

- in the hub:

- create a channel named $nodename-to-hub

- start slave for channel '$nodename-to-hub'

You must also remember to enable log-slave-updates in the hub (and in one or more endpoints that you want to set as candidate to hub replacement in case of failure.

The procedure for MariaDB 10 is the same, with the different syntax that we have noted in the previous articles.

To see a practical example, we will use another script from mysql-replication-samples. Using a group of 5 sandboxes, we will create a system with 4 endpoint and one hub.

$ ./set_star_topology.sh 5.7.8 mysql

installing node 1

installing node 2

installing node 3

installing node 4

installing node 5

group directory installed in $HOME/sandboxes/multi_msb_5_7_8

# server: 1:

# server: 2:

# server: 3:

# server: 4:

# server: 5:

# option 'master-info-repository=table' added to node1 configuration file

# option 'relay-log-info-repository=table' added to node1 configuration file

# option 'gtid_mode=ON' added to node1 configuration file

# option 'enforce-gtid-consistency' added to node1 configuration file

. sandbox server started

# option 'master-info-repository=table' added to node2 configuration file

# option 'relay-log-info-repository=table' added to node2 configuration file

# option 'gtid_mode=ON' added to node2 configuration file

# option 'enforce-gtid-consistency' added to node2 configuration file

. sandbox server started

# option 'master-info-repository=table' added to node3 configuration file

# option 'relay-log-info-repository=table' added to node3 configuration file

# option 'gtid_mode=ON' added to node3 configuration file

# option 'enforce-gtid-consistency' added to node3 configuration file

# option 'log-slave-updates' added to node3 configuration file

. sandbox server started

# option 'master-info-repository=table' added to node4 configuration file

# option 'relay-log-info-repository=table' added to node4 configuration file

# option 'gtid_mode=ON' added to node4 configuration file

# option 'enforce-gtid-consistency' added to node4 configuration file

. sandbox server started

# option 'master-info-repository=table' added to node5 configuration file

# option 'relay-log-info-repository=table' added to node5 configuration file

# option 'gtid_mode=ON' added to node5 configuration file

# option 'enforce-gtid-consistency' added to node5 configuration file

. sandbox server started

# HUB node3 port: 8381

# node node1 port: 8379

./node1/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node1'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8379, MASTER_AUTO_POSITION=1 for channel 'node1_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node1_hub'"

./node1/use -e "START SLAVE FOR CHANNEL 'hub_node1'"

# node node2 port: 8380

./node2/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node2'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8380, MASTER_AUTO_POSITION=1 for channel 'node2_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node2_hub'"

./node2/use -e "START SLAVE FOR CHANNEL 'hub_node2'"

# node node4 port: 8382

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node4'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'node4_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node4_hub'"

./node4/use -e "START SLAVE FOR CHANNEL 'hub_node4'"

# node node5 port: 8383

./node5/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node5'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8383, MASTER_AUTO_POSITION=1 for channel 'node5_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node5_hub'"

./node5/use -e "START SLAVE FOR CHANNEL 'hub_node5'"

$ ./node4/add_option log-slave-updates

# option 'log-slave-updates' added to configuration file

. sandbox server started

After that is done, we can test the deployment:

$ ./test_all_masters_replication.sh

# NODE node1 created table test_node1

# NODE node2 created table test_node2

# NODE node3 created table test_node3

# NODE node4 created table test_node4

# NODE node5 created table test_node5

# Data in all nodes

101

1 101 8379 node1 2015-08-14 22:21:02

1 102 8380 node2 2015-08-14 22:21:02

1 103 8381 node3 2015-08-14 22:21:02

1 104 8382 node4 2015-08-14 22:21:02

1 105 8383 node5 2015-08-14 22:21:02

102

1 101 8379 node1 2015-08-14 22:21:02

1 102 8380 node2 2015-08-14 22:21:02

1 103 8381 node3 2015-08-14 22:21:02

1 104 8382 node4 2015-08-14 22:21:02

1 105 8383 node5 2015-08-14 22:21:02

103

1 101 8379 node1 2015-08-14 22:21:02

1 102 8380 node2 2015-08-14 22:21:02

1 103 8381 node3 2015-08-14 22:21:02

1 104 8382 node4 2015-08-14 22:21:02

1 105 8383 node5 2015-08-14 22:21:02

104

1 101 8379 node1 2015-08-14 22:21:02

1 102 8380 node2 2015-08-14 22:21:02

1 103 8381 node3 2015-08-14 22:21:02

1 104 8382 node4 2015-08-14 22:21:02

1 105 8383 node5 2015-08-14 22:21:02

105

1 101 8379 node1 2015-08-14 22:21:02

1 102 8380 node2 2015-08-14 22:21:02

1 103 8381 node3 2015-08-14 22:21:02

1 104 8382 node4 2015-08-14 22:21:02

1 105 8383 node5 2015-08-14 22:21:02

$ for N in 1 2 3 4 5; do ./n$N -e 'select @@server_id, @@server_uuid; select @@global.gtid_executed\G'; done

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 101 | 519dd7f0-42c4-11e5-8995-590303071a70 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 519dd7f0-42c4-11e5-8995-590303071a70:1-3,

5615010a-42c4-11e5-873f-9904485cf6bc:1-3,

5a8809f8-42c4-11e5-9c60-f36f50446736:1-3,

5ecdf2ca-42c4-11e5-b861-a22aea18b64b:1-3,

630bab66-42c4-11e5-8807-bb8c8c1ce62c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 102 | 5615010a-42c4-11e5-873f-9904485cf6bc |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 519dd7f0-42c4-11e5-8995-590303071a70:1-3,

5615010a-42c4-11e5-873f-9904485cf6bc:1-3,

5a8809f8-42c4-11e5-9c60-f36f50446736:1-3,

5ecdf2ca-42c4-11e5-b861-a22aea18b64b:1-3,

630bab66-42c4-11e5-8807-bb8c8c1ce62c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 103 | 5a8809f8-42c4-11e5-9c60-f36f50446736 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 519dd7f0-42c4-11e5-8995-590303071a70:1-3,

5615010a-42c4-11e5-873f-9904485cf6bc:1-3,

5a8809f8-42c4-11e5-9c60-f36f50446736:1-3,

5ecdf2ca-42c4-11e5-b861-a22aea18b64b:1-3,

630bab66-42c4-11e5-8807-bb8c8c1ce62c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 104 | 5ecdf2ca-42c4-11e5-b861-a22aea18b64b |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 519dd7f0-42c4-11e5-8995-590303071a70:1-3,

5615010a-42c4-11e5-873f-9904485cf6bc:1-3,

5a8809f8-42c4-11e5-9c60-f36f50446736:1-3,

5ecdf2ca-42c4-11e5-b861-a22aea18b64b:1-3,

630bab66-42c4-11e5-8807-bb8c8c1ce62c:1-3

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 105 | 630bab66-42c4-11e5-8807-bb8c8c1ce62c |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 519dd7f0-42c4-11e5-8995-590303071a70:1-3,

5615010a-42c4-11e5-873f-9904485cf6bc:1-3,

5a8809f8-42c4-11e5-9c60-f36f50446736:1-3,

5ecdf2ca-42c4-11e5-b861-a22aea18b64b:1-3,

630bab66-42c4-11e5-8807-bb8c8c1ce62c:1-3

To show what the monitoring looks like in a star topology, we could run a full SHOW SLAVE STATUS for each node, but that would take too much space. Basically, you will see the same output that we have seen for all-masters, with a big difference: endpoints have only one channel, while the hub has 4. Let's see just a simplified account:

$ ./use_all 'SHOW SLAVE STATUS\G' | grep 'server:\|Running:\|Channel_Name:'

# server: 1:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: hub_node1

# server: 2:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: hub_node2

# server: 3:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: node1_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: node2_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: node4_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: node5_hub

# server: 4:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: hub_node4

# server: 5:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Channel_Name: hub_node5

$ ./set_star_topology.sh ma10.0.20 mariadb

installing node 1

installing node 2

installing node 3

installing node 4

installing node 5

group directory installed in $HOME/sandboxes/multi_msb_ma10_0_20

# server: 1:

# server: 2:

# server: 3:

# server: 4:

# server: 5:

# option 'gtid_domain_id=1010' added to node1 configuration file

. sandbox server started

# option 'gtid_domain_id=1020' added to node2 configuration file

. sandbox server started

# option 'log-slave-updates' added to node3 configuration file

# option 'gtid_domain_id=1030' added to node3 configuration file

. sandbox server started

# option 'gtid_domain_id=1040' added to node4 configuration file

. sandbox server started

# option 'gtid_domain_id=1050' added to node5 configuration file

. sandbox server started

# HUB node3 port: 19023

# node node1 port: 19021

./node1/use -e "CHANGE MASTER 'hub_node1' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19023, MASTER_USE_GTID=current_pos "

./node3/use -e "CHANGE MASTER 'node1_hub' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19021, MASTER_USE_GTID=current_pos "

./node3/use -e "START SLAVE 'node1_hub' "

./node1/use -e "START SLAVE 'hub_node1' "

# node node2 port: 19022

./node2/use -e "CHANGE MASTER 'hub_node2' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19023, MASTER_USE_GTID=current_pos "

./node3/use -e "CHANGE MASTER 'node2_hub' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19022, MASTER_USE_GTID=current_pos "

./node3/use -e "START SLAVE 'node2_hub' "

./node2/use -e "START SLAVE 'hub_node2' "

# node node4 port: 19024

./node4/use -e "CHANGE MASTER 'hub_node4' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19023, MASTER_USE_GTID=current_pos "

./node3/use -e "CHANGE MASTER 'node4_hub' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19024, MASTER_USE_GTID=current_pos "

./node3/use -e "START SLAVE 'node4_hub' "

./node4/use -e "START SLAVE 'hub_node4' "

# node node5 port: 19025

./node5/use -e "CHANGE MASTER 'hub_node5' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19023, MASTER_USE_GTID=current_pos "

./node3/use -e "CHANGE MASTER 'node5_hub' TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=19025, MASTER_USE_GTID=current_pos "

./node3/use -e "START SLAVE 'node5_hub' "

./node5/use -e "START SLAVE 'hub_node5' "

$ ./use_all 'SHOW ALL SLAVES STATUS\G' | grep 'server:\|Running:\|Connection_name:'

# server: 1:

Connection_name: hub_node1

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

# server: 2:

Connection_name: hub_node2

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

# server: 3:

Connection_name: node1_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Connection_name: node2_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Connection_name: node4_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Connection_name: node5_hub

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

# server: 4:

Connection_name: hub_node4

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

# server: 5:

Connection_name: hub_node5

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

$ for N in 1 2 3 4 5; do ./n$N -e 'select @@server_id, @@gtid_domain_id; select * from mysql.gtid_slave_pos' ; done

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 101 | 1010 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 5 | 102 | 2 |

| 1020 | 6 | 102 | 3 |

| 1030 | 8 | 103 | 2 |

| 1030 | 9 | 103 | 3 |

| 1040 | 11 | 104 | 2 |

| 1040 | 12 | 104 | 3 |

| 1050 | 14 | 105 | 2 |

| 1050 | 15 | 105 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 102 | 1020 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 5 | 102 | 2 |

| 1020 | 6 | 102 | 3 |

| 1030 | 8 | 103 | 2 |

| 1030 | 9 | 103 | 3 |

| 1040 | 11 | 104 | 2 |

| 1040 | 12 | 104 | 3 |

| 1050 | 14 | 105 | 2 |

| 1050 | 15 | 105 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 103 | 1030 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 5 | 102 | 2 |

| 1020 | 6 | 102 | 3 |

| 1040 | 8 | 104 | 2 |

| 1040 | 9 | 104 | 3 |

| 1050 | 11 | 105 | 2 |

| 1050 | 12 | 105 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 104 | 1040 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 5 | 102 | 2 |

| 1020 | 6 | 102 | 3 |

| 1030 | 8 | 103 | 2 |

| 1030 | 9 | 103 | 3 |

| 1040 | 11 | 104 | 2 |

| 1040 | 12 | 104 | 3 |

| 1050 | 14 | 105 | 2 |

| 1050 | 15 | 105 | 3 |

+-----------+--------+-----------+--------+

+-------------+------------------+

| @@server_id | @@gtid_domain_id |

+-------------+------------------+

| 105 | 1050 |

+-------------+------------------+

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 1010 | 2 | 101 | 2 |

| 1010 | 3 | 101 | 3 |

| 1020 | 5 | 102 | 2 |

| 1020 | 6 | 102 | 3 |

| 1030 | 8 | 103 | 2 |

| 1030 | 9 | 103 | 3 |

| 1040 | 11 | 104 | 2 |

| 1040 | 12 | 104 | 3 |

| 1050 | 14 | 105 | 2 |

| 1050 | 15 | 105 | 3 |

+-----------+--------+-----------+--------+

Replacing the hub

Since the hub is the SPOF in a star topology, before attempting to install one, we should at least have an idea of how to replace the hub in case of failure. In our test deployment, we have a candidate to replace the hub in case of need. Let's apply some load to the system by inserting data simultaneously in all endpoints, and killing the hub while the load goes on.With the hub gone, we will see that the endpoints are not updated:

$ for N in 1 2 3 4 5; do ./n$N -e 'select @@server_id, @@server_uuid; select @@global.gtid_executed\G'; done

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 101 | 3d9dec68-42ce-11e5-86be-185460e24dd9 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-1163,

41918672-42ce-11e5-ae5e-27905d218c5f:1-236,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-82,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-75

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 102 | 41918672-42ce-11e5-ae5e-27905d218c5f |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-92,

41918672-42ce-11e5-ae5e-27905d218c5f:1-1163,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-82,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-75

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 104 | 4a72292c-42ce-11e5-9b80-e17cc877fb87 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-92,

41918672-42ce-11e5-ae5e-27905d218c5f:1-236,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-1163,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-75

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 105 | 4ef58dae-42ce-11e5-9981-28967a9fad86 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-92,

41918672-42ce-11e5-ae5e-27905d218c5f:1-236,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-82,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-1163

./node4/use -e "STOP SLAVE FOR CHANNEL 'hub_node4'"

./node4/use -e "STOP SLAVE "

./node4/use -e "RESET SLAVE FOR CHANNEL 'hub_node4'"

./node4/use -e "RESET SLAVE "

# HUB node4 port: 8382

# node node1 port: 8379

./node1/use -e "STOP SLAVE FOR CHANNEL 'hub_node1'"

./node1/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'hub_node1'"

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8379, MASTER_AUTO_POSITION=1 for channel 'node1_hub'"

./node4/use -e "START SLAVE FOR CHANNEL 'node1_hub'"

./node1/use -e "START SLAVE FOR CHANNEL 'hub_node1'"

# node node2 port: 8380

./node2/use -e "STOP SLAVE FOR CHANNEL 'hub_node2'"

./node2/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'hub_node2'"

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8380, MASTER_AUTO_POSITION=1 for channel 'node2_hub'"

./node4/use -e "START SLAVE FOR CHANNEL 'node2_hub'"

./node2/use -e "START SLAVE FOR CHANNEL 'hub_node2'"

# node node5 port: 8383

./node5/use -e "STOP SLAVE FOR CHANNEL 'hub_node5'"

./node5/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'hub_node5'"

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8383, MASTER_AUTO_POSITION=1 for channel 'node5_hub'"

./node4/use -e "START SLAVE FOR CHANNEL 'node5_hub'"

./node5/use -e "START SLAVE FOR CHANNEL 'hub_node5'"

$ for N in 1 2 3 4 5; do ./n$N -e 'select @@server_id, @@server_uuid; select @@global.gtid_executed\G'; done

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 101 | 3d9dec68-42ce-11e5-86be-185460e24dd9 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-1163,

41918672-42ce-11e5-ae5e-27905d218c5f:1-1163,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-1163,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-1163

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 102 | 41918672-42ce-11e5-ae5e-27905d218c5f |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-1163,

41918672-42ce-11e5-ae5e-27905d218c5f:1-1163,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-1163,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-1163

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 104 | 4a72292c-42ce-11e5-9b80-e17cc877fb87 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-1163,

41918672-42ce-11e5-ae5e-27905d218c5f:1-1163,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-1163,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-1163

+-------------+--------------------------------------+

| @@server_id | @@server_uuid |

+-------------+--------------------------------------+

| 105 | 4ef58dae-42ce-11e5-9981-28967a9fad86 |

+-------------+--------------------------------------+

*************************** 1. row ***************************

@@global.gtid_executed: 3d9dec68-42ce-11e5-86be-185460e24dd9:1-1163,

41918672-42ce-11e5-ae5e-27905d218c5f:1-1163,

45f1991e-42ce-11e5-a853-1f71d8e7014b:1-3,

4a72292c-42ce-11e5-9b80-e17cc877fb87:1-1163,

4ef58dae-42ce-11e5-9981-28967a9fad86:1-1163

Hybrid topologies

What we have seen so far are clean cut topologies, where all our servers fit into a simple definition, be it master-slave,fan-in, all-masters, or star. But with the elements that we have examined, we can create topologies that are a mix of the simple ones.The ingredients to create your own customized deployments are the following:

- GTID: don't leave home without it! When dealing with complex topologies, knowing where your data comes from is paramount.

- Point-to-point connections, the ones introduced by multi-source enhancements in MySQL 5.7 and MariaDB 10: You should never mix old fashion connections (with nameless channels: see MySQL replication in action - Part 2 - Fan-in topology)

- log-slave-updates: by using this property wisely, you will create your own hubs when needed.

Figure 5 - A hybrid topology including 1 star, 2 P2P, and 2 simple slaves

In Figure 5, node #4 is connected with node #5 in P2P all-masters. Neither node has log-slave-updated enabled. From node #4 we also have a simple slave (node #6).

We have a mirror deployment with nodes #2, #7, and #8, but this time the connection node has log-slave-updated enabled.

If you want to try this at home, you can use a proof-of-concept from mysql-replication-samples. Here is the relevant chunk from that script:

./node1/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node1'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8379, MASTER_AUTO_POSITION=1 for channel 'node1_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node1_hub'"

./node1/use -e "START SLAVE FOR CHANNEL 'hub_node1'"

# node node2 port: 8380

./node2/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node2'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8380, MASTER_AUTO_POSITION=1 for channel 'node2_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node2_hub'"

./node2/use -e "START SLAVE FOR CHANNEL 'hub_node2'"

# node node4 port: 8382

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8381, MASTER_AUTO_POSITION=1 for channel 'hub_node4'"

./node3/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'node4_hub'"

./node3/use -e "START SLAVE FOR CHANNEL 'node4_hub'"

./node4/use -e "START SLAVE FOR CHANNEL 'hub_node4'"

# node node5 port: 8383

./node5/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'node4_node5'"

./node4/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8383, MASTER_AUTO_POSITION=1 for channel 'node5_node4'"

./node4/use -e "START SLAVE FOR CHANNEL 'node5_node4'"

./node5/use -e "START SLAVE FOR CHANNEL 'node4_node5'"

# node node6 port: 8384

./node6/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8382, MASTER_AUTO_POSITION=1 for channel 'node4_node6'"

./node6/use -e "START SLAVE FOR CHANNEL 'node4_node6'"

# node node7 port: 8385

./node7/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8380, MASTER_AUTO_POSITION=1 for channel 'node2_node7'"

./node2/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8385, MASTER_AUTO_POSITION=1 for channel 'node7_node2'"

./node7/use -e "START SLAVE FOR CHANNEL 'node2_node7'"

./node2/use -e "START SLAVE FOR CHANNEL 'node7_node2'"

# node node8 port: 8386

./node8/use -e "CHANGE MASTER TO master_host='127.0.0.1', master_user='rsandbox', master_password='rsandbox', master_port=8380, MASTER_AUTO_POSITION=1 for channel 'node2_node8'"

./node8/use -e "START SLAVE FOR CHANNEL 'node2_node8'"

# server: 1:

t1 # (created)

t2 # host2-host3-host1

t3 # host3-host1

t4 # host4-host3-host1

t7 # host7-host2-host3-host1

# server: 2:

t1 # host1-host3-host2

t2 # (created)

t3 # host3-host2

t4 # host4-host3-host2

t7 # host7-host2

# server: 3:

t1 # host1-host3

t2 # host2-host3

t3 # (created)

t4 # host4-host3

t7 # host7-host2-host3

# server: 4:

t1 # host1-host3-host4

t2 # host2-host3-host4

t3 # host3-host4

t4 # (created)

t5 # host5-host4

t7 # host7-host2-host3-host4

# server: 5:

t4 # host4-host5

t5 # (created)

# server: 6:

t4 # host4

# server: 7:

t1 # host1-host3-host2-host7

t2 # host2-host7

t3 # host3-host2-host7

t4 # host4-host3-host2-host7

t7 # (created)

# server: 8:

t1 # host1-host3-host2-host8

t2 # host2-host3-host2-host8

t3 # host3-host2-host8

t4 # host4-host3-host2-host8

t7 # host7-host2-host8

Conversely, in node #7 and #8 we get everything from every master, except from node #5, because it lacks the pass-through of log-slave-update in node #4, its connection to the cluster.

Figure 6 - A hybrid topology including 3 P2P, and 2 simple slaves

In a similar way, we can extend a all-masters P2P topology to have 2 more nodes in master-to-master replication with one node (Figure 6). The outcome of this deployment will be the same seen for Figure 5, with the difference that this topology requires more connections.

Figure 7 - A hybrid topology including 2 P2P clusters joined by a node.

Using the same principle, we can join two clusters by enabling log-slave-updates in one of their nodes and putting these enhanced nodes in P2P replication. In Figure 7 you see how you may join two all-masters P2P topologies.

Figure 8 - A hybrid topology including 2 star clusters joined by their hubs.

Joining two star topologies does not require any extra operations except running CHANGE MASTER TO, because the hubs are already enabled for dispatching events.

Figure 9 - A hybrid topology including 1 P2P cluster and a star cluster joined by a node.

Finally, you can join two different topologies by linking one node from each, like in figure 9.

Summing up

Star topologies are an interesting alternative to more robust deployments. They require more maintenance efforts in case of a failure, but they are agile and easy to expand.Setting up a star topology makes us understand the building blocks of complex topologies. Armed with this knowledge, we can create hybrid topologies that fit our needs.

No comments:

Post a Comment