We have been busy at Continuent. In addition to our usual work with high performance replication, we have addressed usability issues, since we know that a hard-to-use product, no matter how powerful, has low adoption. Thus, it is with some personal satisfaction that I can announce the release of Tungsten Replicator 2.0.3, which comes with several huge improvements in user friendliness. The new installation procedure is so user friendly, in fact, that I was able to build a sophisticated tungsten-sandbox with a 150-line shell script. (The corresponding features for MySQL Sandbox required 4,500 lines of Perl.)

Enough self celebration, though. Let's get started, as the title of this post suggests, with the practical steps.

Requirements

Before we begin, there are a few requirements to meet.

- You need to be on a Unix-like operating system. Our main choice is Linux. If you want to test on Mac OSX, it works, but we won't recommend it.

- Java JRE must be installed. And it must be the original one, not the Open JDK. Update: The requirement against OpenJDK has been lifted. It works fine in my tests.

- Ruby 1.8 must be installed. This is mainly needed during the installation phase only, but it is required nonetheless.

- The user account that will install and run Tungsten must have ssh access to the other hosts involved in the cluster.

- The above mentioned user must have sudo access. This is only needed if you want to use Tungsten Replicator to run backups that involve root access (like xtrabackup). We may lift this requirement later, but for now you need to enable it, at least during the installation, and remove the access when you are done.

- This user must also have read access to MySQL binary logs. Usually you achieve this by making sure that the binary logs are readable by users belonging to the "mysql" group, and by adding your user to that group.

- There must be a MySQL user for Tungsten replication. This user must have full access to the database server, with grant option.

- The MySQL server must have binary logging enabled.

- If you have MySQL native replication running, you must stop it.
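Some of the requirements above can be checked from the command line before you start. The following is only a minimal sketch: the function names are my own, it assumes passwordless ssh and a MySQL client that can already log in (for example through a ~/.my.cnf file), and it does not cover sudo or binary log permissions.

```shell
#!/bin/sh
# Preflight sketch for some of the requirements listed above.
# check_cmd, check_ssh and binlog_status are illustrative names,
# not part of Tungsten Replicator.

# Report whether a command is on PATH; return 1 if it is missing.
check_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok:      $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# Try a non-interactive ssh login to each host given as an argument.
check_ssh() {
    rc=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null >/dev/null 2>&1; then
            echo "ok:      ssh $host"
        else
            echo "failed:  ssh $host"
            rc=1
        fi
    done
    return $rc
}

# Print ON or OFF depending on whether binary logging is enabled on a host.
# Assumes the mysql client can connect without prompting for credentials.
binlog_status() {
    mysql -h "$1" -N -B -e "show variables like 'log_bin'" | awk '{print $2}'
}
```

With these functions loaded, something like `for c in java ruby ssh mysql; do check_cmd $c; done` followed by `check_ssh r1 r2 r3 r4` and `binlog_status r1` gives a quick overview (r1 to r4 are the hosts used later in this post).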

Getting the code and installing

The code is released in the downloads section of Tungsten's home. The current recommended version is 2.0.3, but if you like to be really up to date, we also publish a list of recent builds from our build server, which you can use to have a go at the replicator.

For this simple installation, I will use four servers from our server farm. The servers are named R1, R2, R3, and R4. The first good news of the new installation process is this: you need to install in one server only! More details follow.

First off, create a directory where you want to install. Use a non-root account. Just make sure that it's the same user in all the servers, and that such user can access the directory where you want to install. I am going to call this directory planet.

cd $HOME

for N in 1 2 3 4 ; do ssh r$N mkdir planet ; done

cd planet

wget http://tungsten-replicator.googlecode.com/files/tungsten-replicator-2.0.3.tar.gz

tar -xzf tungsten-replicator-2.0.3.tar.gz

cd tungsten-replicator-2.0.3

I already have a MySQL user named tungsten with password "mypwd" (but it can be anything you like, as long as it has the required privileges). Now we have all the components. If you have read the Tungsten documentation, please ignore the ./configure script. That is left for compatibility reasons, and will be deprecated soon. Instead, to install the cluster of our 4 servers, let's do the following:

export TUNGSTEN_BASE=$HOME/planet

./tools/tungsten-installer \

--master-slave \

--master-host=r1 \

--datasource-user=tungsten \

--datasource-password=mypwd \

--service-name=dragon \

--home-directory=$TUNGSTEN_BASE \

--cluster-hosts=r1,r2,r3,r4 \

--start

Some comments on this command:

- --master-slave is the installation mode (see below for more info).

- --service-name can be anything you want.

- --home-directory is where all the installation subdirectories will go.

- --cluster-hosts is the list of servers you want to install.

- --master-host is the host that will be installed as a master, while all the others will be slaves of that one.

If you have followed the instructions carefully, the installer will bring up the Tungsten cluster without any fuss, Unix style. If you hate silent installations, you can get the full monty by adding some options:

./tools/tungsten-installer \

--verbose \

--master-slave \

--master-host=r1 \

--datasource-user=tungsten \

--datasource-password=mypwd \

--service-name=dragon \

--home-directory=$TUNGSTEN_BASE \

--cluster-hosts=r1,r2,r3,r4 \

--start-and-report
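Since the quiet and the verbose invocations share almost all their options, it can be handy to keep the settings in variables. The sketch below only prints the command line, so it is safe to run anywhere; the variable and function names are my own, while the options are the ones used in this post.

```shell
#!/bin/sh
# Sketch: keep the installer settings in variables and print the resulting
# command line. Variable and function names are illustrative; the options
# are the ones shown in this post.

TUNGSTEN_BASE=${TUNGSTEN_BASE:-$HOME/planet}
MASTER=r1
HOSTS=r1,r2,r3,r4
SERVICE=dragon
DB_USER=tungsten
DB_PASSWORD=mypwd

# Print the installer command; pass "verbose" to get the chatty variant.
installer_cmd() {
    cmd="./tools/tungsten-installer"
    if [ "${1:-}" = "verbose" ]; then
        cmd="$cmd --verbose"
        start="--start-and-report"
    else
        start="--start"
    fi
    echo "$cmd --master-slave --master-host=$MASTER" \
        "--datasource-user=$DB_USER --datasource-password=$DB_PASSWORD" \
        "--service-name=$SERVICE --home-directory=$TUNGSTEN_BASE" \
        "--cluster-hosts=$HOSTS $start"
}

installer_cmd          # quiet installation
installer_cmd verbose  # verbose installation with a final report
```

Once the printed command looks right, you can paste it into the shell from inside the tungsten-replicator-2.0.3 directory.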

If you run the installer in verbose mode, you will see an extremely long list of validation checks that the installer performs on your current server and on the ones that are listed in the --cluster-hosts option.

If everything went well, you will find the following directories in $HOME/planet (for all servers in your cluster):

- configs, containing the configuration file created by the installer. This file describes your cluster.

- releases, containing the Tungsten binaries.

- thl, containing Tungsten's Transaction History Logs. These logs are like MySQL binary logs, but with much more metadata, including a global transaction ID, which is missing in MySQL native replication.

- relay, which should be empty, unless you install in "direct" mode (see below.)

- tungsten, which is a symlink to the Tungsten directory inside releases.
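A quick way to confirm that layout on a host is to loop over the expected names. This is a minimal sketch; check_layout is a helper name I made up, not a Tungsten tool, and it only looks at the top-level entries listed above.

```shell
#!/bin/sh
# Sketch: check that the entries created by the installer exist under a
# given base directory. check_layout is an illustrative helper name.

check_layout() {
    base=$1
    rc=0
    for entry in configs releases thl relay tungsten; do
        if [ -e "$base/$entry" ]; then
            echo "found:   $base/$entry"
        else
            echo "missing: $base/$entry"
            rc=1
        fi
    done
    # tungsten is expected to be a symlink into the releases directory
    if [ -e "$base/tungsten" ] && [ ! -L "$base/tungsten" ]; then
        echo "warning: $base/tungsten is not a symlink"
        rc=1
    fi
    return $rc
}
```

For the installation in this post, you would run `check_layout $HOME/planet` on each of the four hosts.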

In addition to the above mentioned directories, Tungsten Replicator creates a database for each service. Since we have only one service in this topology, you will find a database named "tungsten_dragon". (If you have called your service "bunny", you will instead find "tungsten_bunny".) Inside this database there is the replication metadata necessary for making the servers fault tolerant. Only a small amount of data is kept in that database. It roughly corresponds to what you get from the .info files in MySQL native replication.

To test that the system is OK, let's find our tools. The first one is trepctl, which, among other things, can give us an overview of the running services.

cd $HOME/planet

./tungsten/tungsten-replicator/bin/trepctl services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 0

appliedLatency : 1.152

role : slave

serviceName : dragon

serviceType : local

started : true

state : ONLINE

Finished services command...

./tungsten/tungsten-replicator/bin/trepctl -host r1 services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 0

appliedLatency : 0.936

role : master

serviceName : dragon

serviceType : local

started : true

state : ONLINE

Finished services command...

mysql -h r1 -e 'create schema if not exists test'

mysql -h r1 -e 'create table test.t1 (i int)'

./tungsten/tungsten-replicator/bin/trepctl services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 2

appliedLatency : 0.155

role : slave

serviceName : dragon

serviceType : local

started : true

state : ONLINE

Finished services command...

./tungsten/tungsten-replicator/bin/trepctl -host r1 services

Processing services command...

NAME VALUE

---- -----

appliedLastSeqno: 2

appliedLatency : 0.044

role : master

serviceName : dragon

serviceType : local

started : true

state : ONLINE

Finished services command...

After the installation, trepctl reported the last applied sequence number (appliedLastSeqno) as 0. Following the execution of two commands in the master, that number became 2. If you want to know more about what was happening, you can use the thl command. This corresponds roughly to using mysqlbinlog with MySQL native replication logs.

./tungsten/tungsten-replicator/bin/thl -service dragon list | less

SEQ# = 1 / FRAG# = 0 (last frag)

- TIME = 2011-06-09 14:51:23.0

- EVENTID = 000002:0000000000000514;197609

- SOURCEID = qa.r1.continuent.com

- STATUS = COMPLETED(2)

- METADATA = [mysql_server_id=10;service=dragon;shard=test]

- TYPE = com.continuent.tungsten.replicator.event.ReplDBMSEvent

- OPTIONS = [##charset = ISO8859_1, createOrDropDB = , autocommit = 1, sql_auto_is_null = 1, foreign_key_checks = 1, unique_checks = 1, sql_mode = '', character_set_client = 8, collation_connection = 8, collation_server = 8]

- SQL(0) = create schema if not exists test /* ___SERVICE___ = [dragon] */

SEQ# = 2 / FRAG# = 0 (last frag)

- TIME = 2011-06-09 14:51:30.0

- EVENTID = 000002:0000000000000601;197610

- SOURCEID = qa.r1.continuent.com

- STATUS = COMPLETED(2)

- METADATA = [mysql_server_id=10;service=dragon;shard=test]

- TYPE = com.continuent.tungsten.replicator.event.ReplDBMSEvent

- OPTIONS = [##charset = ISO8859_1, autocommit = 1, sql_auto_is_null = 1, foreign_key_checks = 1, unique_checks = 1, sql_mode = '', character_set_client = 8, collation_connection = 8, collation_server = 8]

- SCHEMA =

- SQL(0) = create table test.t1 (i int) /* ___SERVICE___ = [dragon] */
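If you would rather not compare the trepctl outputs by eye, the fields can be extracted with a little awk. What follows is a sketch under a few assumptions: the output has one "name : value" pair per line as in the listings above, there is a single service per replicator, and the helper names (trepctl_field, in_sync) and the TREPCTL variable are my own.

```shell
#!/bin/sh
# Sketch: pull single fields out of "trepctl services" output and compare
# the last applied sequence number on two hosts.
# trepctl_field, in_sync and TREPCTL are illustrative names.

TREPCTL=${TREPCTL:-./tungsten/tungsten-replicator/bin/trepctl}

# Read "trepctl services" output on stdin and print the value of one field.
trepctl_field() {
    awk -F: -v name="$1" '
        { key = $1; gsub(/[ \t]/, "", key) }
        key == name { val = $2; gsub(/^[ \t]+|[ \t]+$/, "", val); print val }
    '
}

# Succeed if master ($1) and slave ($2) report the same appliedLastSeqno.
in_sync() {
    master_seqno=$("$TREPCTL" -host "$1" services | trepctl_field appliedLastSeqno)
    slave_seqno=$("$TREPCTL" -host "$2" services | trepctl_field appliedLastSeqno)
    echo "master=$master_seqno slave=$slave_seqno"
    [ -n "$master_seqno" ] && [ "$master_seqno" = "$slave_seqno" ]
}
```

From $HOME/planet, `in_sync r1 r2 && echo "r2 has caught up"` would then tell you whether the slave has applied everything the master has logged so far.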

Once we are satisfied that replication is working, we can clean up the cluster and try other installation experiments. To clean up a cluster, you need to do the following:

- stop the replicator in all servers.

for N in 1 2 3 4; do ssh r$N $PWD/tungsten/tungsten-replicator/bin/replicator stop; done

- remove the thl files from all servers.

- remove the tungsten_SERVICE_NAME database from all mysql servers

- run a "reset master" in the master database

- remove the directories created by the installer in all servers
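The steps above can be sketched as a dry run that only prints the commands, so that you can review them before anything gets deleted. The function name, its argument order, and the exact rm and drop statements are my assumptions about how you might carry out each step; adapt them to your hosts.

```shell
#!/bin/sh
# Dry-run sketch of the clean-up steps listed above: it only PRINTS the
# commands. cleanup_cmds and its argument order are illustrative; review
# the output carefully before running any of it.
#
# usage: cleanup_cmds SERVICE BASE_DIR MASTER_HOST HOST...

cleanup_cmds() {
    service=$1; base=$2; master=$3
    shift 3
    # 1. stop the replicator in all servers
    for host in "$@"; do
        echo "ssh $host $base/tungsten/tungsten-replicator/bin/replicator stop"
    done
    # 2. and 3. remove the THL files and the service database
    for host in "$@"; do
        echo "ssh $host 'rm -rf $base/thl/*'"
        echo "mysql -h $host -e 'drop schema if exists tungsten_$service'"
    done
    # 4. reset the binary logs in the master
    echo "mysql -h $master -e 'reset master'"
    # 5. remove the directories created by the installer
    for host in "$@"; do
        echo "ssh $host 'cd $base && rm -rf configs releases thl relay tungsten'"
    done
}

cleanup_cmds dragon "$HOME/planet" r1 r1 r2 r3 r4
```

Printing instead of executing is a deliberate choice here: "reset master" and "rm -rf" are not commands you want to run against the wrong host by accident.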

Installation types

The procedure described above was, until a few months ago, the only thing you could do with Tungsten. Now you can broaden your horizons with a wider range of possibilities.

Master/slave is of course the main option, and it's the one that you have seen in the previous section. This method gives you the full set of Tungsten features and performance. It is the recommended method for production use and for benchmarking. In this scenario, the Tungsten replicator on the master will extract transactions from the binary log, transfer them to the THL, and share it with the slaves. The slaves will read from the THL and apply the transactions to the database. There are a few more steps in between, but for the sake of brevity I will skip them. You can have a look at Robert Hodges' blog for more info.

Slave "direct" is the alternative that you can use in production, and it's been designed to satisfy users who only want some particular benefits on the slave side, and don't care about global transaction IDs. If you are looking at parallel apply, this is probably a setup that you want to try. In this scenario, there is no replicator on the master. The slave pulls data remotely from the binary logs, copies them locally, and extracts data to the THL. Here's an example of how to start a slave-direct system:

./tools/tungsten-installer \

--direct \

--master-host=r1 \

--slave-host=r4 \

--master-user=tungsten \

--slave-user=tungsten \

--master-password=secret \

--slave-password=secret \

--service-name=Castor \

--thl-port=21124 \

--rmi-port=10104 \

--channels=5 \

--home-directory=$TUNGSTEN_BASE \

--start-and-report

If your purpose is testing Tungsten, the Tungsten Sandbox is probably what you should try. This system is based on MySQL Sandbox, a framework that lets you install more than one MySQL server in the same host. Building on top of MySQL Sandbox, and leveraging the new flexibility in the Tungsten installer, tungsten-sandbox allows you to build a master/slave system inside a single host.

Let's give it a try. You need to have MySQL Sandbox installed, and at least one MySQL tarball expanded under $HOME/opt/mysql/X.X.XX (where X.X.XX is the MySQL version, such as 5.5.12).

cd $HOME/planet

mkdir sb

cd tungsten-replicator-2.0.3

wget http://tungsten-replicator.googlecode.com/files/tungsten-sandbox

./tungsten-sandbox -h

USAGE: ./tungsten-sandbox [flags] args

flags:

-n,--nodes: how many nodes to install (default: 3)

-m,--mysql_version: which MySQL version to use (default: '5.1.56')

-t,--tungsten_base: where to install the sandbox (default: '/home/tungsten/tsb2')

-d,--group_dir: sandbox group directory name (default: 'tr_dbs')

-s,--service: how the service is named (default: 'tsandbox')

-P,--base_port: port base for MySQL sandbox nodes (default: 710)

-l,--thl_port: port for the THL service (default: 1211)

-r,--rmi_port: port for the RMI service (default: 1010)

-v,--[no]version: show Tungsten sandbox version (default: false)

-h,--[no]help: show Tungsten sandbox help (default: false)

In my server, I have already expanded MySQL 5.5.10, and I want to install inside $HOME/tsb. So, here is what I do:

./tungsten-sandbox -m 5.5.10 -t ~/tsb

This command installs three instances of MySQL under $HOME/sandboxes and three of Tungsten under $HOME/tsb. Inside this directory, in addition to the running instances, we find some more goodies:

- clear_all send_kill_all start_all status_all stop_all use_all : symlinks to the corresponding commands in MySQL sandbox

- db1 db2 db3: these are the tungsten instances.

- n1 n2 n3: quick links to access each MySQL node

- replicator_all trepctl_all: utilities that run "replicator" or "trepctl" for each node with the arguments provided on the command line

Additionally, there are a few scripts inside each Tungsten instance in the sandbox:

- trepctl: a link to the deep down trepctl

- thl: a link to the thl utility

- show_log: a quick way of showing the replicator log
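With these shortcuts, a replication smoke test inside the sandbox takes only a few lines. This is a sketch under assumptions: that n1 and n2 pass their arguments to the mysql client (as MySQL Sandbox shortcut scripts usually do), that node 1 is the master, and that one second is enough for the event to be applied. The function name and the test.smoke table are my own.

```shell
#!/bin/sh
# Sketch: create a table through node 1 and look for it on node 2.
# Assumes n1/n2 forward their arguments to the mysql client and that
# node 1 is the master; sandbox_smoke_test is an illustrative name.

sandbox_smoke_test() {
    base=$1
    "$base/n1" -e 'create schema if not exists test' || return 1
    "$base/n1" -e 'create table if not exists test.smoke (i int)' || return 1
    sleep 1   # give the replicator a moment to apply the events
    "$base/n2" -N -B -e 'show tables from test' | grep -q '^smoke$'
}
```

For the sandbox built above, `sandbox_smoke_test ~/tsb && ~/tsb/trepctl_all services` would run the check and then show the state of every node.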

Since this is a tool for testing, removing it is going to be easy.

~/tsb/replicator_all stop

~/tsb/clear_all

rm -rf ~/tsb/*

A final method of installing is a sandbox with the slave-direct method. There is no dedicated script for this method, but thanks to the new installer, you can get the job done quite easily:

export NODE_OPTIONS='-c innodb_flush_log_at_trx_commit=2 -c max_allowed_packet=48M'

make_multiple_sandbox --group_directory=tr_dbs --sandbox_base_port=7100 5.5.10

TUNGSTEN_BASE=$HOME/tinstall/tsb/

./tools/tungsten-installer \

--direct \

--master-host=127.0.0.1 \

--master-port=7101 \

--slave-host=db2 \

--slave-port=7102 \

--master-user=root \

--slave-user=root \

--master-password=msandbox \

--slave-password=msandbox \

--master-log-directory=$HOME/sandboxes/tr_dbs/node1/data \

--service-name=Castor \

--thl-port=12112 \

--channels=5 \

--rmi-port=20000 \

--home-directory=$TUNGSTEN_BASE

./tools/tungsten-installer \

--direct \

--master-host=127.0.0.1 \

--master-port=7101 \

--slave-host=db3 \

--slave-port=7103 \

--master-user=root \

--slave-user=root \

--master-password=msandbox \

--slave-password=msandbox \

--master-log-directory=$HOME/sandboxes/tr_dbs/node1/data \

--service-name=Pollux \

--thl-port=22112 \

--channels=1 \

--rmi-port=20000 \

--home-directory=$TUNGSTEN_BASE \

--relay-directory=$TUNGSTEN_BASE/relay --start-and-report

This script creates two services (Castor and Pollux), with only one instance of Tungsten replicator, with all the servers (MySQL and Tungsten ones) in the same host.

Conclusions

There should be much more to say, but I will leave it for the coming days. In the meantime, I encourage everyone to try the new Tungsten and submit bug reports when things don't work as expected. As always, happy hacking!

P.S. Today at 10am PT there is a webinar on this very topic!

Notice that the recipe works in exactly the same way for two distinct clusters on separate hosts. We are using one sandbox to minimize the number of hosts involved.

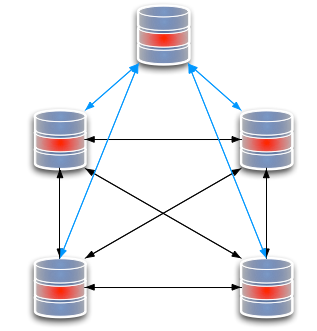

To install the bridge between the two cluster, you go to the directory where Tungsten was installed for the master in the sandbox, and run ./tools/configure-service. The purpose of this command is to create a second service in the master, a service that will act as a slave, and fetch data from the other cluster.

To do so, we need to provide some information to the service installer, the most important of which are:




