Previous episodes:

Figure 1 - Single applier

Until 2011, there was little remedy to this problem. Then we saw the first implementation of parallel apply, provided by Tungsten Replicator. The parallelism is schema-based, and provides 5x improvement on the slave performance.

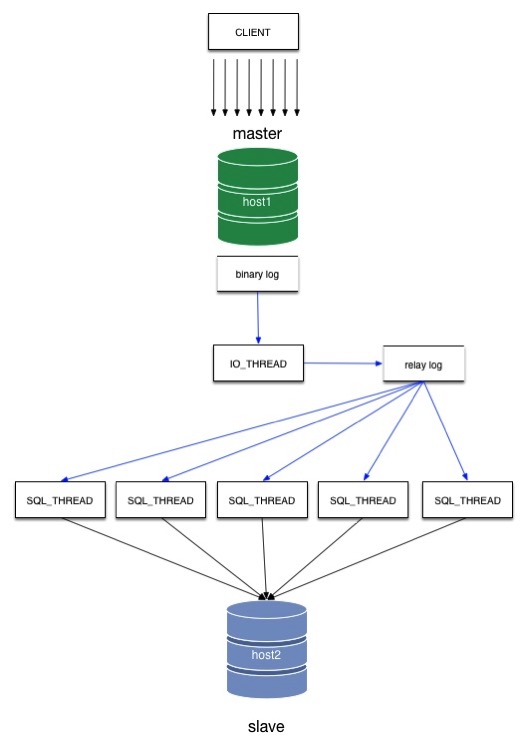

Shortly after that, the same algorithm of schema-based parallel applier was implemented —with the same performance gain— in MySQL 5.6 (Figure 2). This implementation is valuable for those organizations that have data split by schemas, which can happen for those companies that have adopted sharding of data and split their logical chunks in different schemas, or for those companies that run multi-tenant services, where splitting data by schema is a necessity. This solution does not meet the needs of all users. Many systems are based on a single very active schema and perhaps a few ancillary ones with minimal traffic. For those users, parallel replication by schema is useless.

Figure 2 - Parallel applier

In more recent times, we have seen three more implementations:

To monitor, however, we need some action to look at. In the same mysql-replicator-samples there are a few scripts that can generate load for multiple databases (multi_inserts.sh) or for many tables in the same database (multi_inserts_one_db.sh). We will use the first one in MySQL 5.7 to check the default algorithm (parallelism by schema) and the second script for mariadb, again to test its default algorithm, which can run parallel appliers in the same schema. As I mentioned before, we are not comparing performance here: we just want to see what we can detect when parallel replication is running.

The scripts are nothing fancy. They will generate lots of concurrent inserts in many tables. This is enough to see all the parallel workers busy. You can use your favorite stress test instead of these simple scripts.

Next, we look at the table dedicated to parallel replication in performance_schema.

Finally, we have a look at the output of SHOW SLAVE STATUS. In the past episodes, here is where we had the most comprehensive set of information. But with parallel replication we are going to have a surprise.

Let's have a look at SHOW SLAVE STATUS. The good news is that we don't have the horrible crowd seen in MySQL 5.7. The bad news is that we have just as much information that we would get with a single thread. And let's not forget that the only info about GTID in the slave status is related to the IO thread. For the SQL threads (in this case, we have 10) we need to look at the above table or the GTID variables.

In MariaDB, the lack of monitoring data is so acute that I would be very reluctant to use or recommend it for production.

It's a start. I hope that both teams will pause a bit in their frenzy to add more features, and will strengthen up the existing ones instead.

I am speaking at Percona Live Amsterdam 2015 on September 22, and I will cover all these topics with examples.

I would like to remind everyone that there is a public project on GitHub with mysql-replication-samples. I am sure there could be plenty of other scripts that could be added. Participation is welcome!

- MySQL replication monitoring 101

- MySQL replication in action - Part 1: GTID & Co

- MySQL replication in action - Part 2 - Fan-in topology

- MySQL replication in action - Part 3 - All-masters P2P topology

- MySQL replication in action - Part 4 - star and hybrid topologies

Parallel replication overview

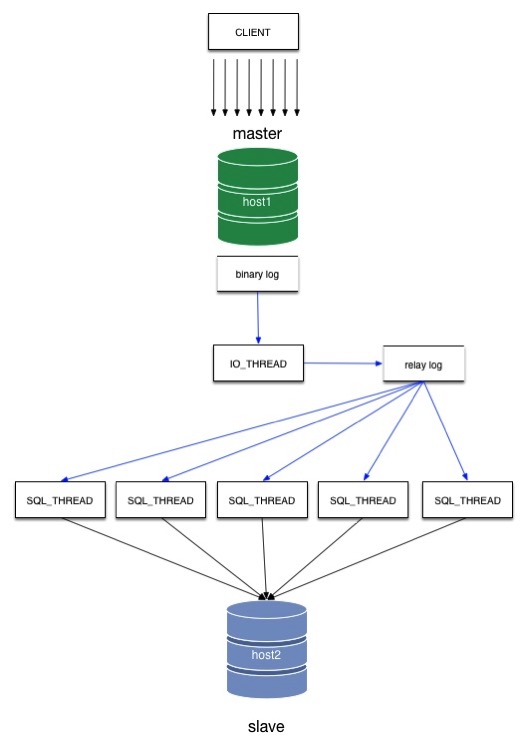

One of the main grievance of replication users is that, while a well tuned master server can handle thousands of concurrent operations, an equally tuned slave is constrained to work on a single thread. In Figure 1, we see the schematics of this paradigm. Multiple operations on the master are executed simultaneously and saved to the binary log. The slave IO thread copies the binary log events to a local log, and on such log the SQL thread executes the events on the slave database. When the master is very active, chances are that the slave lags behind, causing hatred and nightmares to the DBAs.

Figure 1 - Single applier

Until 2011, there was little remedy to this problem. Then we saw the first implementation of parallel apply, provided by Tungsten Replicator. The parallelism is schema-based, and provides 5x improvement on the slave performance.

Shortly after that, the same algorithm of schema-based parallel applier was implemented —with the same performance gain— in MySQL 5.6 (Figure 2). This implementation is valuable for those organizations that have data split by schemas, which can happen for those companies that have adopted sharding of data and split their logical chunks in different schemas, or for those companies that run multi-tenant services, where splitting data by schema is a necessity. This solution does not meet the needs of all users. Many systems are based on a single very active schema and perhaps a few ancillary ones with minimal traffic. For those users, parallel replication by schema is useless.

Figure 2 - Parallel applier

In more recent times, we have seen three more implementations:

- In MariaDB 10, parallel appliers are based on information generated by the master when using group commit;

- In MySQL 5.7, a new parallelism mode was introduced, based on the events logical clock: the events that have the same timestamp are safe to execute in parallel.

- In MariaDB 10.1, there is an optimistic mode that breaks the commit order that was guaranteed by the group-commit algorithm, and allows higher performance.

Setting up Parallel replication

Compared to what we had to do for multi-source, the setup is not hard. There is a variable that we need to change. The name is different in MySQL 5.6/5.7 and MariaDB 10. As it happens for multi source, we can set-up the functionality using one of the mysql-replication-samples scripts on GitHub.Setup in MySQL 5.7

When we run the script install_parallel.sh, what happens is that the slave gets stopped, the parallel workers variable is increased, and then the slave restarts. A look at show processlist in the slave tells us that there are now 10 processes waiting for work.+------------------------+-------+

| Variable_name | Value |

+------------------------+-------+

| slave_parallel_workers | 10 |

+------------------------+-------+

+----+-------------+-----------+------+---------+------+---------------------------------------+------------------+

| Id | User | Host | db | Command | Time | State | Info |

+----+-------------+-----------+------+---------+------+---------------------------------------+------------------+

| 9 | system user | | NULL | Connect | 0 | Waiting for master to send event | NULL |

| 10 | system user | | NULL | Connect | 0 | Reading event from the relay log | NULL |

| 11 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 12 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 13 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 14 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 15 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 16 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 17 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 18 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 19 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 20 | system user | | NULL | Connect | 0 | Waiting for an event from Coordinator | NULL |

| 22 | msandbox | localhost | NULL | Query | 0 | starting | show processlist |

+----+-------------+-----------+------+---------+------+---------------------------------------+------------------+

setup in MariaDB 10

Same story in MariaDB 10. Stop the slave, change the variable, restart the slave. The process list shows willing workers.+------------------------+-------+

| Variable_name | Value |

+------------------------+-------+

| slave_parallel_threads | 10 |

+------------------------+-------+

+----+-------------+-----------+------+---------+------+------------------------------------+------------------+----------+

| Id | User | Host | db | Command | Time | State | Info | Progress |

+----+-------------+-----------+------+---------+------+------------------------------------+------------------+----------+

| 10 | system user | | NULL | Connect | 0 | Waiting for master to send event | NULL | 0.000 |

| 11 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 12 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 13 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 14 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 15 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 16 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 17 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 18 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 19 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 20 | system user | | NULL | Connect | 0 | Waiting for work from SQL thread | NULL | 0.000 |

| 21 | system user | | NULL | Connect | 0 | Slave has read all relay log; wait | NULL | 0.000 |

| 23 | msandbox | localhost | NULL | Query | 0 | init | show processlist | 0.000 |

+----+-------------+-----------+------+---------+------+------------------------------------+------------------+----------+

Monitoring parallel replication

After the setup, the interesting operations can start. We need to know what we can get from the monitoring facilities.To monitor, however, we need some action to look at. In the same mysql-replicator-samples there are a few scripts that can generate load for multiple databases (multi_inserts.sh) or for many tables in the same database (multi_inserts_one_db.sh). We will use the first one in MySQL 5.7 to check the default algorithm (parallelism by schema) and the second script for mariadb, again to test its default algorithm, which can run parallel appliers in the same schema. As I mentioned before, we are not comparing performance here: we just want to see what we can detect when parallel replication is running.

The scripts are nothing fancy. They will generate lots of concurrent inserts in many tables. This is enough to see all the parallel workers busy. You can use your favorite stress test instead of these simple scripts.

Monitoring in MySQL 5.7

Let's start with MySQL 5.7, where we have two tables dedicated to parallel replication monitoring. The first one, in the mysql schema, can show the progress of work on binary logs and relay logs. There is no mention of GTIDs. And there is no mention of database. For a system where parallelism can happen by schema, this looks like insufficient design. Another thing that is important to notice is the field Checkpoint_group_bitmap, of which I ignore the purpose, but is very prominent when you select the table contents, because this column is filled with non-printable characters. For this reason, we will select just a few columns, to see the basic progress.slave1 [localhost] {msandbox} (mysql) > desc slave_worker_info;

+----------------------------+---------------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------------------------+---------------------+------+-----+---------+-------+

| Id | int(10) unsigned | NO | PRI | NULL | |

| Relay_log_name | text | NO | | NULL | |

| Relay_log_pos | bigint(20) unsigned | NO | | NULL | |

| Master_log_name | text | NO | | NULL | |

| Master_log_pos | bigint(20) unsigned | NO | | NULL | |

| Checkpoint_relay_log_name | text | NO | | NULL | |

| Checkpoint_relay_log_pos | bigint(20) unsigned | NO | | NULL | |

| Checkpoint_master_log_name | text | NO | | NULL | |

| Checkpoint_master_log_pos | bigint(20) unsigned | NO | | NULL | |

| Checkpoint_seqno | int(10) unsigned | NO | | NULL | |

| Checkpoint_group_size | int(10) unsigned | NO | | NULL | |

| Checkpoint_group_bitmap | blob | NO | | NULL | |

| Channel_name | char(64) | NO | PRI | NULL | |

+----------------------------+---------------------+------+-----+---------+-------+

13 rows in set (0.00 sec)

slave1 [localhost] {msandbox} (mysql) > select ID,Relay_log_name,Relay_log_pos,Master_log_name,Master_log_pos from slave_worker_info;

+----+----------------------+---------------+------------------+----------------+

| ID | Relay_log_name | Relay_log_pos | Master_log_name | Master_log_pos |

+----+----------------------+---------------+------------------+----------------+

| 1 | ./mysql-relay.000003 | 33029530 | mysql-bin.000002 | 33029317 |

| 2 | ./mysql-relay.000003 | 33066066 | mysql-bin.000002 | 33065853 |

| 3 | ./mysql-relay.000003 | 33111090 | mysql-bin.000002 | 33110877 |

| 4 | ./mysql-relay.000003 | 33107225 | mysql-bin.000002 | 33107012 |

| 5 | ./mysql-relay.000003 | 33059630 | mysql-bin.000002 | 33059417 |

| 6 | ./mysql-relay.000003 | 33056541 | mysql-bin.000002 | 33056328 |

| 7 | ./mysql-relay.000003 | 33086391 | mysql-bin.000002 | 33086178 |

| 8 | ./mysql-relay.000003 | 33018457 | mysql-bin.000002 | 33018244 |

| 9 | ./mysql-relay.000003 | 33052167 | mysql-bin.000002 | 33051954 |

| 10 | ./mysql-relay.000003 | 33044450 | mysql-bin.000002 | 33044237 |

+----+----------------------+---------------+------------------+----------------+

10 rows in set (0.00 sec)

Next, we look at the table dedicated to parallel replication in performance_schema.

slave1 [localhost] {msandbox} (mysql) > use performance_schema

Database changed

slave1 [localhost] {msandbox} (performance_schema) > desc replication_applier_status_by_worker;

+-----------------------+---------------------+------+-----+-------------------+-----------------------------+

| Field | Type | Null | Key | Default | Extra |

+-----------------------+---------------------+------+-----+-------------------+-----------------------------+

| CHANNEL_NAME | char(64) | NO | | NULL | |

| WORKER_ID | bigint(20) unsigned | NO | | NULL | |

| THREAD_ID | bigint(20) unsigned | YES | | NULL | |

| SERVICE_STATE | enum('ON','OFF') | NO | | NULL | |

| LAST_SEEN_TRANSACTION | char(57) | NO | | NULL | |

| LAST_ERROR_NUMBER | int(11) | NO | | NULL | |

| LAST_ERROR_MESSAGE | varchar(1024) | NO | | NULL | |

| LAST_ERROR_TIMESTAMP | timestamp | NO | | CURRENT_TIMESTAMP | on update CURRENT_TIMESTAMP |

+-----------------------+---------------------+------+-----+-------------------+-----------------------------+

slave1 [localhost] {msandbox} (performance_schema) > select WORKER_ID, THREAD_ID , SERVICE_STATE , LAST_SEEN_TRANSACTION , LAST_ERROR_MESSAGE from replication_applier_status_by_worker;

+-----------+-----------+---------------+---------------------------------------------+--------------------+

| WORKER_ID | THREAD_ID | SERVICE_STATE | LAST_SEEN_TRANSACTION | LAST_ERROR_MESSAGE |

+-----------+-----------+---------------+---------------------------------------------+--------------------+

| 1 | 40 | ON | 00013253-1111-1111-1111-111111111111:133621 | |

| 2 | 41 | ON | 00013253-1111-1111-1111-111111111111:133699 | |

| 3 | 42 | ON | 00013253-1111-1111-1111-111111111111:133826 | |

| 4 | 43 | ON | 00013253-1111-1111-1111-111111111111:133919 | |

| 5 | 44 | ON | 00013253-1111-1111-1111-111111111111:133686 | |

| 6 | 45 | ON | 00013253-1111-1111-1111-111111111111:133688 | |

| 7 | 46 | ON | 00013253-1111-1111-1111-111111111111:133770 | |

| 8 | 47 | ON | 00013253-1111-1111-1111-111111111111:133494 | |

| 9 | 48 | ON | 00013253-1111-1111-1111-111111111111:133731 | |

| 10 | 49 | ON | 00013253-1111-1111-1111-111111111111:133763 | |

+-----------+-----------+---------------+---------------------------------------------+--------------------+

10 rows in set (0.00 sec)

Finally, we have a look at the output of SHOW SLAVE STATUS. In the past episodes, here is where we had the most comprehensive set of information. But with parallel replication we are going to have a surprise.

slave1 [localhost] {msandbox} (performance_schema) > SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 13253

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 38675840

Relay_Log_File: mysql-relay.000003

Relay_Log_Pos: 35124216

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 35124003

Relay_Log_Space: 38676469

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 30

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_UUID: 00013253-1111-1111-1111-111111111111

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Reading event from the relay log

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set: 00013253-1111-1111-1111-111111111111:1-150420

Executed_Gtid_Set: 00013253-1111-1111-1111-111111111111:1-136616:136618-136624:136626-136632:

136634-136635:136637-136640:136642-136644:136646-136648:136650-136670:136672-136681:136683-136687:

136689-136703:136706-136716:136718-136720:136722-136723:136726-136727:136730:136734-136735:

136737:136739-136741:136743-136744:136746-136747:136749:136752-136753:136755:136757:136762-136763:

136765-136766:136768-136771:136773-136774:136777:136783:136785:136787-136792:136794-136795:136797:

136801:136806:136809:136812-136814:136817:136821:136824:136826:136833:136835-136837:136841-136843:

136846:136852:136862-136864:136871:136874:136878:136881:136884:136897:136901:136908:136913:136915:

136926:136928:136940:136948:136951:136968:136986:136989:136992:136995

Auto_Position: 1

Replicate_Rewrite_DB:

Channel_Name:

1 row in set (0.00 sec)

Monitoring with MariaDB 10

In MariaDb there is only one table where we can follow progress. Unlike the one in MySQL 5.6/7, there is no fixed number of rows. This is the same table where operations with GTID are stored with single thread replication. The rows are added or pruned according to the traffic in the various channels.

slave1 [localhost] {msandbox} (mysql) > select * from gtid_slave_pos;

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 0 | 28569 | 1 | 28569 |

| 0 | 28570 | 1 | 28570 |

+-----------+--------+-----------+--------+

2 rows in set (0.00 sec)

slave1 [localhost] {msandbox} (mysql) > select * from gtid_slave_pos;

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 0 | 42786 | 1 | 42786 |

| 0 | 42787 | 1 | 42787 |

| 0 | 42788 | 1 | 42788 |

| 0 | 42789 | 1 | 42789 |

+-----------+--------+-----------+--------+

4 rows in set (0.00 sec)

slave1 [localhost] {msandbox} (mysql) > select * from gtid_slave_pos;

+-----------+--------+-----------+--------+

| domain_id | sub_id | server_id | seq_no |

+-----------+--------+-----------+--------+

| 0 | 46807 | 1 | 46807 |

| 0 | 46808 | 1 | 46808 |

+-----------+--------+-----------+--------+

2 rows in set (0.00 sec)

Let's have a look at SHOW SLAVE STATUS. The good news is that we don't have the horrible crowd seen in MySQL 5.7. The bad news is that we have just as much information that we would get with a single thread. And let's not forget that the only info about GTID in the slave status is related to the IO thread. For the SQL threads (in this case, we have 10) we need to look at the above table or the GTID variables.

slave1 [localhost] {msandbox} ((none)) > SHOW SLAVE STATUS\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 127.0.0.1

Master_User: rsandbox

Master_Port: 25030

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 958300

Relay_Log_File: mysql-relay.000002

Relay_Log_Pos: 956223

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 958300

Relay_Log_Space: 956516

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_SSL_Crl:

Master_SSL_Crlpath:

Using_Gtid: Current_Pos

Gtid_IO_Pos: 0-1-5826

1 row in set (0.00 sec)

Small improvements

About two years ago I wrote a post comparing parallel replication in MySQL 5.6 and Tungsten Replicator. Most of what was said there is still valid, but I want to acknowledge a small improvement. When an error happened in parallel replication, the message shown in my article said:Last_SQL_Error: Error 'Duplicate entry '1' for key 'PRIMARY'' on query.

Default database: 'test'. Query: 'insert into t1 values (1)'

Last_SQL_Error: Worker 8 failed executing transaction '00021891-1111-1111-1111-111111111111:90860'

at master log mysql-bin.000002, end_log_pos 23636368; Error 'Duplicate entry '340' for key 'PRIMARY'' on query.

Default database: 'db1'. Query: 'insert into t7 values (340, null)'

Summing up

Of all the new features, parallel replication is probably the most needed, and the one that falls short in matter of usability. MySQL 5.7 offers what looks at first sight a large amount of monitoring data, but in the end is not enough to run operations confidently.In MariaDB, the lack of monitoring data is so acute that I would be very reluctant to use or recommend it for production.

It's a start. I hope that both teams will pause a bit in their frenzy to add more features, and will strengthen up the existing ones instead.

What's next: Percona Live in Amsterdam!

This is the last episode in the series. There is much more to say about replication features, old and new, but the focus of these articles was on monitoring capabilities, and we've seen enough.I am speaking at Percona Live Amsterdam 2015 on September 22, and I will cover all these topics with examples.

I would like to remind everyone that there is a public project on GitHub with mysql-replication-samples. I am sure there could be plenty of other scripts that could be added. Participation is welcome!

2 comments:

The gaps you are seeing with 5.6 looks similar to something people with 5.6+GTID are still experiencing:

http://bugs.mysql.com/bug.php?id=69943

or

https://bugs.mysql.com/bug.php?id=69758

Did you run a checksum between the master and slave ?

@Joffrey,

Yes, at the end of the test, I ran a checksum, and it matched.

Post a Comment