- Automatic failover

- Cluster console, and One-Command-Operations

- Transparent connections

- No VIPs !!!

- Multi site switch and failover

Automatic failover

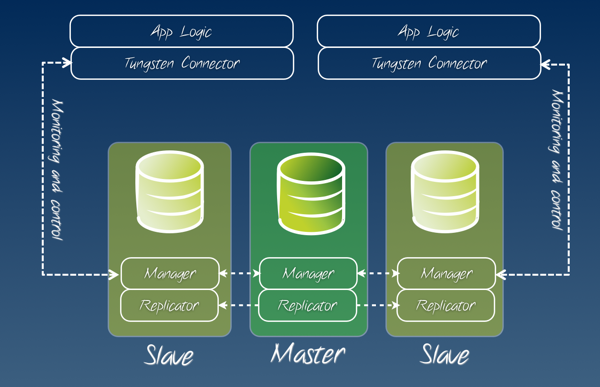

This is probably the most amazing feature of all. It combines the same efficient replication technology seen in Tungsten Replicator, which uses a global transaction ID to allow a seamless failover, with a management system made of components that communicate with each other and can replace a failed master within seconds, even under heavy load. All this happens without the application experiencing more trouble than a few seconds of delay (see transparent connections below).

This feature is customizable. If the manager is in "automatic" mode, it will replace a failed master without manual intervention, and it will try to put online every element that goes offline. In "manual" mode, however, it will let the user take control of operations as needed.

Cluster Console, and One-Command-Operations

Tungsten Enterprise comes with a text-based console that gives immediate access to the cluster information, and lets users perform maintenance without needing the inner knowledge required to carry out the tasks. Promoting a slave to master (a planned "switch", as opposed to an unplanned "failover") is just one command, even though behind the scenes the Tungsten Manager runs a dozen commands to complete the task safely. Backup and restore are also one command each. And so are all the dozens of administrative tasks that the Tungsten Manager allows the user to perform. The console comes with a comprehensive help that explains all commands in detail. The console allows the DBA to perform operations in any text terminal, without additional components such as a desktop application or a web interface.

Transparent connections

The suite includes a component called Tungsten Connector, which is a sort of high-performance proxy between the application and the database. Instead of connecting your applications to the DBMS, you connect them to a Tungsten Connector, which looks and feels like a MySQL (or PostgreSQL) database. The difference is that, when the master changes, the connector gets notified by the Tungsten Manager and immediately re-routes the underlying connections to the appropriate server. Depending on how smart your application is, you can use the Tungsten Connector in two ways:

- Static routing mode: you create one (or more) connectors that will always bring you to the master, and use that connection whenever your application needs to write. You also create one or more connectors that will always give you access to a slave, and use those whenever your application needs to read.

- Smart mode: you ask the connector to detect what you are doing and direct your queries to the appropriate server. This mode sends all transactions and updates to the master, and every read query that is not inside a transaction to the slaves. This mode can also guarantee data consistency, by directing the read of a just-saved record to a slave that has already received that record.
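
To make the smart mode rules above more concrete, here is a minimal sketch in Python. It is not Tungsten Connector code and does not use its API: the class `SmartRouter`, the method `note_write`, the regular expressions, and the idea of comparing a per-session "last written" sequence number against each slave's replicated position are all assumptions made for illustration.

```python
import re

# Hypothetical statement classifiers; a real proxy would inspect the wire protocol.
WRITE_RE = re.compile(r"^\s*(insert|update|delete|replace|create|alter|drop|truncate)\b", re.I)
BEGIN_RE = re.compile(r"^\s*(begin|start\s+transaction)\b", re.I)
END_RE = re.compile(r"^\s*(commit|rollback)\b", re.I)


class SmartRouter:
    """Per-session router: transactions and writes go to the master,
    reads outside a transaction go to a slave that is caught up."""

    def __init__(self, master, slave_positions):
        self.master = master
        # Slave name -> last applied sequence number (assumed to be reported
        # by the replication layer).
        self.slave_positions = dict(slave_positions)
        self.in_transaction = False
        # Highest sequence number written by this session so far.
        self.last_write_seqno = 0

    def note_write(self, seqno):
        """Record the sequence number the master assigned to our last write."""
        self.last_write_seqno = max(self.last_write_seqno, seqno)

    def route(self, sql):
        """Return the name of the server that should run this statement."""
        if BEGIN_RE.match(sql):
            self.in_transaction = True
            return self.master
        if END_RE.match(sql):
            self.in_transaction = False
            return self.master
        if self.in_transaction or WRITE_RE.match(sql):
            return self.master
        # Read outside a transaction: use the first slave that has already
        # received everything this session wrote.
        for name in sorted(self.slave_positions):
            if self.slave_positions[name] >= self.last_write_seqno:
                return name
        # No slave is caught up yet, so fall back to the master.
        return self.master


if __name__ == "__main__":
    router = SmartRouter("master1", {"slave1": 10, "slave2": 12})
    print(router.route("SELECT 1"))            # slave1
    print(router.route("UPDATE t SET x = 1"))  # master1
    router.note_write(12)
    print(router.route("SELECT x FROM t"))     # slave2
```

In this sketch, the read that follows the update skips `slave1` because it has only applied sequence number 10, while the session has written up to 12. That is one plausible way to get the "read your own writes" guarantee described above.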

No VIPs !!!

The failover and switch features are not new in the replication arena. There are tools that do something similar and keep an application connected to the same IP using virtual IPs. I don't like virtual IPs, as they are dumb stateless components between two stateful elements, and I am not the only one who dislikes them (See Virtual IP Addresses and Their Discontents for Database Availability). Using Tungsten Connector instead of a dumb virtual IP makes life so much easier. When you do a failover with a VIP, quite often the application hangs, as the client doesn't detect that the server on the other side has gone away, and thus your failover technology has to somehow identify the hanging connections and cut them: a very painful experience. Instead, the Tungsten Connector will either kill the connection immediately or reroute your query, depending on the needs, and your application doesn't get more than a hiccup.

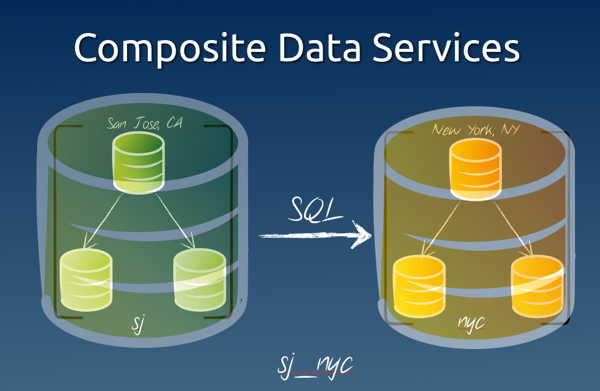

Multi site switch and failover

A recent addition to the suite is the ability to handle whole sites as single servers. The suite can create and maintain a so-called composite data service, which is a cluster that is seen and treated as a single server. In a disaster recovery scenario, you want to have a functioning site in one location and a relay site in another location, ready to take over when sudden disaster strikes. Here's an example of what you can get:

    cctrl -multi -expert
    Tungsten Enterprise 1.5.0 build 426
    sjc: session established
    [LOGICAL:EXPERT] / > ls
    +----------------------------------------------------------------------------+
    |DATA SERVICES:                                                              |
    +----------------------------------------------------------------------------+
    great_company
    nyc
    sjc
    [LOGICAL:EXPERT] / > use great_company
    [LOGICAL:EXPERT] /great_company > ls
    COORDINATOR[qa.tx6.continuent.com:AUTOMATIC:ONLINE]
    DATASOURCES:
    +----------------------------------------------------------------------------+
    |nyc(composite master:ONLINE)                                                |
    |STATUS [OK] [2012/03/05 10:59:28 PM CET]                                    |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |sjc(composite slave:ONLINE)                                                 |
    |STATUS [OK] [2012/03/05 10:59:30 PM CET]                                    |
    +----------------------------------------------------------------------------+

In this scenario, there is a data service called "great_company", which contains two sites that look like regular servers. Inside each site, there is a cluster, which we can examine at will:

    [LOGICAL:EXPERT] /great_company > use nyc
    nyc: session established
    [LOGICAL:EXPERT] /nyc > ls
    COORDINATOR[qa.tx2.continuent.com:AUTOMATIC:ONLINE]
    DATASOURCES:
    +----------------------------------------------------------------------------+
    |qa.tx1.continuent.com(master:ONLINE, progress=1397, THL latency=0.857)      |
    |STATUS [OK] [2012/03/05 10:58:42 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=master, state=ONLINE)                                     |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=54, active=0)                                         |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |qa.tx2.continuent.com(slave:ONLINE, progress=1397, latency=0.000)           |
    |STATUS [OK] [2012/03/05 11:02:19 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=slave, master=qa.tx1.continuent.com, state=ONLINE)        |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=0, active=0)                                          |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |qa.tx3.continuent.com(slave:ONLINE, progress=1397, latency=0.000)           |
    |STATUS [OK] [2012/03/05 10:58:31 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=slave, master=qa.tx1.continuent.com, state=ONLINE)        |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=0, active=0)                                          |
    +----------------------------------------------------------------------------+

There is a master and two slaves. For each server, we can see the vitals at a glance. The relay site offers a similar view, with the distinction that, instead of a master, there is a relay server. All changes coming from the master in the main site will also go to the relay server, and from there to the slaves in the second site.
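
The path a change follows through the two sites can be worked out from the `master=` field that each replicator reports. The sketch below is only an illustration: the host names and upstream values are the ones shown in the console listings in this post, while the `UPSTREAM` dictionary and the `replication_path` function are names made up for the example and are not part of Tungsten.

```python
# Each host mapped to the server it replicates from, as reported by the
# REPLICATOR(master=...) fields in the console listings. None marks the master.
UPSTREAM = {
    "qa.tx1.continuent.com": None,                     # master (nyc)
    "qa.tx2.continuent.com": "qa.tx1.continuent.com",  # slave (nyc)
    "qa.tx3.continuent.com": "qa.tx1.continuent.com",  # slave (nyc)
    "qa.tx6.continuent.com": "qa.tx1.continuent.com",  # relay (sjc)
    "qa.tx7.continuent.com": "qa.tx6.continuent.com",  # slave (sjc)
    "qa.tx8.continuent.com": "qa.tx6.continuent.com",  # slave (sjc)
}


def replication_path(host, upstream=UPSTREAM):
    """Return the chain of servers a change crosses to reach `host`,
    starting from the master."""
    path = [host]
    seen = {host}
    while upstream[path[-1]] is not None:
        parent = upstream[path[-1]]
        if parent in seen:
            raise ValueError("replication loop detected at " + parent)
        seen.add(parent)
        path.append(parent)
    path.reverse()
    return path


if __name__ == "__main__":
    # A slave in the second site receives changes through the relay.
    print(" -> ".join(replication_path("qa.tx7.continuent.com")))
    # A slave in the main site receives them directly from the master.
    print(" -> ".join(replication_path("qa.tx2.continuent.com")))
```

The slaves in the second site sit two hops from the master, which is why the relay server is the only element in that site that talks to the main site.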

    [LOGICAL:EXPERT] /nyc > use sjc
    [LOGICAL:EXPERT] /sjc > ls
    COORDINATOR[qa.tx6.continuent.com:AUTOMATIC:ONLINE]
    DATASOURCES:
    +----------------------------------------------------------------------------+
    |qa.tx6.continuent.com(relay:ONLINE, progress=1397, THL latency=4.456)       |
    |STATUS [OK] [2012/03/05 10:58:32 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=relay, master=qa.tx1.continuent.com, state=ONLINE)        |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=0, active=0)                                          |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |qa.tx7.continuent.com(slave:ONLINE, progress=1397, latency=0.000)           |
    |STATUS [OK] [2012/03/05 10:59:03 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=slave, master=qa.tx6.continuent.com, state=ONLINE)        |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=0, active=0)                                          |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |qa.tx8.continuent.com(slave:ONLINE, progress=1397, latency=0.000)           |
    |STATUS [OK] [2012/03/05 10:58:30 PM CET]                                    |
    +----------------------------------------------------------------------------+
    |  MANAGER(state=ONLINE)                                                     |
    |  REPLICATOR(role=slave, master=qa.tx6.continuent.com, state=ONLINE)        |
    |  DATASERVER(state=ONLINE)                                                  |
    |  CONNECTIONS(created=0, active=0)                                          |
    +----------------------------------------------------------------------------+

If you need to promote the relay site to be the main one, all you need to do is run a switch command:

    [LOGICAL:EXPERT] /sjc > use great_company
    [LOGICAL:EXPERT] /great_company > switch
    SELECTED SLAVE: 'sjc@great_company'
    FLUSHING TRANSACTIONS THROUGH 'qa.tx1.continuent.com@nyc'
    PUT THE NEW MASTER 'sjc@great_company' ONLINE
    PUT THE PRIOR MASTER 'nyc@great_company' ONLINE AS A SLAVE
    SWITCH TO 'sjc@great_company' WAS SUCCESSFUL
    [LOGICAL:EXPERT] /great_company > ls
    COORDINATOR[qa.tx6.continuent.com:AUTOMATIC:ONLINE]
    DATASOURCES:
    +----------------------------------------------------------------------------+
    |nyc(composite slave:ONLINE)                                                 |
    |STATUS [OK] [2012/03/06 09:44:48 AM CET]                                    |
    +----------------------------------------------------------------------------+
    +----------------------------------------------------------------------------+
    |sjc(composite master:ONLINE)                                                |
    |STATUS [OK] [2012/03/06 09:44:47 AM CET]                                    |
    +----------------------------------------------------------------------------+

If disaster strikes, instead of "switch" you say "failover", and then use the relay site transparently. Did I mention that Tungsten Connector can be configured to use a composite data service transparently? It can, and if you switch your operations from one coast to another, the applications will follow suit without any manual intervention. It's so cool! I am sure any geek must love it!

BTW: this is not a toy application. This suite is handling data centers that are huge by any standard you care to use, with 100+ terabytes moved through this technology.
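
For readers who want to picture what the site-level switch in the console session does, here is a small Python model of it. It is a sketch under assumptions, not the Tungsten Manager's logic: the `Site` class, the `switch` function, and the step labels are invented for illustration, and the four steps simply mirror the four progress lines that the console prints (select a slave, flush transactions through the old master, bring the new master online, bring the prior master back as a slave).

```python
from dataclasses import dataclass


@dataclass
class Site:
    """One member of a composite data service (hypothetical model)."""
    name: str
    role: str            # "composite master" or "composite slave"
    state: str = "ONLINE"


def switch(sites):
    """Swap the composite master with an online composite slave.

    Returns the list of steps taken, in order, as (label, site name) pairs.
    """
    old_master = next((s for s in sites if s.role == "composite master"), None)
    if old_master is None:
        raise RuntimeError("no composite master found")
    candidates = [s for s in sites
                  if s.role == "composite slave" and s.state == "ONLINE"]
    if not candidates:
        raise RuntimeError("no online composite slave to promote")
    new_master = candidates[0]

    steps = [("select slave", new_master.name),
             ("flush transactions through", old_master.name)]
    new_master.role = "composite master"
    steps.append(("put new master online", new_master.name))
    old_master.role = "composite slave"
    steps.append(("put prior master online as slave", old_master.name))
    return steps


if __name__ == "__main__":
    service = [Site("nyc", "composite master"), Site("sjc", "composite slave")]
    for label, name in switch(service):
        print(label + ": " + name)
    print([(s.name, s.role) for s in service])
```

After the call, `nyc` holds the composite slave role and `sjc` the composite master role, matching the final `ls` output in the session.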
