Multi-master topologies blues

Tungsten Replicator is a powerful replication engine that, in addition to providing the same features as MySQL Replication, can also create several topologies, such as the following:

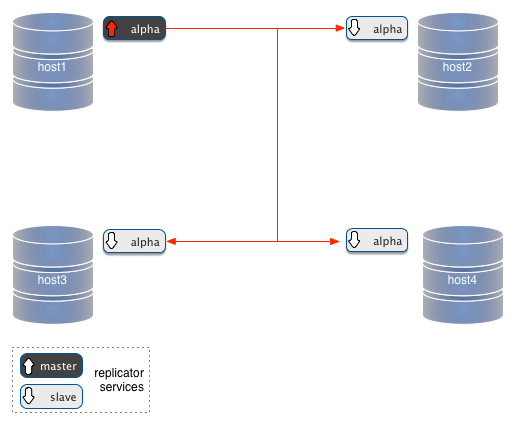

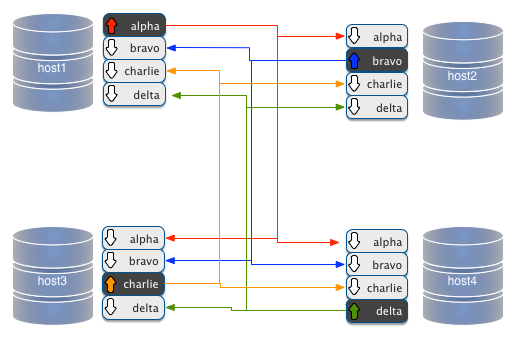

- all-masters: every node in the deployment is a master, and all nodes are connected point-to-point, so that there is no single point of failure (SPOF).

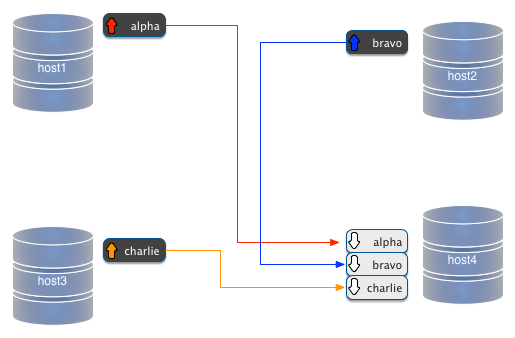

- fan-in: several masters replicate into a single slave.

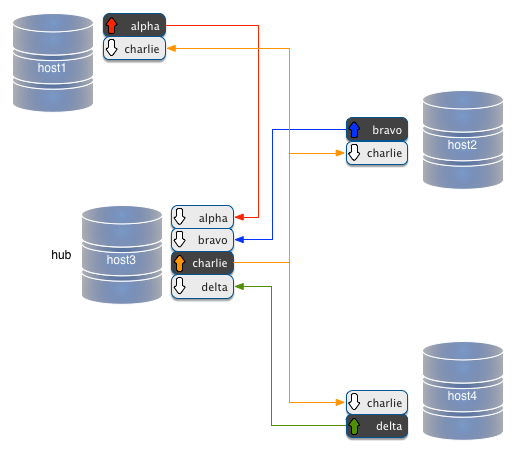

- star: an all-masters topology where one node acts as a hub, which simplifies the deployment at the price of creating a SPOF.
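To put the trade-off between these topologies in numbers, here is a back-of-the-envelope sketch that counts the point-to-point replication links (slave services) each topology needs. It assumes one slave service per link: in all-masters every master replicates from every other master, in fan-in every slave replicates from every master, and in the star each spoke replicates from the hub and the hub replicates from each spoke. The function name `count_links` is hypothetical and is not part of any Tungsten tool.

```shell
# count_links TOPOLOGY NODES [SLAVES]
# Hypothetical helper: count slave services, assuming one per point-to-point link.
#   all-masters, star: NODES is the total number of nodes (for the star, hub included)
#   fan-in:            NODES is the number of masters, SLAVES the number of fan-in slaves
count_links() {
  local topology=$1 nodes=$2 slaves=${3:-0}
  case $topology in
    all-masters)
      # every master runs a slave service for each of the other masters
      echo $(( nodes * (nodes - 1) )) ;;
    fan-in)
      # every fan-in slave runs one slave service per master
      echo $(( nodes * slaves )) ;;
    star)
      # each spoke replicates from the hub, and the hub from each spoke
      echo $(( 2 * (nodes - 1) )) ;;
    *)
      echo "unknown topology: $topology" >&2
      return 1 ;;
  esac
}
```

For four nodes, `count_links all-masters 4` prints 12 while `count_links star 4` prints 6: the star halves the number of links, and the price is that every change has to travel through the hub.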

The real weakness of these topologies is that they are not easy to set up. Installation requires several commands, and running them unassisted is a daunting task. Some time ago, we introduced a set of scripts (the Tungsten Cookbook) that allow you to install multi-master topologies with a single command. Of course, that single command is just a shell script that creates and runs all the commands needed for the deployment. The real downer is the installation time: an all-masters topology with 4 nodes requires 17 operations, which take about 8 minutes in total. Until now, these have been complex and rather slow operations.

Meet The TPM

Notice: these examples require a recent nightly build of Tungsten Replicator (e.g. 2.1.1-120), which you can download from http://bit.ly/tr_21_builds

But technology advances. The current tungsten-installer, the tool that installs Tungsten Replicator instances, has evolved into a tool that has long been used to install our flagship product, Continuent Tungsten (formerly known as ‘Tungsten Enterprise’). The ‘tpm’ (Tungsten Package Manager) has outgrown its name: it does far more than manage packages and actually provides a first-class installation experience. Among other things, it runs hundreds of validation checks to make sure that the operating system, the network, and the database servers are fit for the installation. Not only that, it installs all components on all servers in parallel.

So users of our commercial solution have enjoyed this more advanced installation method for quite a long time, and tpm itself has gained features, becoming able to install single Tungsten Replicator instances in addition to the more complex HA clusters. Looking at the tool a few weeks ago, we realized that tpm is so advanced that it could easily support Tungsten Replicator topologies with minimal additions. And now we have it!

The latest nightly builds of Tungsten Replicator include the ability to install multi-master topologies using tpm. Now, not only can you perform these installation tasks using the cookbook recipes, but the commands are so easy that you can actually run them without help from shell scripts.

Let’s start with the plain master/slave installation (Listing 1). The command looks similar to the one used with tungsten-installer, with a slightly simplified syntax: we say members instead of cluster-hosts, master instead of master-host, and replication-user and replication-password instead of datasource-user and datasource-password. Looking at this command alone, it does not seem worth adopting a new syntax just to save a few keystrokes.

./tools/tpm install alpha \
--topology=master-slave \
--home-directory=/opt/continuent/replicator \
--replication-user=tungsten \
--replication-password=secret \
--master=host1 \
--slaves=host2,host3,host4 \
--start

Listing 1: master/slave installation.
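The option renaming described above is mechanical enough to sketch. The following function is a hypothetical helper, not shipped with Tungsten: it maps only the four tungsten-installer options named in the text to their tpm names and passes every other option through unchanged, so it is not a full converter between the two tools.

```shell
# to_tpm_option OPTION
# Hypothetical helper: rename the four tungsten-installer options mentioned
# in the text to their tpm equivalents, keeping the value after "=".
# Any other option is printed unchanged.
to_tpm_option() {
  local opt=$1
  case $opt in
    --cluster-hosts=*)       printf '%s\n' "--members=${opt#*=}" ;;
    --master-host=*)         printf '%s\n' "--master=${opt#*=}" ;;
    --datasource-user=*)     printf '%s\n' "--replication-user=${opt#*=}" ;;
    --datasource-password=*) printf '%s\n' "--replication-password=${opt#*=}" ;;
    *)                       printf '%s\n' "$opt" ;;
  esac
}
```

Applied to a whole argument list, `for opt in "$@"; do to_tpm_option "$opt"; done` prints the renamed options one per line.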

However, the real bargain appears when we compare installation times. Even this fairly simple installation, which took just under 2 minutes with tungsten-installer, shows a significant gain: it now runs in about 30 seconds.

Where we see the most important advantages, though, is when we deploy multiple masters. Remember the all-masters installation that took 8 minutes, which I mentioned a few paragraphs above? Using tpm, it now runs in 45 seconds, and it is only one command. Let’s have a look:

./tools/tpm install four_musketeers \
--topology=all-masters \
--home-directory=/opt/continuent/replicator \
--replication-user=tungsten \
--replication-password=secret \
--masters=host1,host2,host3,host4 \
--master-services=alpha,bravo,charlie,delta \
--start

Listing 2: all-masters installation.

It’s worth examining this compact new command line by line:

- ./tools/tpm install four_musketeers: This calls tpm in ‘install’ mode on the entity ‘four_musketeers’. This entity is a data service, which users of other Tungsten products and readers of Robert Hodges’ blog will recognize as a more precise term for what we commonly call ‘a cluster.’ The data service appears in the installation but, so far, does not play a large role in replicator usage. Just note that you can name it as you wish, and the name has little effect on the following tasks.

- --topology=all-masters: Some of the inner workings of the installer depend on this directive, which tells tpm what kind of topology to expect. If you remember what we needed to do with tungsten-installer + configure-service, you will have an idea of what this directive tells tpm to do, and of what you are now spared.

- --home-directory=/opt/continuent/replicator: Nothing fancy here. This is where we want to install Tungsten.

- --replication-user=tungsten: The database user that will take care of replication.

- --replication-password=secret: The password for the above user.

- --masters=host1,host2,host3,host4: The list of nodes where a master is deployed. In an all-masters topology there is no need to list the slaves: by definition, every host will have a slave service for each of the remaining masters.

- --master-services=alpha,bravo,charlie,delta: The list of service names to use in the topology, one per master. We can use any names we want, including the host names or the names of your favorite superheroes.

- --start: With this, the replicator starts running immediately after the deployment.
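The rule in the `--masters` item (each host runs its own master service plus a slave service for every other master) can be expressed as a small sketch. The function `all_masters_layout` is hypothetical; it assumes, as in Listing 2, that the Nth entry of `--master-services` belongs to the Nth host in `--masters`, and it prints the services each host would run.

```shell
# all_masters_layout MASTERS SERVICES
# Hypothetical helper. MASTERS and SERVICES are comma-separated lists matched
# by position, as in --masters and --master-services. For each host, print
# its own service as master and every other service as slave.
all_masters_layout() {
  local -a hosts services
  IFS=, read -r -a hosts <<< "$1"
  IFS=, read -r -a services <<< "$2"
  if (( ${#hosts[@]} != ${#services[@]} )); then
    echo "need exactly one service per master" >&2
    return 1
  fi
  local i j line
  for (( i = 0; i < ${#hosts[@]}; i++ )); do
    line="${hosts[i]}:"
    for (( j = 0; j < ${#services[@]}; j++ )); do
      if (( i == j )); then
        line+=" ${services[j]}[master]"
      else
        line+=" ${services[j]}[slave]"
      fi
    done
    printf '%s\n' "$line"
  done
}
```

Running `all_masters_layout host1,host2,host3,host4 alpha,bravo,charlie,delta` prints four lines whose master/slave pattern matches what show_cluster reports for this deployment.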

This command produces, in 45 seconds, the same deployment that you get with tungsten-installer in about 8 minutes.

The command is so simple that you could use it without assistance. However, if you like the idea of the Tungsten Cookbook assembling your commands and running them, giving you access to several handy utilities in the process, you can do that right now. Besides, if you need to customize your installation with ports, custom paths, and management tools, you will appreciate the help provided by the Tungsten Cookbook.

# (edit ./cookbook/USER_VALUES.sh)
export USE_TPM=1
./cookbook/install_all_masters

Listing 3: invoking tpm installation for all-masters using a cookbook recipe.

When you define USE_TPM, the installation recipe uses tpm instead of tungsten-installer. Whatever verbosity level you have chosen, you will realize that you are using tpm because the installation is over very quickly.

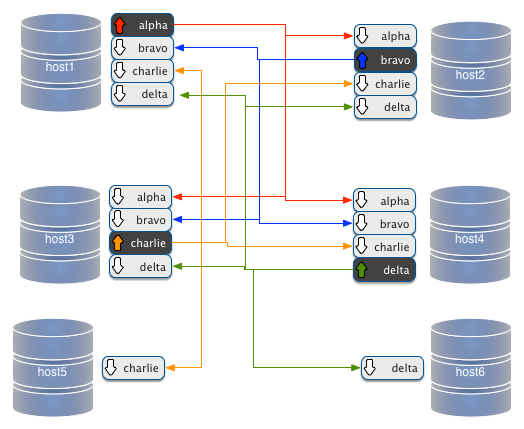

The above command (whether run manually or through the built-in recipe) produces a data service with four nodes, all of which are masters, and you can visualize them with:

./cookbook/show_cluster
--------------------------------------------------------------------------------------
Topology: 'ALL_MASTERS'
--------------------------------------------------------------------------------------
# node host1
alpha [master] seqno: 15 - latency: 0.058 - ONLINE
bravo [slave] seqno: 15 - latency: 0.219 - ONLINE
charlie [slave] seqno: 15 - latency: 0.166 - ONLINE
delta [slave] seqno: 15 - latency: 1.161 - ONLINE
# node host2
alpha [slave] seqno: 15 - latency: 0.100 - ONLINE
bravo [master] seqno: 15 - latency: 0.179 - ONLINE
charlie [slave] seqno: 15 - latency: 0.179 - ONLINE
delta [slave] seqno: 15 - latency: 1.275 - ONLINE
# node host3
alpha [slave] seqno: 15 - latency: 0.093 - ONLINE
bravo [slave] seqno: 15 - latency: 0.245 - ONLINE
charlie [master] seqno: 15 - latency: 0.099 - ONLINE
delta [slave] seqno: 15 - latency: 1.198 - ONLINE
# node host4
alpha [slave] seqno: 15 - latency: 0.145 - ONLINE
bravo [slave] seqno: 15 - latency: 0.256 - ONLINE
charlie [slave] seqno: 15 - latency: 0.208 - ONLINE
delta [master] seqno: 15 - latency: 0.371 - ONLINE

Listing 4: The cluster overview after an all-masters installation.
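When checking a larger deployment by eye becomes tedious, an overview like this can be checked mechanically. This is a minimal sketch, assuming the exact line format shown in Listing 4 (a `# node HOST` header followed by one `SERVICE [master|slave] seqno: ... - STATE` line per service); the function `check_all_masters` is hypothetical, and a different output format would need a different parser.

```shell
# check_all_masters
# Hypothetical checker: read show_cluster-style output on stdin (format as in
# Listing 4), print one line per problem, and return non-zero if any node
# does not have exactly one master service or any service is not ONLINE.
check_all_masters() {
  awk '
    BEGIN { bad = 0 }
    /^# node / { node = $3; nodes[node] = 1; next }
    /\[(master|slave)\]/ {
      if ($2 == "[master]") masters[node]++
      if ($NF != "ONLINE") { print node ": " $1 " is " $NF; bad = 1 }
    }
    END {
      for (n in nodes)
        if (masters[n] != 1) { print n ": " (masters[n] + 0) " master services"; bad = 1 }
      exit bad
    }'
}
```

For example, `./cookbook/show_cluster | check_all_masters && echo OK` would print OK only when every node reports exactly one master service and all services are ONLINE.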

More topologies: fan-in

Here is the command that installs three masters on host1, host2, and host3, all fanning in to host4, which will have only 3 slave services and no master.

./tools/tpm install many_towns \
--replication-user=tungsten \
--replication-password=secret \
--home-directory=/opt/continuent/replication \
--masters=host1,host2,host3 \
--slaves=host4 \
--master-services=alpha,bravo,charlie \
--topology=fan-in \
--start

Listing 5: Installing a fan-in topology.

You will notice that it’s quite similar to the installation of all-masters. The most notable difference is that, in addition to the list of masters, there is also a list of slaves.

--masters=host1,host2,host3 \
--slaves=host4 \

Listing 6: How a fan-in topology is defined.

We have three masters and one slave listed. If we modified the installation command as follows, we would have two fan-in slaves, each getting data from two masters.

--masters=host1,host2 \
--slaves=host3,host4 \
#
# The same as:
#
--masters=host1,host2 \
--members=host1,host2,host3,host4 \

Listing 7: Reducing the number of masters increases the slaves in a fan-in topology.

Now we will have two masters in host1 and host2, and two fan-in slaves in host3 and host4.

If we remove another master from the list, we will end up with a simple master/slave topology.
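The equivalence in Listing 7 is simple list arithmetic: the slaves are the members that are not masters. The hypothetical function below (not part of tpm) performs that subtraction on comma-separated lists, which also makes the last remark concrete: with a single master, every other member becomes a plain slave.

```shell
# fanin_slaves MASTERS MEMBERS
# Hypothetical helper: print, comma-separated, the members that are not
# listed as masters, i.e. the hosts that end up as slaves.
fanin_slaves() {
  local -a masters members slaves=()
  local host m is_master
  IFS=, read -r -a masters <<< "$1"
  IFS=, read -r -a members <<< "$2"
  for host in "${members[@]}"; do
    is_master=0
    for m in "${masters[@]}"; do
      if [[ $host == "$m" ]]; then is_master=1; fi
    done
    if (( is_master == 0 )); then slaves+=("$host"); fi
  done
  local IFS=,   # join the result with commas
  printf '%s\n' "${slaves[*]}"
}
```

With the values of Listing 7, `fanin_slaves host1,host2 host1,host2,host3,host4` prints `host3,host4`, the same list given to `--slaves`.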

And a star

The most complex topology is the star, where all nodes are masters and one node acts as a hub between each endpoint and the others.

./tools/tpm install constellation \
--replication-user=tungsten \
--replication-password=secret \
--home-directory=/opt/continuent/replication \
--masters=host1,host2,host4 \
--hub=host3 \
--hub-service=charlie \
--master-services=alpha,bravo,delta \
--topology=star \
--start

Listing 8: Installing a star topology.

The only complication with this topology is that it requires two more parameters than all-masters or fan-in: we need to define which node is the hub, and how to name the hub service. In exchange, this single command produces the same deployment that would otherwise take 11 commands with tungsten-installer + configure-service.
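Following the pattern of Listing 8, where the spokes go in `--masters` with one entry each in `--master-services`, and the hub and its service are given separately, a pre-flight check before calling tpm could look like the sketch below. The function `check_star_args` and the rules it enforces are assumptions drawn from that listing; they are not tpm’s own validation and do not replace the checks tpm performs during installation.

```shell
# check_star_args MASTERS MASTER_SERVICES HUB HUB_SERVICE
# Hypothetical pre-flight check following the pattern of Listing 8:
# one service per spoke (matched by position), and the hub and its
# service kept out of the spoke lists.
check_star_args() {
  local -a masters services
  IFS=, read -r -a masters <<< "$1"
  IFS=, read -r -a services <<< "$2"
  local hub=$3 hub_service=$4 x
  if (( ${#masters[@]} != ${#services[@]} )); then
    echo "masters and master-services differ in length" >&2
    return 1
  fi
  for x in "${masters[@]}"; do
    if [[ $x == "$hub" ]]; then echo "hub $hub is also listed as a master" >&2; return 1; fi
  done
  for x in "${services[@]}"; do
    if [[ $x == "$hub_service" ]]; then echo "hub service $hub_service is reused" >&2; return 1; fi
  done
  return 0
}
```

With the values of Listing 8, `check_star_args host1,host2,host4 alpha,bravo,delta host3 charlie` succeeds silently.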

More TPM: building complex clusters

The one-command installation is just one of tpm’s many features. Its real power lies in its ability to compose more complex topologies. The ones shown above are complex, but since they are common, there are one-command recipes that simplify their deployment. There are cases, however, when we want to go beyond these well-known topologies and compose our own cluster. For example, we may want an all-masters topology with two additional simple slaves attached to two of the masters. To compose a custom topology, we can use tpm in stages: we configure the options that are common to the whole deployment, and then we shape each component of the cluster.

#1
./tools/tpm configure defaults \
--reset \
--replication-user=tungsten \
--replication-password=secret \
--home-directory=/home/tungsten/installs/cookbook \
--start

#2
./tools/tpm configure four_musketeers \
--masters=host1,host2,host3,host4 \
--master-services=alpha,bravo,charlie,delta \
--topology=all-masters

#3
./tools/tpm configure charlie \
--hosts=host3,host5 \
--slaves=host5 \
--master=host3

#4
./tools/tpm configure delta \
--hosts=host4,host6 \
--slaves=host6 \
--master=host4

#5
./tools/tpm install

Listing 9: A composite tpm command.

In Listing 9, we have 5 tpm commands, which together make up a composite deployment order. In segment #1, we tell tpm the options that apply to all the following commands, so we won’t have to repeat them. In segment #2, we define the same 4-master topology that we did in Listing 2. Segments #3 and #4 create a slave service each on host5 and host6, with their respective masters on host3 and host4. The final segment #5 tells tpm to take all the information created by the previous commands and finally run the installation. You may be wondering how tpm keeps track of all the commands and recognizes that they belong to the same deployment. After every command, tpm adds information to a file named deploy.cfg, which contains a JSON record of the configuration we are building. Since there may be previous deployment attempts from the same place, we add the option --reset to our first command, making sure that we start a new topology rather than adding to a previous one (adding to the existing configuration is indeed what we want when updating an existing data service).

The result is what you get in the following image:

A word of caution about the above topology. The slaves in host5 and host6 will only get the changes originated in their respective masters. Therefore, host5 will only get changes that were originated in host4, while host6 will only get changes from host4. If a change comes from host1 or host2, they will be propagated to host1 to host4, because each host has a dedicated communication link to each of the other masters, but the data does not pass through to the single slaves.

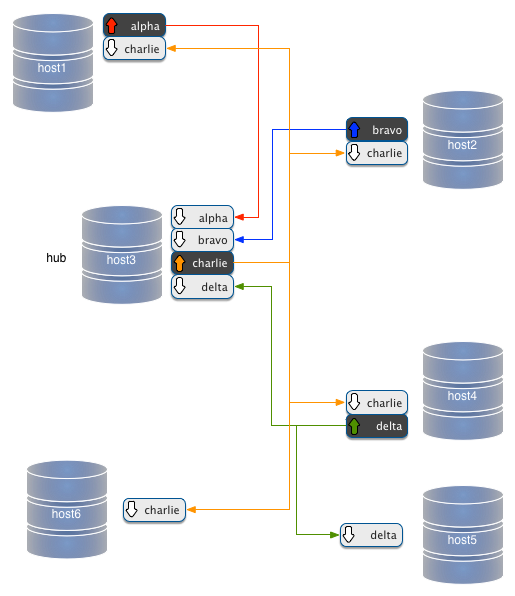

The case is different when we add slave nodes to a star topology, as in the following example.

./tools/tpm configure defaults \
--reset \
--replication-user=tungsten \
--replication-password=secret \
--home-directory=/home/tungsten/installs/cookbook \
--start

./tools/tpm configure constellation \
--masters=host1,host2,host4 \
--master-services=alpha,bravo,delta \
--hub=host3 \
--hub-service=charlie \
--topology=star

./tools/tpm configure charlie \
--hosts=host3,host5 \
--slaves=host5 \
--master=host3

./tools/tpm configure delta \
--hosts=host4,host6 \
--slaves=host6 \
--master=host4

./tools/tpm install

In a star topology, the hub is a pass-through master: everything that is applied to this node is saved to its binary logs and put back into circulation. In this extended topology, the slave service on host6 is attached to a spoke of the star (host4), so it will get only changes that were created on its master. Instead, the slave on host5, which is attached to the hub master (host3), will get all the changes coming from any node.

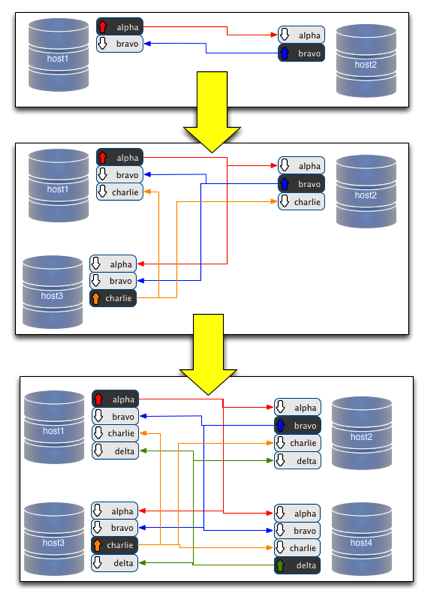
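The propagation rule from the two extended examples can be summarized in a short sketch. In the star example, host5’s master is host3 (the hub) and host6’s master is host4 (a spoke); in the all-masters example of Listing 9, the slaves hang off host3 and host4, both ordinary masters. The function `origins_for_slave` is hypothetical and simply encodes the behavior described in this article.

```shell
# origins_for_slave TOPOLOGY ATTACHED_HOST ALL_NODES [HUB]
# Hypothetical helper encoding the rule described above. A plain slave
# receives only what its master passes on:
#   all-masters, or a star spoke: only changes originated on that host
#   star hub (pass-through master): changes originated on every node
# ALL_NODES is the comma-separated list of every node that originates changes.
origins_for_slave() {
  local topology=$1 attached=$2 all=$3 hub=${4:-}
  case $topology in
    star)
      if [[ $attached == "$hub" ]]; then
        printf '%s\n' "$all"
      else
        printf '%s\n' "$attached"
      fi ;;
    all-masters)
      printf '%s\n' "$attached" ;;
    *)
      echo "unsupported topology: $topology" >&2
      return 1 ;;
  esac
}
```

For the star example, `origins_for_slave star host3 host1,host2,host3,host4 host3` (the slave on host5) prints all four hosts, while `origins_for_slave star host4 host1,host2,host3,host4 host3` (the slave on host6) prints only `host4`.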

Extending clusters

So far, the biggest challenge when working with multi-master topologies has been extending an existing cluster. Starting with two nodes and then expanding to three is quite a challenging task (Figure 8).

Using tpm, though, the task becomes quite easy. Let's revisit the all-masters installation command, similar to the one we saw at the start of this article:

./tools/tpm install musketeers \
--reset \
--topology=all-masters \
--home-directory=/opt/continuent/replicator \
--replication-user=tungsten \
--replication-password=secret \
--masters=host1,host2,host3 \
--master-services=athos,porthos,aramis \
--start

If we want to add a host 'host4', running a service called 'dartagnan', we just have to modify the above command slightly:

./tools/tpm configure musketeers \
--reset \
--topology=all-masters \
--home-directory=/opt/continuent/replicator \
--replication-user=tungsten \
--replication-password=secret \
--masters=host1,host2,host3,host4 \
--master-services=athos,porthos,aramis,dartagnan \
--start

./tools/tpm update

That's all it takes. The configure command is almost a repetition of the install command, with the additional components added, and tpm update then deploys the change. The update also restarts the replicators, to bring the new configuration online.
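When a cluster grows, the only edits to the command are appending one host to `--masters` and one service to `--master-services`, keeping both lists aligned by position. The hypothetical helper below (not part of tpm) performs that append, refuses duplicates, and prints the two new values.

```shell
# add_master MASTERS SERVICES NEW_HOST NEW_SERVICE
# Hypothetical helper: append a host and its service to the comma-separated
# values of --masters and --master-services, refusing duplicates so that the
# two lists stay aligned. Prints the new masters, then the new services.
add_master() {
  local masters=$1 services=$2 host=$3 service=$4
  if [[ ,$masters, == *",$host,"* ]]; then
    echo "$host is already a master" >&2
    return 1
  fi
  if [[ ,$services, == *",$service,"* ]]; then
    echo "service $service already exists" >&2
    return 1
  fi
  printf '%s\n' "$masters,$host" "$services,$service"
}
```

For the example above, `add_master host1,host2,host3 athos,porthos,aramis host4 dartagnan` prints `host1,host2,host3,host4` and `athos,porthos,aramis,dartagnan`, the values used in the configure command before running `./tools/tpm update`.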

More is coming

tpm is such a complex tool that exploring it all in one session would be daunting. In addition to installing, you can update the data service, and thanks to its precise syntax, you can deploy a change exactly where you want it without leaving the staging directory. We will look at this with more examples soon.